TL;DR:

- AI-driven content generation, especially text-to-image (T2I) models, has evolved rapidly.

- Current T2I models excel at image generation but require complex prompts.

- A new approach, interactive text-to-image (iT2I), lets users create images through multi-turn dialogues with large language models (LLMs).

- iT2I makes image generation more user-friendly by removing the need for complex prompts.

- It maintains visual consistency across turns, supports a range of instructions, and integrates with existing language models.

- Experiments show that adding iT2I causes only minor degradation in language model performance.

- iT2I promises to be a significant innovation in AI content generation.

Main AI News:

In the fast-moving field of AI content generation, text-to-image (T2I) models have made rich, diverse, and imaginative AI-generated content possible. Yet communicating with these models through natural-language descriptions remains difficult, which creates a barrier for users without specialized expertise in prompt engineering.

Leading T2I methods such as Stable Diffusion excel at turning textual prompts into high-quality images. However, this quality comes at the cost of intricate prompt construction, full of carefully chosen word combinations, arcane tags, and annotations, which limits how accessible these models are to the average user. Current T2I models also have a limited grasp of natural language, so users must learn the idiosyncrasies of each model to communicate with it effectively. The many textual and numerical settings in T2I workflows, such as word weighting, negative prompts, and style keywords, add further complexity that can be daunting for non-professional users.

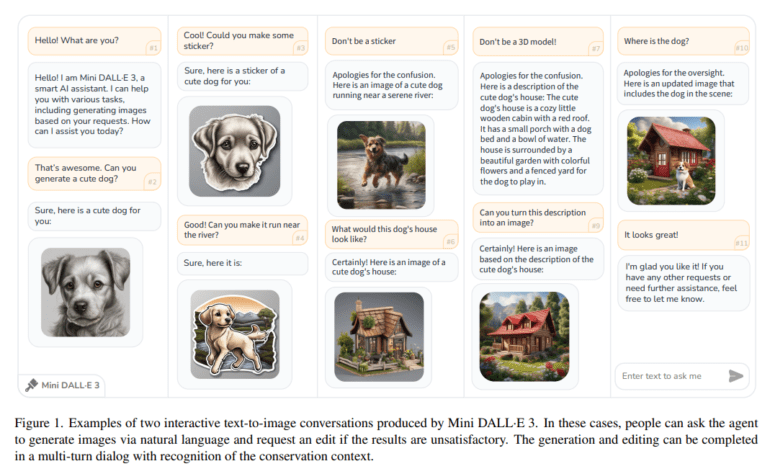

To address these limitations, a research team from China has introduced an approach called "interactive text-to-image" (iT2I). The method lets users hold multi-turn dialogues with large language models (LLMs), iteratively describing what they want in an image, giving feedback, and offering suggestions in natural language.

The iT2I approach combines established prompting techniques with off-the-shelf T2I models to extend what LLMs can do in image generation and refinement. Because users no longer need to write intricate prompts or tune configurations, the approach is far more user-friendly and accessible to non-professionals.
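The article does not describe iT2I's exact prompt format, so the following is only a minimal Python sketch, under stated assumptions, of how an LLM-driven interactive loop of this kind could work. It assumes the LLM sees the full conversation history and, when it decides an image is needed, wraps a detailed prompt in a hypothetical `<image>...</image>` tag that is parsed out and passed to an off-the-shelf T2I model. The tag format, `InteractiveSession`, `toy_llm`, and `toy_t2i` are illustrative names invented here, not the authors' implementation; the two toy functions stand in for a real chat model and a real T2I model such as Stable Diffusion.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical tag format (an assumption): the LLM is prompted to wrap
# every image it wants generated in <image>...</image>.
IMAGE_TAG = re.compile(r"<image>(.*?)</image>", re.DOTALL)


@dataclass
class InteractiveSession:
    llm: Callable[[List[dict]], str]  # chat model: message history -> reply text
    t2i: Callable[[str], str]         # T2I model: prompt -> image handle (e.g. a file path)
    history: List[dict] = field(default_factory=list)

    def turn(self, user_message: str) -> Tuple[str, List[str]]:
        """Run one dialogue turn; return the reply text and any generated images."""
        self.history.append({"role": "user", "content": user_message})
        reply = self.llm(self.history)
        self.history.append({"role": "assistant", "content": reply})
        # Extract the image prompts the LLM emitted and render each one.
        prompts = [p.strip() for p in IMAGE_TAG.findall(reply)]
        images = [self.t2i(p) for p in prompts]
        # Strip the tags so the user only sees the conversational text.
        text = IMAGE_TAG.sub("", reply).strip()
        return text, images


def toy_llm(history: List[dict]) -> str:
    # Stand-in for a real LLM: folds every user request so far into one prompt,
    # so later turns refine earlier ones instead of starting over.
    requests = [m["content"] for m in history if m["role"] == "user"]
    return "Here is the image. <image>" + ", ".join(requests) + "</image>"


def toy_t2i(prompt: str) -> str:
    # Stand-in for a real T2I model: returns a label instead of pixels.
    return f"image({prompt})"


if __name__ == "__main__":
    session = InteractiveSession(toy_llm, toy_t2i)
    print(session.turn("a cat on a sofa"))
    print(session.turn("make it watercolor"))
```

Because the stand-in LLM sees the whole history, the second request ("make it watercolor") refines the first rather than replacing it. This mirrors, in a very simplified way, how carrying multi-turn context can help keep successive images consistent; a real system would rely on the LLM itself to rewrite and merge the requests.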

The paper makes several contributions. It introduces Interactive Text-to-Image (iT2I), an approach in which users and AI agents hold multi-turn conversations to generate images interactively. iT2I is designed to maintain visual coherence across turns, work alongside language models, and handle a wide range of instructions for creating, modifying, selecting, and refining images. The paper also outlines a strategy for adapting language models to work with iT2I, and it points to applications in content generation, design, and interactive storytelling, all of which benefit from an easier path from textual descriptions to images. Finally, the technique can be integrated into existing LLMs.

To evaluate the approach, the authors ran a series of experiments: they measured the impact of iT2I on LLM capabilities, compared how different LLMs perform with it, and demonstrated iT2I in a range of practical scenarios. Adding iT2I prompts caused only minor degradation in LLM performance. Commercial LLMs reliably generated images aligned with the textual prompts, while open-source LLMs showed varying degrees of success. The demonstrations covered single-turn and multi-turn image generation as well as integrated text-and-image storytelling, showcasing the system's versatility.

Conclusion:

iT2I marks a significant shift in AI content generation. By simplifying image creation and making it more user-friendly, it promises to make AI-generated visual content more accessible and versatile. The approach could expand the market for AI-driven content creation tools, reaching a broader audience of non-professional users and opening new possibilities for creative content generation.