TL;DR:

- Intel’s Habana Gaudi accelerators are being adopted by AWS for training large language models (LLMs).

- DeepSpeed, an optimization library for PyTorch, is used to mitigate LLM training challenges and accelerate model development.

- AWS utilized Habana Gaudi-based Amazon EC2 DL1 instances in their work, achieving promising results.

- A compute cluster comprising 16 dl1.24xlarge instances, each with eight Habana Gaudi accelerators, was built using AWS Batch.

- DeepSpeed ZeRO1 optimizations were employed for pre-training the BERT 1.5B model, focusing on performance and cost-effectiveness.

- Intel Habana Gaudi demonstrates high scaling efficiency, staying above 90% even at eight instances (64 accelerators) running a 340-million-parameter BERT model.

Main AI News:

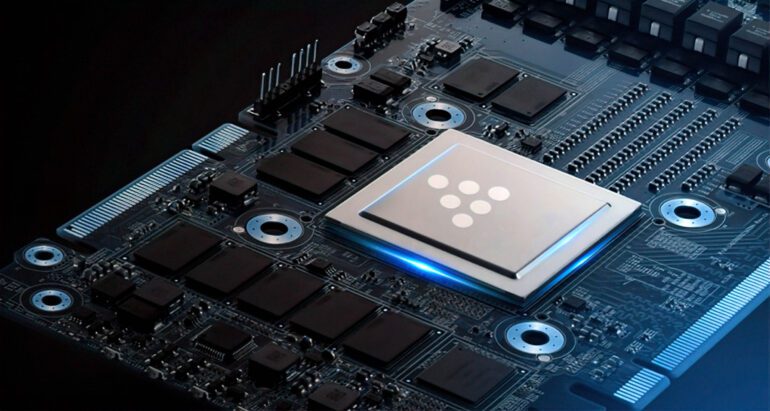

In the race to conquer the field of artificial intelligence (AI), Intel’s Habana has emerged as a key player, gaining broader deployments and significant recognition. Amazon Web Services (AWS), a leading cloud computing provider, recently turned to Intel’s 1st Generation Gaudi, a powerful deep learning accelerator, to enhance the training of large language models (LLMs). The results of this partnership have been so promising that AWS has decided to commercially offer DL1 EC2 instances, further solidifying Intel’s position in the market.

Developing LLMs with billions of parameters is a complex task with two recurring challenges: the limited memory of a single accelerator, and the need to scale efficiently across many accelerators. To address both, AWS researchers used DeepSpeed, an open-source deep learning optimization library for PyTorch that targets some of the most pressing LLM training challenges while accelerating model development and training. They paired it with Intel Habana Gaudi-based Amazon EC2 DL1 instances.
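To make the single-accelerator memory constraint concrete, here is a rough back-of-the-envelope sketch. It assumes mixed-precision training with the Adam optimizer (about 16 bytes of model state per parameter, the accounting commonly used to explain ZeRO), and it treats ZeRO stage 1 as sharding only the optimizer states across accelerators. The function name and the byte counts are illustrative assumptions, not figures reported by AWS, and activation memory is ignored entirely.

```python
GB = 1e9  # decimal gigabytes; this is a rough estimate only


def model_state_bytes(num_params: float, num_accelerators: int = 1, zero_stage: int = 0) -> float:
    """Approximate per-accelerator bytes for weights, gradients, and Adam states.

    Assumption (not from the article): mixed-precision Adam, i.e. per parameter
    2 bytes of 16-bit weights, 2 bytes of 16-bit gradients, and 12 bytes of
    fp32 optimizer state (master weights, momentum, variance).
    """
    if zero_stage not in (0, 1):
        raise ValueError("this sketch only models no ZeRO (0) or ZeRO stage 1")
    weights_and_grads = 4 * num_params
    optimizer_states = 12 * num_params
    if zero_stage == 1:
        # ZeRO stage 1 partitions the optimizer states across accelerators.
        optimizer_states /= num_accelerators
    return weights_and_grads + optimizer_states


params = 1.5e9  # BERT 1.5B, as named in the article
baseline_gb = model_state_bytes(params) / GB
zero1_gb = model_state_bytes(params, num_accelerators=128, zero_stage=1) / GB

print(f"no ZeRO:        {baseline_gb:.2f} GB per accelerator")
print(f"ZeRO stage 1:   {zero1_gb:.2f} GB per accelerator (128 accelerators)")
```

Under these assumptions the model states alone take roughly 24 GB without ZeRO, which leaves little headroom for activations on a 32 GB accelerator, and roughly 6 GB with ZeRO stage 1 spread over 128 accelerators.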

The researchers began by building a managed compute cluster with AWS Batch. The cluster consisted of 16 dl1.24xlarge instances, each housing eight Habana Gaudi accelerators with 32 GB of memory per accelerator. A full mesh RoCE network handles communication between cards, delivering a total bi-directional interconnect bandwidth of 700 Gbps per instance. Each instance also has four AWS Elastic Fabric Adapters, providing a total of 400 Gbps of interconnect between nodes.

On the software front, the researchers used DeepSpeed’s ZeRO1 optimizations to pre-train the BERT 1.5B model, a popular architecture for LLMs, while tuning various parameters. The goal was to maximize training performance and cost-effectiveness, key considerations in any large-scale AI project. To ensure model convergence, they adjusted the hyperparameters and set the effective batch size per accelerator to 384: micro-batches of 16 per step, accumulated over 24 steps of gradient accumulation (16 × 24 = 384).
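The batch-size arithmetic above maps directly onto DeepSpeed's configuration keys. The following is a minimal sketch, not the researchers' actual configuration: it assumes all 16 instances (128 accelerators) take part in the run, and it omits everything else a real config would need (optimizer, precision, learning-rate schedule). The key names are DeepSpeed's own; note that `train_micro_batch_size_per_gpu` keeps the "gpu" wording even when the device is a Gaudi accelerator.

```python
import json

# Figures stated in the article.
MICRO_BATCH_PER_ACCELERATOR = 16
GRADIENT_ACCUMULATION_STEPS = 24
INSTANCES = 16
ACCELERATORS_PER_INSTANCE = 8

# Assumption: every accelerator in the cluster participates in data parallelism.
world_size = INSTANCES * ACCELERATORS_PER_INSTANCE
effective_batch_per_accelerator = MICRO_BATCH_PER_ACCELERATOR * GRADIENT_ACCUMULATION_STEPS
global_batch = effective_batch_per_accelerator * world_size

# DeepSpeed expects:
# train_batch_size == micro batch * accumulation steps * number of devices.
ds_config = {
    "train_micro_batch_size_per_gpu": MICRO_BATCH_PER_ACCELERATOR,
    "gradient_accumulation_steps": GRADIENT_ACCUMULATION_STEPS,
    "train_batch_size": global_batch,
    "zero_optimization": {"stage": 1},  # ZeRO stage 1: shard optimizer states
}

print(f"accelerators:                    {world_size}")
print(f"effective batch per accelerator: {effective_batch_per_accelerator}")
print(f"global batch size:               {global_batch}")
print(json.dumps(ds_config, indent=2))
```

The global batch size printed here is derived from the stated per-accelerator figures under the 128-accelerator assumption; the article itself reports only the per-accelerator value.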

Intel Habana Gaudi’s scaling efficiency stood out in this work, consistently staying above 90%. Even with eight instances and 64 accelerators simultaneously training a 340-million-parameter BERT model, scaling efficiency held up, supporting Gaudi’s position as a reliable option for training large language models.
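The article does not define scaling efficiency, but a common definition is measured throughput divided by the throughput that perfect linear scaling from a smaller baseline would give. The sketch below uses that definition. The throughput numbers are hypothetical placeholders chosen only to illustrate the calculation; they are not measurements from the AWS work.

```python
def scaling_efficiency(
    throughput: float,
    accelerators: int,
    base_throughput: float,
    base_accelerators: int,
) -> float:
    """Measured throughput relative to ideal linear scaling from a baseline run."""
    ideal = base_throughput * (accelerators / base_accelerators)
    return throughput / ideal


# HYPOTHETICAL numbers for illustration only (samples per second).
base_throughput = 1000.0    # one instance, 8 accelerators
scaled_throughput = 7360.0  # eight instances, 64 accelerators

efficiency = scaling_efficiency(
    scaled_throughput, accelerators=64,
    base_throughput=base_throughput, base_accelerators=8,
)
print(f"scaling efficiency: {efficiency:.1%}")
```

With this definition, an efficiency above 90% at 64 accelerators means the cluster delivers more than 7.2 times the throughput of a single eight-accelerator instance.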

The collaboration between Intel’s Habana and AWS represents a remarkable milestone in the pursuit of AI excellence. With Intel’s cutting-edge technology driving the advancements in LLM training, the possibilities for further breakthroughs in the field are boundless. As businesses and researchers increasingly rely on large language models to tackle complex problems, Intel’s Habana Gaudi and AWS’s expertise pave the way for a future of unprecedented AI innovation and success.

Conclusion:

The adoption of Intel’s Habana Gaudi accelerators by AWS for training large language models is a notable development in the market. The collaboration reflects growing demand for specialized hardware and software that address the challenges of LLM training, including memory limitations and scalability. By combining DeepSpeed with Intel Habana Gaudi, AWS demonstrates its commitment to providing efficient and cost-effective solutions for AI development. The partnership is expected to drive further innovation and open up new possibilities for businesses and researchers working with large language models.