- Intel releases OpenVINO 2024.1, a significant update to its open-source AI toolkit.

- Focuses on advancing Generative AI (GenAI) and Large Language Models (LLMs).

- Introduces Mixtral and URLNet models optimized for Intel Xeon CPUs.

- Optimizes Stable Diffusion 1.5, ChatGLM3-6B, and Qwen-7B for Intel Core Ultra processors with integrated Arc Graphics.

- Adds support for Falcon-7B-Instruct LLM and reduces compilation time for large language models.

- Improves LLM compression and performance on Intel Arc Graphics GPUs through oneDNN, INT4, and INT8 optimizations.

- Integrates the Neural Processing Unit (NPU) plug-in for Intel Core Ultra processors.

- Makes OpenVINO’s JavaScript API easier to access via the npm repository.

- Enables FP16 inference by default for convolutional neural networks on Arm processors.

Main AI News:

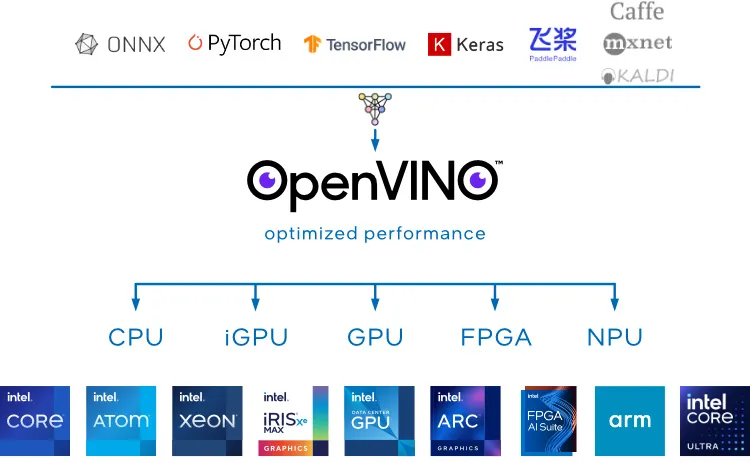

Intel engineers have released OpenVINO 2024.1, a significant update to the company’s open-source AI toolkit. The release focuses on Generative AI (GenAI) and Large Language Models (LLMs).

OpenVINO 2024.1 brings several generative AI enhancements. Mixtral and URLNet models are now optimized for Intel Xeon CPUs. Stable Diffusion 1.5, ChatGLM3-6B, and Qwen-7B are optimized for Intel Core Ultra processors with integrated Arc Graphics. The release also adds support for the Falcon-7B-Instruct LLM, broadening the set of language models the toolkit can run.

OpenVINO 2024.1 also shortens compilation times for large language models on Intel processors with Advanced Matrix Extensions (AMX) support. On Intel Arc Graphics GPUs, the toolkit improves LLM compression and performance through oneDNN, INT4, and INT8 optimizations. In addition, smaller GenAI models now have a reduced memory footprint on Intel Core Ultra processors.
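
To put the INT8 and INT4 compression in perspective, the following is a rough back-of-the-envelope sketch of weight storage at different precisions. It is not an OpenVINO API; the function name `weight_footprint_gb` and the 7-billion-parameter model size are illustrative assumptions, and the estimate ignores quantization scales, activations, and the KV cache.

```python
# Bits used to store a single weight at each precision.
BITS_PER_WEIGHT = {"FP16": 16, "INT8": 8, "INT4": 4}


def weight_footprint_gb(num_params: int, precision: str) -> float:
    """Approximate weight storage in gigabytes (10**9 bytes).

    Hypothetical helper for illustration only. It counts raw weight bits
    and ignores quantization metadata, activations, and the KV cache.
    """
    bits = BITS_PER_WEIGHT[precision]
    return num_params * bits / 8 / 1e9


if __name__ == "__main__":
    params = 7_000_000_000  # assumed size of a "7B" model
    for precision in BITS_PER_WEIGHT:
        size = weight_footprint_gb(params, precision)
        print(f"{precision}: {size:.1f} GB")
```

Under these assumptions, halving the bit width halves the weight storage, which is why lower-precision formats matter on memory-constrained client GPUs.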

The Neural Processing Unit (NPU) plug-in for Intel Core Ultra “Meteor Lake” processors is now available in the OpenVINO GitHub repository, in addition to the main OpenVINO package on PyPI. OpenVINO’s JavaScript API is also easier to access through the npm repository. On Arm processors, FP16 inference is now enabled by default for convolutional neural networks (CNNs), improving performance on that architecture.
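
With NPU, GPU, and CPU targets all in play, an application typically has to decide which device to run on. The sketch below shows one possible way to do that. The helper `pick_device` and its preference order are illustrative assumptions, not part of OpenVINO; the only OpenVINO call used is `Core().available_devices`, and the code falls back to a plain `["CPU"]` list when the package is not installed.

```python
from typing import Sequence


def pick_device(
    available: Sequence[str],
    preferred: Sequence[str] = ("NPU", "GPU", "CPU"),
) -> str:
    """Return the first available device matching the preference order.

    Hypothetical helper. Device names may carry an index suffix such as
    "GPU.0", so both exact names and "<NAME>.<index>" forms are matched.
    Falls back to "CPU" when nothing matches.
    """
    for wanted in preferred:
        for name in available:
            if name == wanted or name.startswith(wanted + "."):
                return name
    return "CPU"


try:
    import openvino as ov

    # List the devices OpenVINO can see on this machine.
    devices = list(ov.Core().available_devices)
except ImportError:
    # OpenVINO is not installed; assume a CPU-only environment.
    devices = ["CPU"]

print(pick_device(devices))
```

The returned name could then be passed as the device argument when compiling a model; whether the NPU should be preferred over the GPU depends on the workload, so the default order here is only a starting point.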

Taken together, OpenVINO 2024.1 reflects Intel’s continued investment in AI development tooling. The release gives developers broader model support, faster LLM compilation, and better use of Intel CPUs, GPUs, and NPUs, along with improved inference on Arm hardware.

Conclusion:

Intel’s release of OpenVINO 2024.1 marks a notable advance in AI development tools, particularly for Generative AI and Large Language Models. With optimizations tailored for Intel processors and broader support for other hardware architectures, developers can expect improved performance, efficiency, and accessibility. The update underscores Intel’s continued effort to help developers get more out of AI workloads on its hardware.