TL;DR:

- DynPoint, an algorithm developed by researchers at the University of Oxford, offers a new approach to view synthesis for monocular videos.

- Unlike methods that learn a latent canonical representation, DynPoint efficiently generates views for longer monocular videos by explicitly estimating consistent depth and scene flow.

- It builds a hierarchical neural point cloud from multiple reference frames, which enables fast and accurate synthesis of target frames.

- DynPoint was evaluated for speed and accuracy on several datasets, where it showed superior view-synthesis performance.

Main AI News:

Novel view synthesis (VS), the task of rendering a scene from viewpoints that were never captured, is drawing growing attention in computer vision. Its appeal lies in its potential for artificial reality and for improving a machine’s understanding of how visual and geometric elements interact across diverse scenes. Contemporary neural rendering techniques already produce photorealistic renderings of static scenes, but the constantly changing nature of real-world environments remains a formidable challenge for existing methods.

Recent research has turned to dynamic scenes, where one or more multilayer perceptrons (MLPs) are used to encode spatiotemporal scene information. One notable strategy builds a comprehensive latent representation that extends down to the level of individual frames. Although this approach produces visually impressive results, the limited memory capacity of MLPs and of similar learned representations restricts it to relatively short video sequences.
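
To make the implicit approach concrete, here is a minimal Python sketch (not the code of any specific published method) of an MLP that maps a space-time coordinate (x, y, z, t) to a color and a density. The names `positional_encoding` and `SpaceTimeMLP`, the frequency count, the layer sizes, and the random untrained weights are all illustrative assumptions. What it shows is the fixed parameter budget: however long the video is, every frame has to be stored in the same weights, which is the memory limitation described above.

```python
import math
import random


def positional_encoding(coords, num_freqs=4):
    """Map each coordinate c to sin(2^k * pi * c) and cos(2^k * pi * c) for k < num_freqs."""
    features = []
    for c in coords:
        for k in range(num_freqs):
            features.append(math.sin((2 ** k) * math.pi * c))
            features.append(math.cos((2 ** k) * math.pi * c))
    return features


class SpaceTimeMLP:
    """Hypothetical two-layer MLP: encoded (x, y, z, t) -> (rgb, density).

    Weights are random and untrained, and biases are omitted for brevity.
    """

    def __init__(self, num_freqs=4, hidden=32, seed=0):
        rng = random.Random(seed)
        in_dim = 4 * 2 * num_freqs  # 4 coordinates, sin + cos per frequency
        self.num_freqs = num_freqs
        self.w1 = [[rng.gauss(0.0, 0.1) for _ in range(in_dim)] for _ in range(hidden)]
        self.w2 = [[rng.gauss(0.0, 0.1) for _ in range(hidden)] for _ in range(4)]

    def num_parameters(self):
        # The whole dynamic scene must fit in this fixed number of weights.
        return sum(len(row) for row in self.w1) + sum(len(row) for row in self.w2)

    def __call__(self, x, y, z, t):
        encoded = positional_encoding((x, y, z, t), self.num_freqs)
        hidden = [max(0.0, sum(w * e for w, e in zip(row, encoded))) for row in self.w1]  # ReLU
        out = [sum(w * h for w, h in zip(row, hidden)) for row in self.w2]
        rgb = [1.0 / (1.0 + math.exp(-o)) for o in out[:3]]  # sigmoid keeps colors in (0, 1)
        density = max(0.0, out[3])  # densities must be non-negative
        return rgb, density


mlp = SpaceTimeMLP()
rgb, density = mlp(0.1, 0.2, 0.3, t=0.5)
print(rgb, density, mlp.num_parameters())
```

With these settings the model has 1,152 weights no matter how many frames it is asked to represent; a real model would add biases, more layers, and training, but the budget would still be fixed in advance.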

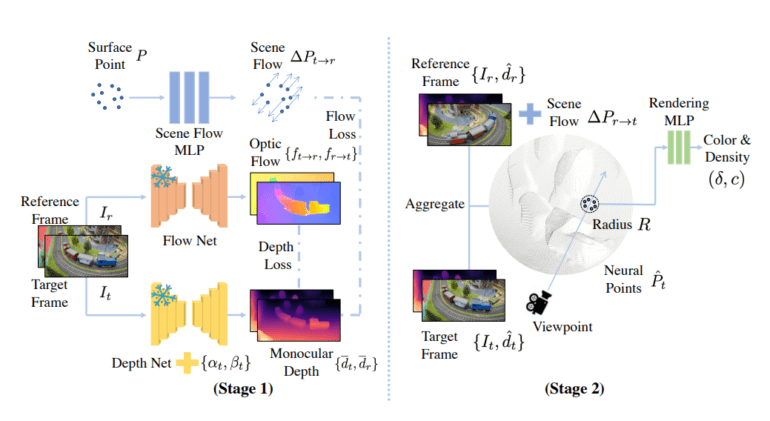

Researchers at the University of Oxford have proposed DynPoint to address this limitation. Rather than laboriously learning a latent canonical representation, DynPoint takes a more efficient route to generating views from longer monocular videos. Where earlier approaches encode scene information implicitly, DynPoint explicitly estimates consistent depth and scene flow for surface points. It then aggregates information from multiple reference frames into the target frame, building a hierarchical neural point cloud from which the views of the target frame are synthesized.
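
The fusion step can be sketched as follows, under clearly stated assumptions: each reference pixel already has an estimated depth, its frame has a camera-to-world pose, and a scene-flow vector carries the pixel's 3D point to the target frame's timestamp. The sketch also assumes a pinhole camera, builds the "hierarchy" by plain voxel-grid averaging at doubling voxel sizes, and uses colors as stand-ins for learned neural features. All function names and the data layout are hypothetical; this is not DynPoint's actual implementation.

```python
from collections import defaultdict


def unproject(u, v, depth, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) at the given depth into camera coordinates."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def camera_to_world(point, rotation, translation):
    """Apply a camera-to-world pose: rotation @ point + translation."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i] for i in range(3)
    )


def voxel_downsample(points, voxel_size):
    """Merge points sharing a voxel by averaging their positions and features."""
    buckets = defaultdict(list)
    for xyz, feat in points:
        key = tuple(int(c // voxel_size) for c in xyz)
        buckets[key].append((xyz, feat))
    merged = []
    for members in buckets.values():
        n = len(members)
        xyz = tuple(sum(m[0][i] for m in members) / n for i in range(3))
        feat = tuple(sum(m[1][i] for m in members) / n for i in range(len(members[0][1])))
        merged.append((xyz, feat))
    return merged


def build_point_hierarchy(reference_frames, intrinsics, base_voxel=0.05, levels=3):
    """Fuse reference pixels into one target-time point cloud, then coarsen it level by level.

    Assumed (hypothetical) frame layout:
      {"pose": (rotation, translation), "pixels": [((u, v), depth, flow, color), ...]}
    where `flow` is the estimated 3D scene flow from that frame's time to the target time.
    """
    fx, fy, cx, cy = intrinsics
    fused = []
    for frame in reference_frames:
        rotation, translation = frame["pose"]
        for (u, v), depth, flow, color in frame["pixels"]:
            world = camera_to_world(unproject(u, v, depth, fx, fy, cx, cy), rotation, translation)
            at_target = tuple(p + f for p, f in zip(world, flow))  # move along scene flow
            fused.append((at_target, color))  # colors stand in for learned features
    hierarchy = [fused]  # level 0: every fused point
    for level in range(1, levels):
        hierarchy.append(voxel_downsample(hierarchy[-1], base_voxel * 2 ** level))
    return hierarchy


identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
frame_a = {"pose": (identity, (0.0, 0.0, 0.0)),
           "pixels": [((0.0, 0.0), 1.0, (0.0, 0.0, 0.0), (1.0, 0.0, 0.0))]}
frame_b = {"pose": (identity, (0.0, 0.0, 0.0)),
           "pixels": [((0.0, 0.0), 1.0, (0.01, 0.0, 0.0), (0.0, 0.0, 1.0))]}
hierarchy = build_point_hierarchy([frame_a, frame_b], intrinsics=(1.0, 1.0, 0.0, 0.0))
print([len(level) for level in hierarchy])  # [2, 1, 1]
```

Level 0 keeps every fused point, while coarser levels merge nearby points from different reference frames; here the two nearly coincident points collapse into one whose color is the average of red and blue.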

The process relies on correspondences between the target and reference frames, which are derived from the estimated depth and scene flow. These correspondences are what allow DynPoint to synthesize target frames in monocular videos quickly: the researchers designed a representation that aggregates information from the reference frames into the target frame. DynPoint was evaluated on several datasets, including Nerfies, Nvidia, HyperNeRF, iPhone, and DAVIS. The reported results show it outperforming existing methods in both accuracy and speed, making it a significant advance in view synthesis.
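
The correspondence step described above can be sketched like this: back-project a target pixel using its estimated depth, move the resulting 3D point along its scene flow to the reference frame's timestamp, and project it into the reference camera. The pinhole model, the pose convention (camera-to-world rotation and translation), the data layout, and the plain average used to combine reference colors are assumptions for illustration. The article does not say how DynPoint weights the reference frames, and every name here is hypothetical.

```python
def camera_to_world(point, rotation, translation):
    """Camera-to-world pose: rotation @ point + translation."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i] for i in range(3)
    )


def world_to_camera(point, rotation, translation):
    """Inverse of a camera-to-world pose: rotation^T @ (point - translation)."""
    d = [point[i] - translation[i] for i in range(3)]
    return tuple(sum(rotation[j][i] * d[j] for j in range(3)) for i in range(3))


def project(point, fx, fy, cx, cy):
    """Pinhole projection; returns None for points at or behind the camera."""
    x, y, z = point
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def find_correspondence(u, v, depth, target_pose, ref_pose, flow_to_ref, intrinsics):
    """Map target pixel (u, v) with estimated depth to a sub-pixel location in a reference frame."""
    fx, fy, cx, cy = intrinsics
    cam = ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)  # back-project
    world = camera_to_world(cam, *target_pose)
    moved = tuple(p + f for p, f in zip(world, flow_to_ref))  # follow scene flow to ref time
    return project(world_to_camera(moved, *ref_pose), fx, fy, cx, cy)


def aggregate_color(u, v, depth, target_pose, references, intrinsics):
    """Average the colors that the target pixel maps to across reference frames.

    Each reference is a hypothetical dict {"pose", "flow", "image"}, where "flow" is the
    scene-flow vector of this surface point from the target time to that frame's time.
    """
    samples = []
    for ref in references:
        uv = find_correspondence(u, v, depth, target_pose, ref["pose"], ref["flow"], intrinsics)
        if uv is None:
            continue
        col, row = round(uv[0]), round(uv[1])  # nearest-pixel lookup, no interpolation
        image = ref["image"]
        if 0 <= row < len(image) and 0 <= col < len(image[0]):
            samples.append(image[row][col])
    if not samples:
        return None
    return tuple(sum(c[i] for c in samples) / len(samples) for i in range(3))


identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
pose = (identity, (0.0, 0.0, 0.0))
red = [[(1.0, 0.0, 0.0)] * 5 for _ in range(5)]
blue = [[(0.0, 0.0, 1.0)] * 5 for _ in range(5)]
references = [
    {"pose": pose, "flow": (0.0, 0.0, 0.0), "image": red},
    {"pose": pose, "flow": (0.01, 0.0, 0.0), "image": blue},
]
intrinsics = (100.0, 100.0, 2.0, 2.0)
print(aggregate_color(2, 2, 1.0, pose, references, intrinsics))
```

Because the scene flow is applied before projecting, a point that moved between the two timestamps still lands on the matching reference pixel, which a depth-only warp that assumes a static scene would miss.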

Conclusion:

DynPoint marks a notable advance in view synthesis, with potential implications across many industries. Its ability to generate novel views for monocular videos quickly and accurately could benefit applications ranging from entertainment to virtual reality, giving businesses new ways to engage their audiences and deliver immersive experiences. Businesses that adopt DynPoint may gain a competitive edge by building innovative solutions on this technology.