TL;DR:

- LASER is a novel approach by MIT and Microsoft that optimizes Large Language Models (LLMs).

- It selectively reduces higher-order components of weight matrices, preserving essential components and eliminating redundancies.

- Grounded in singular value decomposition, LASER enhances model refinement without additional training or parameters.

- LASER has shown remarkable gains in accuracy across various NLP reasoning benchmarks.

- It excels in handling less frequently represented data, enhancing the robustness and factuality of LLMs.

Main AI News:

In the ever-evolving landscape of artificial intelligence, Transformer-based Large Language Models (LLMs) have taken center stage, showcasing their prowess in natural language processing, computer vision, and reinforcement learning. These models, renowned for their substantial size and computational demands, have been at the forefront of AI development. Yet, a fundamental challenge persists in the quest to maximize their performance while curbing their already considerable size and computational requirements.

Within the realm of LLMs, the concept of over-parameterization looms large. It suggests that these models harbor more parameters than are strictly necessary for effective learning and functioning, resulting in inefficiencies. Reducing this inefficiency without compromising the models’ acquired capabilities remains a paramount concern. Traditional methods often resort to pruning, which removes parameters from a model to improve efficiency. However, indiscriminate pruning can force a trade-off between model size and effectiveness, potentially diminishing performance.

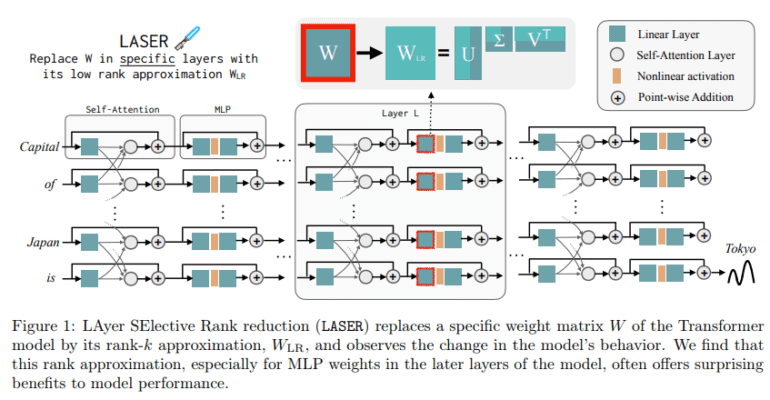

Enter LASER, an acronym for LAyer-SElective Rank reduction, a groundbreaking approach introduced by researchers from MIT and Microsoft. LASER reimagines the optimization of LLMs by precisely targeting higher-order components of weight matrices for reduction. In stark contrast to conventional methods, which uniformly trim parameters throughout the model, LASER homes in on specific layers within the Transformer model, notably focusing on the Multi-Layer Perceptron (MLP) and attention layers. This nuanced strategy enables the preservation of essential components while eliminating redundancies.

At the heart of LASER lies the application of singular value decomposition, a mathematical technique that identifies and subsequently reduces the higher-order components within weight matrices. By concentrating on specific matrices within the MLP and attention layers, LASER ensures that only the most pertinent and indispensable components are retained. This precision in reduction paves the way for more sophisticated model refinement, upholding its core capabilities while bolstering overall efficiency.
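To make the idea concrete, here is a minimal NumPy sketch of rank reduction applied to a single selected weight matrix. It is an illustration under stated assumptions, not the researchers' implementation: the helper `reduce_rank`, the `keep_fraction` parameter, the toy `model` dictionary, and the matrix names `mlp_in` and `attn_out` are all hypothetical. The sketch also assumes that "higher-order components" means the components with the smallest singular values, and it does not cover how the layer or the rank would be chosen in practice.

```python
import numpy as np


def reduce_rank(weight: np.ndarray, keep_fraction: float) -> np.ndarray:
    """Low-rank approximation of `weight` that keeps only the largest singular values.

    `keep_fraction` (hypothetical parameter) is the share of singular
    components to retain; the rest are dropped.
    """
    # Singular values in `s` come back sorted from largest to smallest.
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    k = max(1, int(round(keep_fraction * len(s))))
    # Rebuild the matrix from the top-k components only.
    return (u[:, :k] * s[:k]) @ vt[:k, :]


rng = np.random.default_rng(0)

# Toy stand-in for a Transformer: two layers, each with one MLP matrix and
# one attention matrix. The names are illustrative, not real model keys.
model = {
    (layer, name): rng.standard_normal((64, 32))
    for layer in range(2)
    for name in ("mlp_in", "attn_out")
}
untouched = {key: value.copy() for key, value in model.items()}

# Layer-selective step: only one matrix in one layer is modified.
target = (1, "mlp_in")
original = model[target].copy()
model[target] = reduce_rank(original, keep_fraction=0.25)

print("shape:", model[target].shape)
print("rank:", np.linalg.matrix_rank(model[target]))
```

The replaced matrix keeps its original shape, so it drops into the model without any retraining or new parameters, while every other matrix is left exactly as it was.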

The impact of LASER has been striking. The method has demonstrated significant improvements in accuracy across a spectrum of reasoning benchmarks in Natural Language Processing (NLP). Notably, these gains were attained without additional training or parameters, a noteworthy accomplishment given the intricate nature of these models. One of the most remarkable outcomes is LASER’s effectiveness in handling information that appears infrequently in the training data. This points to gains not only in the models’ accuracy but also in their robustness and factuality, expanding their applicability and overall effectiveness in AI-driven tasks.

Conclusion:

LASER’s innovative approach promises to reshape the LLM landscape, addressing the challenge of optimization while maintaining or even improving model performance. This breakthrough has the potential to fuel advancements in various AI-driven industries, making large language models more efficient and versatile, thus contributing to the continued growth of the AI market.