TL;DR:

- MaLA-500 is a large language model designed to cover 534 languages.

- On the SIB-200 dataset, it outperforms existing open LLMs of comparable or slightly larger size.

- The model is built by extending the vocabulary of LLaMA 2 and continuing its pretraining on the Glot500-c corpus.

- MaLA-500 addresses the limitations of LLMs in supporting low-resource languages and achieves state-of-the-art in-context learning outcomes.

- Vocabulary extension in particular lets the model read and generate text in languages that the original tokenizer handled poorly.

Main AI News:

Large Language Models (LLMs) sit at the center of recent progress in Artificial Intelligence (AI), thanks to their ability to generate and understand natural language. They struggle, however, with non-English languages, especially low-resource ones, and the problem is most acute for English-centric models. Multilingual LLMs exist, but the language coverage of most of them remains insufficient.

An important milestone was XLM-R, an auto-encoding model with 278 million parameters that supports 100 languages; follow-up work extended that coverage to 534 languages. That extension relied on Glot500-c, a corpus spanning 534 languages from 47 language families, which has proven valuable for low-resource languages. Other effective strategies for mitigating data scarcity include vocabulary extension and continued pretraining.

The success of these multilingual models has inspired further work. In a recent study, a team of researchers set out to overcome a limitation of earlier efforts, which had focused on small model sizes. Their goal was to extend LLMs to a much broader set of languages, so they investigated language adaptation strategies for models with up to 10 billion parameters.

Adapting LLMs to low-resource languages brings its own challenges, including data scarcity, domain-specific vocabulary, and linguistic variation. To tackle them, the research team proposed extending the vocabulary, continuing the pretraining of open LLMs, and using adaptation techniques such as LoRA, a low-rank reparameterization of the weight updates.
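
To make the idea of low-rank reparameterization concrete, here is a minimal pure-Python sketch of how LoRA is usually formulated: the pretrained weight matrix W stays frozen, and only two small matrices A and B are trained, so the effective weight becomes W + (alpha / r) * B A. The dimensions, the `alpha` value, and the helper names are illustrative assumptions, not the configuration used for MaLA-500.

```python
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_effective_weight(w, a, b, alpha):
    """Return W + (alpha / r) * B @ A, where r is the shared inner rank."""
    r = len(a)  # A has shape (r, d_in); B has shape (d_out, r)
    scale = alpha / r
    delta = matmul(b, a)
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]

def trainable_params(d_out, d_in, r):
    """Parameters trained by LoRA versus a full update of the same matrix."""
    return {"lora": r * (d_in + d_out), "full": d_in * d_out}

random.seed(0)
d_out, d_in, r = 6, 8, 2  # toy sizes, chosen only for illustration
w = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]  # frozen weight
a = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]   # trainable
b = [[0.0] * r for _ in range(d_out)]                                  # trainable, zero init

w_eff = lora_effective_weight(w, a, b, alpha=4)
print(w_eff == w)                       # B starts at zero, so nothing changes yet
print(trainable_params(4096, 4096, 8))  # adapter size vs. full matrix size
```

Because B starts at zero, the adapted model initially behaves exactly like the pretrained one, and for a 4096 x 4096 matrix with rank 8 the adapter trains well under 1% of the parameters a full update would touch.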

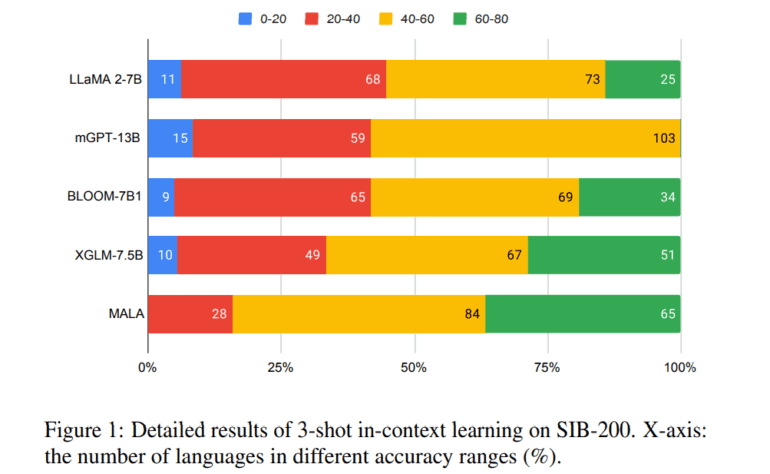

Researchers from LMU Munich, Munich Center for Machine Learning, University of Helsinki, Instituto Superior Técnico (Lisbon ELLIS Unit), Instituto de Telecomunicações, and Unbabel have developed MaLA-500, a large language model designed to cover 534 languages. It is trained by extending the vocabulary of LLaMA 2 and continuing its pretraining on Glot500-c. Evaluation on the SIB-200 dataset shows that MaLA-500 outperforms currently available open LLMs, including those of comparable or marginally larger size. It also achieves strong in-context learning results, meaning it can pick up a task from a handful of examples placed in the prompt without any further training, which underscores its adaptability across diverse languages.
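
In-context learning on a topic classification benchmark such as SIB-200 can be pictured as follows: a few labeled sentences are placed in the prompt, and the model is asked to label one more. The sketch below only builds such a prompt and picks the best-scoring label. The prompt template, the label subset, the sample sentences, and the `score_fn` stand-in are all hypothetical; in a real evaluation the scorer would be the model's likelihood for each candidate label.

```python
def build_few_shot_prompt(demos, query, labels):
    """Format labeled demonstrations plus one unlabeled query as a single prompt."""
    header = "Classify the topic of each sentence. Options: " + ", ".join(labels) + "."
    blocks = [f"Sentence: {text}\nTopic: {label}" for text, label in demos]
    blocks.append(f"Sentence: {query}\nTopic:")
    return header + "\n\n" + "\n\n".join(blocks)

def pick_label(prompt, labels, score_fn):
    """Return the label whose continuation the scorer rates highest."""
    return max(labels, key=lambda label: score_fn(prompt, label))

# Hypothetical label subset and made-up demonstrations, for illustration only.
labels = ["sports", "health", "travel"]
demos = [
    ("The team won the final match on penalties.", "sports"),
    ("The new ferry route opens to tourists in May.", "travel"),
]
query = "Doctors recommend the vaccine for adults over sixty."

prompt = build_few_shot_prompt(demos, query, labels)

# Placeholder scorer; a real one would query the language model.
def toy_score(prompt_text, label):
    return 1.0 if label == "health" else 0.0

print(prompt)
print(pick_label(prompt, labels, toy_score))
```

The number of demonstrations per prompt (the "shots") is a free parameter here; the function accepts any list of labeled pairs.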

MaLA-500 targets the weak support that current LLMs offer for low-resource languages. It achieves state-of-the-art in-context learning results by combining vocabulary extension with continued pretraining. Vocabulary extension plays a central role: adding tokens for previously unseen languages lets the model represent their text compactly instead of breaking it into long runs of characters or bytes.
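
A toy sketch of what vocabulary extension involves: new tokens are appended to the vocabulary, and the embedding table grows by one row per new token. Initializing the new rows to the mean of the existing embeddings is a common heuristic assumed here for illustration, not a detail confirmed for MaLA-500, and the greedy tokenizer is a simplified stand-in for a real subword tokenizer.

```python
def extend_vocab(vocab, embeddings, new_tokens):
    """Append unseen tokens and give each a mean-initialized embedding row."""
    dim = len(embeddings[0])
    mean = [sum(row[i] for row in embeddings) / len(embeddings) for i in range(dim)]
    vocab = dict(vocab)                             # copy, leave inputs untouched
    embeddings = [list(row) for row in embeddings]
    for tok in new_tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)                 # ids assumed contiguous from 0
            embeddings.append(list(mean))
    return vocab, embeddings

def greedy_tokenize(text, vocab):
    """Longest-match-first segmentation; unknown characters map to '<unk>'."""
    tokens, i = [], 0
    while i < len(text):
        for j in range(len(text), i, -1):
            if text[i:j] in vocab:
                tokens.append(text[i:j])
                i = j
                break
        else:
            tokens.append("<unk>")
            i += 1
    return tokens

# Toy character-level vocabulary with 2-dimensional embeddings.
vocab = {"<unk>": 0, "h": 1, "a": 2, "b": 3, "r": 4, "i": 5}
embeddings = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 0.0], [2.0, 4.0]]

before = greedy_tokenize("habari", vocab)           # a Swahili word
new_vocab, new_embeddings = extend_vocab(vocab, embeddings, ["habari"])
after = greedy_tokenize("habari", new_vocab)

print(len(before), len(after))
print(len(embeddings), len(new_embeddings))
```

With the extended vocabulary the word is covered by a single token instead of six, and the embedding table gains exactly one row, which continued pretraining would then adjust.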

Conclusion:

MaLA-500 marks a notable advance in multilingual language models. Its strong results and adaptability across hundreds of languages are promising for organizations that want to operate in more linguistically diverse settings. Its approach, particularly vocabulary extension, could improve communication and understanding across languages and open up markets that existing language technology has underserved.