TL;DR:

- TruEra has launched TruLens for LLM Applications, open source testing software for apps built on Large Language Models (LLMs).

- LLMs are becoming essential building blocks for future apps, but concerns have arisen about their use, including hallucinations, inaccuracies, toxicity, bias, and safety.

- TruLens addresses two major challenges in LLM app development: slow experiment iteration and inadequate testing methods.

- The software enables quick testing, iteration, and improvement of LLM-powered apps through feedback functions.

- TruLens evaluates truthfulness, relevance, harmful language, user sentiment, fairness, bias, and more.

- It helps developers enhance LLM efficacy, reduce toxicity, evaluate information retrieval, and flag biased language.

- TruLens also helps developers understand the cost of their app’s LLM API usage.

- LLM-based applications are growing rapidly, and TruLens helps developers build them, improve their performance, and bring them to market faster.

- TruLens fills a critical gap in the emerging LLMOps tech stack, supporting responsible LLM integration.

- It offers valuable tools for developers to optimize LLM performance and implementation.

Main AI News:

TruEra, a leading provider of software for ML model testing and monitoring throughout the MLOps lifecycle, has unveiled TruLens for LLM Applications, innovative open source testing software designed specifically for apps built on Large Language Models (LLMs) such as GPT. As LLMs rapidly emerge as a pivotal technology expected to underpin a wide range of applications in the near future, concerns about their use have grown. Prominent news stories have highlighted issues such as LLM hallucinations, inaccuracies, toxicity, bias, safety, and the potential for misuse.

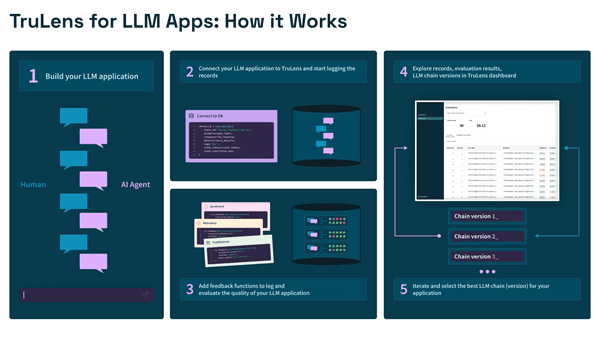

TruLens effectively addresses two major pain points currently faced in LLM app development:

- Slow and laborious experiment iteration and champion selection: Developing LLM applications involves a great deal of experimentation. Once the initial version of an app is built, developers test and review it manually, repeatedly adjusting prompts, hyperparameters, and models and retesting until they reach a satisfactory result. This process can be arduous, because it is often far from clear which version should be chosen as the winner, or “champion.”

- Inadequate, resource-intensive, and time-consuming testing methods: A key obstacle to fast iteration is the weakness of existing tools for testing LLM apps. Today, the most common testing method relies on direct human feedback. While this is a valuable starting point, it is slow, inconsistent, and difficult to scale. TruLens tackles this with feedback functions, which offer a programmatic way to evaluate LLM applications at scale, allowing teams to test, iterate on, and improve their LLM-powered apps quickly.
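Champion selection can be pictured with a minimal Python sketch. It assumes each app variant has already been scored by some feedback function on the same set of test prompts, with scores between 0 and 1. The variant names, the scores, and the `select_champion` helper are hypothetical and are not part of TruLens's API; ranking by mean score is only one possible selection rule.

```python
from statistics import mean


def select_champion(scores_by_variant: dict[str, list[float]]) -> tuple[str, float]:
    """Return the variant with the highest mean feedback score, plus that mean."""
    # Average each variant's per-prompt scores, skipping variants with no scores.
    averages = {name: mean(scores) for name, scores in scores_by_variant.items() if scores}
    if not averages:
        raise ValueError("no scored variants to compare")
    # Highest mean wins; ties are broken alphabetically so the result is deterministic.
    best = min(averages, key=lambda name: (-averages[name], name))
    return best, averages[best]


# Hypothetical scores for three variants evaluated on the same four test prompts.
variant_scores = {
    "prompt_v1_model_a": [0.6, 0.7, 0.5, 0.8],
    "prompt_v2_model_a": [0.8, 0.9, 0.7, 0.8],
    "prompt_v2_model_b": [0.9, 0.8, 0.7, 0.9],
}
champion, score = select_champion(variant_scores)
print(champion, round(score, 3))
```

Scoring every variant on a shared prompt set keeps the comparison fair; a team would likely also look at score spread and at several feedback functions before declaring a winner.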

TruLens evaluates LLM applications through feedback functions. Anupam Datta, Co-founder, President, and Chief Scientist at TruEra, explained the approach: “TruLens feedback functions score the output of an LLM application by analyzing generated text from an LLM-powered app and metadata. By modeling this relationship, we can then programmatically apply it to scale up model evaluation.” Because each feedback function runs as code rather than as a manual review, it can score large numbers of app responses consistently.
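To illustrate the idea of a feedback function applied at scale, the following Python sketch treats a feedback function as any callable that maps an app record (question, answer, metadata) to a score between 0 and 1, then averages each function's scores over a batch. The `Record` type, the `keyword_relevance` heuristic, and `evaluate` are hypothetical and are not TruLens's API; the word-overlap heuristic is a deliberately crude stand-in for a real relevance model.

```python
import re
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable


@dataclass
class Record:
    """One logged interaction with a (hypothetical) LLM app."""
    question: str
    answer: str
    metadata: dict = field(default_factory=dict)  # e.g. model name; unused by the toy scorer


# A feedback function maps one record to a score in [0, 1].
FeedbackFn = Callable[[Record], float]

STOPWORDS = {"the", "a", "an", "is", "of", "to", "in", "what", "how", "does", "do", "and"}


def _content_words(text: str) -> set[str]:
    # Lowercase, split on non-alphanumerics, and drop common filler words.
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def keyword_relevance(record: Record) -> float:
    """Toy relevance proxy: share of the question's content words echoed in the answer."""
    q_words = _content_words(record.question)
    if not q_words:
        return 0.0
    return len(q_words & _content_words(record.answer)) / len(q_words)


def evaluate(records: list[Record], feedbacks: dict[str, FeedbackFn]) -> dict[str, float]:
    """Apply every feedback function to every record and average the scores per function."""
    return {name: mean(fn(r) for r in records) for name, fn in feedbacks.items()}


r1 = Record("What is the capital of France?", "Paris is the capital of France.")
r2 = Record("How does photosynthesis work?", "I enjoy hiking on weekends.")
scores = evaluate([r1, r2], {"qa_relevance": keyword_relevance})
print(scores)
```

Keeping feedback functions as plain callables makes it easy to add new ones to the `feedbacks` dictionary without changing the evaluation loop.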

The TruLens platform for LLMs enables AI developers to:

- Enhance the efficacy of LLM usage within their applications.

- Mitigate the potential social harm or “toxicity” associated with LLM results.

- Evaluate the performance of information retrieval capabilities.

- Identify and address biased language in application responses.

- Gain insights into the financial cost of their application’s LLM API usage.
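Estimating LLM API cost is mostly arithmetic over token counts. The sketch below assumes a provider that bills prompt and completion tokens separately at a per-1,000-token rate; the `Pricing` type, the prices, and the call log are hypothetical, and this is not a description of how TruLens itself computes cost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    # Hypothetical USD prices per 1,000 tokens; check your provider's current rate card.
    prompt_per_1k: float
    completion_per_1k: float


def call_cost(prompt_tokens: int, completion_tokens: int, pricing: Pricing) -> float:
    """Cost of one API call: each token type is billed at its own per-1K rate."""
    return (prompt_tokens / 1000) * pricing.prompt_per_1k + (
        completion_tokens / 1000
    ) * pricing.completion_per_1k


def total_cost(calls: list[tuple[int, int]], pricing: Pricing) -> float:
    """Sum the cost of (prompt_tokens, completion_tokens) pairs from an app's call log."""
    return sum(call_cost(p, c, pricing) for p, c in calls)


pricing = Pricing(prompt_per_1k=0.0015, completion_per_1k=0.002)
calls = [(1200, 300), (800, 500), (2000, 250)]  # hypothetical token counts per call
print(f"${total_cost(calls, pricing):.4f}")
```

Floats are adequate for a rough estimate; for billing-grade totals, `decimal.Decimal` would avoid rounding drift.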

TruLens also offers a wide range of feedback functions that evaluate truthfulness, question-answering relevance, harmful or toxic language, user sentiment, language mismatch, response verbosity, fairness, and bias, and it supports custom feedback functions tailored to a user’s specific requirements.
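As an example of what a custom feedback function might look like, the sketch below scores response verbosity. The thresholds and the linear fall-off are assumptions chosen for illustration, and `verbosity_score` is a hypothetical helper, not a built-in TruLens feedback function.

```python
def verbosity_score(response: str, target_words: int = 50, max_words: int = 150) -> float:
    """Hypothetical verbosity feedback: 1.0 up to target_words, falling linearly to 0.0 at max_words."""
    if not 0 < target_words < max_words:
        raise ValueError("need 0 < target_words < max_words")
    n_words = len(response.split())  # crude whitespace word count
    if n_words <= target_words:
        return 1.0
    if n_words >= max_words:
        return 0.0
    return 1.0 - (n_words - target_words) / (max_words - target_words)


print(verbosity_score("A short, direct answer."))
print(verbosity_score("word " * 100))
```

A linear penalty is just one design choice; a step function or a penalty tuned to the app's use case would work the same way as long as the score stays between 0 and 1.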

“LLM-based applications are experiencing exponential growth and will only become more prevalent,” remarked Datta. “TruLens empowers developers to build high-performing applications and expedite their time to market. By validating the effectiveness of the LLM for their application’s use case and mitigating the potentially harmful effects associated with LLMs, TruLens fills a critical void within the emerging LLMOps tech stack.”

TruEra’s TruLens for LLM Applications aims to set a new standard in LLM app development, giving developers tools and methods to get the best performance from LLMs and implement them responsibly in their applications. By enabling rapid iteration, precise evaluation, and effective monitoring, TruLens paves the way for integrating LLMs successfully into the future of business and technology.

Conclusion:

The launch of TruLens for LLM Applications by TruEra marks a significant development in the market. Open source testing software designed specifically for apps built on Large Language Models (LLMs) addresses key concerns about their use and paves the way for their wider adoption. By enabling faster experiment iteration, better testing methods, and comprehensive evaluation of LLM-powered apps, TruLens helps developers improve the efficacy of their LLM usage, mitigate risks such as toxicity and bias, and optimize their applications’ performance. This advancement in LLM app development contributes to the growth and maturation of the market, fostering responsible integration of LLMs and speeding the delivery of high-performing applications that meet evolving business needs.