TL;DR:

- Kolena, a startup specializing in AI model testing tools, has raised $15 million in funding.

- Lobby Capital led the funding round, with participation from SignalFire and Bloomberg Beta.

- The round brings Kolena’s total funding to $21 million.

- The funds will be used to expand the research team, collaborate with regulators, and grow sales and marketing efforts.

- Kolena’s focus is on enhancing trust in AI technology and offering comprehensive model quality evaluation.

- The platform enables customized testing, risk management, and privacy-focused evaluation of AI models.

- The company plans to target “mission-critical” partners and introduce team bundles for mid-sized organizations and startups in 2024.

Main AI News:

Kolena, a startup that builds tools for testing, benchmarking, and validating the performance of AI models, has raised $15 million. The round was led by Lobby Capital with participation from SignalFire and Bloomberg Beta, bringing Kolena’s total funding to $21 million. In an email interview with TechCrunch, co-founder and CEO Mohamed Elgendy said the new capital will go toward expanding the company’s research team, working with regulatory authorities, and growing Kolena’s sales and marketing efforts.

Elgendy stressed the importance of building trust in AI: “The use cases for AI are enormous, but AI lacks trust from both builders and the public. This technology must be rolled out in a way that makes digital experiences better, not worse. The genie isn’t going back in the bottle, but as an industry, we can make sure we make the right wishes.”

Kolena was founded in 2021 by Elgendy, Andrew Shi, and Gordon Hart, AI professionals who previously worked at companies including Amazon, Palantir, Rakuten, and Synapse. Its core offering, the “model quality framework,” provides unit testing and end-to-end testing for models in a customizable, enterprise-friendly package.

Elgendy explained the core philosophy behind Kolena: “First and foremost, we wanted to provide a new framework for model quality — not just a tool that simplifies current approaches. Kolena makes it possible to continuously run scenario-level or unit tests. It also provides end-to-end testing of the entire AI and machine learning product, not just sub-components.”

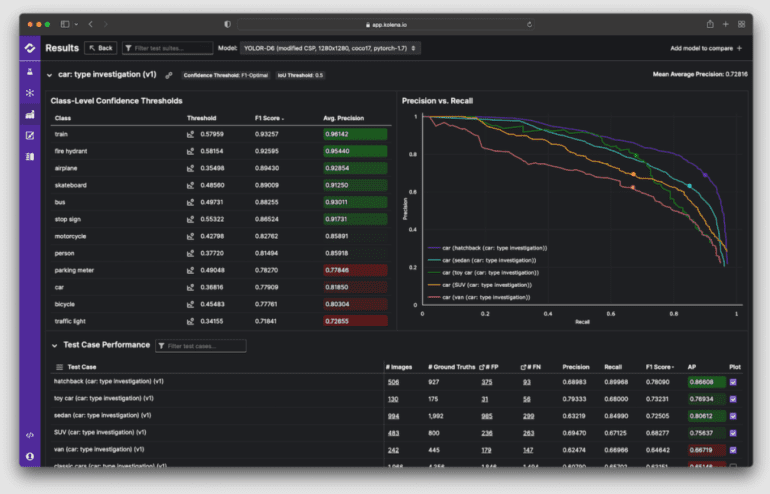

Kolena’s platform helps identify gaps in the coverage of an AI model’s test data and includes risk management features for monitoring AI systems in deployment. Through Kolena’s UI, users can create test cases, examine a model’s performance, and investigate possible causes of underperformance, while comparing the model side by side with others.

Elgendy highlighted the significance of nuanced performance evaluation, stating, “With Kolena, teams can manage and run tests for specific scenarios that the AI product will have to deal with, rather than applying a blanket ‘aggregate’ metric like an accuracy score, which can obscure the details of a model’s performance.”
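To illustrate Elgendy’s point about aggregate metrics, here is a minimal Python sketch, assuming a toy image-classification setup. The scenario labels ("daylight", "night"), the evaluation records, the 0.8 pass threshold, and the function names are all hypothetical; this is not Kolena’s API or its evaluation method. It only shows how a respectable overall accuracy can hide a scenario where the model mostly fails.

```python
from collections import defaultdict

# Hypothetical evaluation records: (scenario, ground_truth, prediction).
# Toy data for illustration only, not real model results.
records = (
    [("daylight", "car", "car")] * 16           # 16 daylight images, all correct
    + [("night", "car", "car")]                 # 1 night image correct
    + [("night", "car", "pedestrian")] * 3      # 3 night images wrong
)

def aggregate_accuracy(rows):
    """Fraction of all predictions that match the ground truth (one blanket number)."""
    return sum(gt == pred for _, gt, pred in rows) / len(rows)

def per_scenario_accuracy(rows):
    """Accuracy computed separately for each scenario label."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for scenario, gt, pred in rows:
        totals[scenario] += 1
        correct[scenario] += gt == pred  # bool counts as 0 or 1
    return {s: correct[s] / totals[s] for s in totals}

def failing_scenarios(rows, threshold):
    """Scenario-level 'unit tests': list scenarios whose accuracy is below the threshold."""
    return sorted(s for s, acc in per_scenario_accuracy(rows).items() if acc < threshold)

print(f"aggregate accuracy: {aggregate_accuracy(records):.2f}")  # aggregate accuracy: 0.85
print(per_scenario_accuracy(records))                         # {'daylight': 1.0, 'night': 0.25}
print(failing_scenarios(records, threshold=0.8))              # ['night']
```

In this toy example the model scores 85% overall yet gets only one of four nighttime images right. A per-scenario check with a pass threshold surfaces that gap, while the single aggregate number does not.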

Kolena’s tools target a real pain point for the data science community. AI engineers often spend just 20% of their time on model analysis and development, with most of their effort going to sourcing and cleaning data. In addition, many AI models never progress from the pilot stage to production because teams struggle to make them accurate and performant enough.

Many companies are building tools to test, monitor, and validate AI models, but Kolena distinguishes itself by giving customers “full control” over data types, evaluation logic, and other key components of AI model testing. It also addresses privacy concerns: customers do not need to upload their data or models, and the platform retains only model test results for future benchmarking, which can be deleted on request.

Elgendy underscored Kolena’s commitment to comprehensive model evaluation: “Minimizing risk from an AI and machine learning system requires rigorous testing before deployment, yet enterprises don’t have strong tooling or processes around model validation. Kolena focuses on comprehensive and thorough model evaluation.”

Kolena, which is based in San Francisco and has 28 employees, is selective about its partners, choosing to work with “mission-critical” companies. The company plans to launch team bundles for mid-sized organizations and early-stage AI startups in Q2 2024.

Conclusion:

Kolena’s funding round highlights the growing demand for trustworthy AI models. The $15 million will help Kolena further develop its model quality framework and privacy-focused approach, potentially addressing the industry’s need for rigorous model validation. As AI spreads across more sectors, Kolena’s work may help build greater confidence in AI technologies.