TL;DR:

- KOSMOS-G, a cutting-edge AI model, pioneers high-quality zero-shot image creation from generalized vision-language input.

- Developed by a collaboration between Microsoft Research, NYU, and the University of Waterloo.

- It surpasses existing models, generating images in diverse scenarios based on textual descriptions.

- KOSMOS-G’s training involves three stages, including pre-training on multimodal data and fine-tuning with CLIP supervision.

- It can replace CLIP in image generation systems, opening up new horizons for innovative applications.

- Offers multi-entity Vision-Language-to-Image synthesis capabilities.

Main AI News:

In a groundbreaking stride at the intersection of artificial intelligence and visual creativity, a consortium of leading researchers hailing from Microsoft Research, New York University, and the University of Waterloo has unveiled KOSMOS-G. This pioneering AI model introduces an unprecedented era of high-fidelity zero-shot image generation from generalized vision-language input, harnessing the potent capabilities of Multimodal Large Language Models (LLMs).

In recent times, significant advancements have been made in the realm of synthesizing images from textual descriptions and amalgamating text with visuals to birth novel compositions. Yet, an uncharted frontier has emerged – the arena of image creation stemming from generalized vision-language inputs. Imagine crafting an image from a comprehensive scene depiction encompassing myriad objects and individuals. KOSMOS-G steps in as the vanguard, equipped to surmount this challenge with the prowess of Multimodal LLMs.

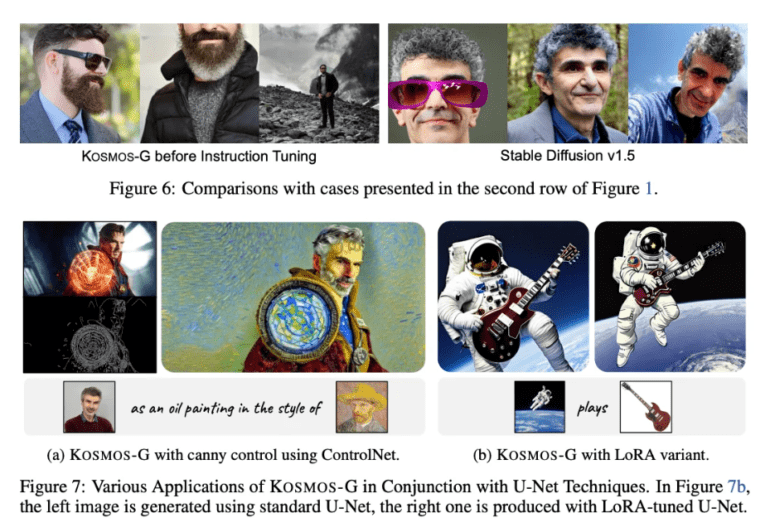

KOSMOS-G possesses the remarkable ability to craft intricate images from intricate combinations of textual narratives and diverse visual elements, even in scenarios where it has never encountered analogous instances before. It heralds a new era as the premier model capable of image generation in contexts replete with diverse entities, responding faithfully to textual descriptions. Moreover, KOSMOS-G’s emergence ushers in an era where it can seamlessly replace CLIP, thereby unlocking a plethora of novel possibilities that can be harnessed in conjunction with techniques such as ControlNet and LoRA, fostering innovative applications.

The architecture of KOSMOS-G is underpinned by a clever approach. It commences by training a Multimodal LLM, adept at comprehending both text and images and subsequently aligns it with the CLIP text encoder, renowned for its text comprehension prowess. When furnished with a caption containing textual descriptions and segmented images, KOSMOS-G is primed to fabricate images that faithfully correspond to the narrative and adhere to the provided instructions. It achieves this feat by leveraging a pre-trained image decoder, effectively translating its acquired knowledge from visual data into the creation of accurate depictions across diverse scenarios.

KOSMOS-G’s proficiency extends to generating images based on prescribed instructions and input data. This multifaceted model undergoes three distinct stages of training to hone its capabilities. In the initial stage, the model is pre-trained on a vast corpus of multimodal data. Subsequently, in the second stage, an AlignerNet is trained to synchronize KOSMOS-G’s output space with the input space of U-Net, guided by CLIP supervision. The third and final stage sees KOSMOS-G undergo fine-tuning through a compositional generation task employing meticulously curated data. During the initial stage, only the Multimodal LLM is trained. In the second stage, the AlignerNet is trained while keeping the Multimodal LLM static. The third stage involves the joint training of both AlignerNet and Multimodal LLM, with the image decoder maintaining a fixed state throughout all phases.

KOSMOS-G distinguishes itself as an adept zero-shot image generator across diverse contexts. It possesses the capacity to produce coherent, aesthetically pleasing images that can be tailored to diverse styles, contexts, modifications, and additional detailing. This remarkable capability positions KOSMOS-G as the pioneer in achieving multi-entity Vision-Language-to-Image (VL2I) synthesis in a zero-shot setting.

By seamlessly stepping into the shoes of CLIP in image generation systems, KOSMOS-G ushers in a realm of exciting possibilities that were previously deemed unattainable. Building upon the foundations laid by CLIP, KOSMOS-G propels the transformation from image generation grounded solely in text to an era where text and visual information harmoniously coalesce, birthing an array of opportunities for innovative applications in the realms of artificial intelligence and visual creativity.

Conclusion:

KOSMOS-G’s advent marks a pivotal moment in the field of artificial intelligence and image generation. Its ability to create images from complex textual descriptions and diverse visual elements, combined with its prowess in zero-shot image generation, offers exciting prospects for a market hungry for innovative applications. By seamlessly integrating text and visual data, KOSMOS-G opens up new avenues for creativity and practicality, positioning itself as a powerful tool for businesses seeking to leverage the potential of AI in visual content creation.