TL;DR:

- Lamini AI is a groundbreaking tool that empowers developers to train high-performing Large Language Models (LLMs) with ease.

- The Lamini library offers fine-tuning and RLHF capabilities, allowing developers to optimize LLM performance quickly.

- The hosted data generator simplifies the creation of training data for instruction-following LLMs.

- Lamini AI provides a free and open-source LLM that can accurately follow instructions with minimal programming effort.

- Developers can leverage Lamini’s capabilities to adjust LLMs with industry-specific knowledge and optimize prompt tuning.

- The RLHF process is streamlined, eliminating the need for extensive machine learning and human labeling resources.

- Lamini AI enables seamless deployment of trained LLMs in the cloud, simplifying integration into applications.

Main AI News:

In the world of machine learning, training a language model can be a daunting task, and it is often hard to understand why a fine-tuned model falls short. Iteration cycles for fine-tuning, even on small datasets, can stretch over months, making the process time-consuming and resource-intensive. However, there is a game-changing solution on the horizon: Lamini AI.

Lamini AI introduces a groundbreaking approach to language model training. Unlike traditional methods, Lamini AI enables developers to train high-performing Large Language Models (LLMs) with remarkable ease and efficiency. With just a few lines of code from the Lamini library, any developer, regardless of machine learning expertise, can train LLMs that rival ChatGPT, even on massive datasets.

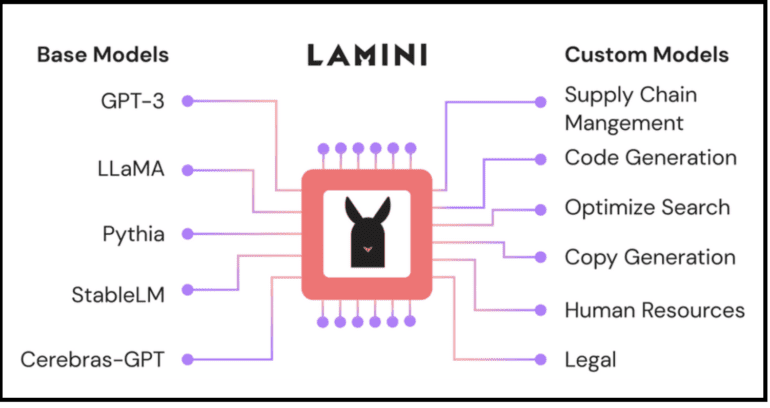

Released by Lamini.ai, this library goes beyond the capabilities of existing programming tools. It incorporates advanced techniques such as Reinforcement Learning from Human Feedback (RLHF) and hallucination suppression. Whether you use OpenAI’s models or open-source alternatives on Hugging Face, Lamini lets you compare and run different base models with a single line of code.
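To illustrate what switching base models with one line can look like, here is a minimal sketch in plain Python. It is not the Lamini API: the model names, the stub functions, and the `run` and `compare` helpers are all hypothetical stand-ins, and the "models" are ordinary functions rather than real LLM calls. The only point is the pattern, where the base model is chosen by a name, so comparing models means changing a single string.

```python
from typing import Callable, Dict, List


# Hypothetical stand-in "models": plain functions from prompt to text.
# A real setup would call a hosted or local LLM here instead.
def echo_model(prompt: str) -> str:
    return f"echo: {prompt}"


def shout_model(prompt: str) -> str:
    return prompt.upper()


# Registry mapping a (hypothetical) base-model name to its implementation.
BASE_MODELS: Dict[str, Callable[[str], str]] = {
    "echo-base": echo_model,
    "shout-base": shout_model,
}


def run(model_name: str, prompt: str) -> str:
    """Run one prompt against the base model selected by name."""
    try:
        model = BASE_MODELS[model_name]
    except KeyError:
        raise ValueError(f"unknown base model: {model_name!r}") from None
    return model(prompt)


def compare(prompt: str, model_names: List[str]) -> Dict[str, str]:
    """Run the same prompt against several base models and collect the outputs."""
    return {name: run(name, prompt) for name in model_names}


results = compare("Explain RLHF in one sentence.", ["echo-base", "shout-base"])
for name, output in results.items():
    print(f"{name}: {output}")
```

Because every model sits behind the same prompt-to-text interface, adding another candidate only requires one more registry entry.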

So, how can you harness the power of Lamini AI to develop your own high-performing LLM? Let’s dive into the steps:

- The Lamini library: Your gateway to fine-tuning and RLHF. Lamini AI offers a comprehensive library for fine-tuning on prompts and text outputs. With it, you can fine-tune your LLM and apply RLHF techniques, taking your models to new heights of performance.

- The hosted data generator: Unlocking commercial-grade data creation. Lamini introduces what it describes as the first hosted data generator explicitly designed for commercial usage. This tool enables you to create the data required to train instruction-following LLMs. You no longer need to rely on extensive programming expertise, because Lamini makes data generation simple and accessible.

- Free and open-source: Effortlessly follow instructions. Lamini AI provides a free and open-source LLM that excels at following instructions and was built with the library and data generator described above. Even with minimal programming effort, you can leverage Lamini’s capabilities to create LLMs that understand and respond to instructions accurately.

- Tailor your LLM with industry-specific knowledge. While base language models like ChatGPT are proficient in understanding English, they may fall short when it comes to industry-specific jargon and standards. Lamini AI offers a solution by allowing you to adjust your LLM’s prompt and even switch between different models using its intuitive APIs. Seamlessly tune your LLM’s performance to meet your industry’s unique requirements.

- Generate massive input-output data effortlessly. To train a powerful LLM, you’ll need a substantial amount of input-output data. With Lamini’s data generation capabilities, you can easily produce vast datasets that showcase how your LLM should react to various inputs, be it English or JSON. Even from minimal input, the Lamini library can generate up to 50,000 data points, making data creation more efficient than ever before.

- Leverage your extensive data for model adjustment. To fine-tune your LLM further, Lamini AI enables you to adjust your starting model using your own extensive dataset. In addition to the data generator, Lamini also provides a pre-trained LLM that has been meticulously tuned using synthetic data. This combination of techniques ensures optimal performance for your LLM.

- Reinforcement Learning from Human Feedback (RLHF). To further enhance your LLM’s capabilities, Lamini simplifies the RLHF process. You no longer need a large team of machine learning (ML) and human labeling experts to run RLHF. With Lamini’s streamlined approach, putting your fine-tuned model through RLHF becomes a hassle-free endeavor.

- Seamlessly deploy in the cloud. Once your LLM is trained and ready, Lamini AI makes it effortless to deploy your model in the cloud. By invoking the API’s endpoint in your application, you can seamlessly integrate your LLM into your workflow and start benefiting from its powerful language processing capabilities.
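To make the data-generation step in the list above more concrete, here is a minimal sketch of turning a handful of seed examples into a larger set of input-output pairs and serializing them as JSON Lines. It does not use the Lamini library or its hosted generator: the seed pairs, the rephrasing templates, and the `expand_seeds` and `to_jsonl` helpers are hypothetical, and simple string templates stand in for the LLM-backed generation a real tool would perform.

```python
import itertools
import json

# Hypothetical seed pairs; a real project would use examples from its own domain.
# The second output is JSON, since outputs may be English text or JSON.
SEED_PAIRS = [
    {
        "input": "Summarize the return policy.",
        "output": "Items can be returned within 30 days.",
    },
    {
        "input": "List the supported file formats.",
        "output": json.dumps({"formats": ["csv", "json"]}),
    },
]

# Hypothetical rephrasing templates standing in for an LLM-backed generator.
TEMPLATES = [
    "{instruction}",
    "Please answer briefly: {instruction}",
    "As a support agent, respond to this request: {instruction}",
]


def expand_seeds(seeds, templates, limit):
    """Return up to `limit` input-output pairs by combining seeds with templates."""
    combos = itertools.product(seeds, templates)
    return [
        {
            "input": template.format(instruction=seed["input"]),
            "output": seed["output"],
        }
        for seed, template in itertools.islice(combos, limit)
    ]


def to_jsonl(pairs):
    """Serialize pairs as JSON Lines, one training example per line."""
    return "\n".join(json.dumps(pair) for pair in pairs)


dataset = expand_seeds(SEED_PAIRS, TEMPLATES, limit=5)
jsonl_text = to_jsonl(dataset)
print(jsonl_text)
```

The `limit` argument plays the role of a cap on dataset size. The resulting JSON Lines text is one common interchange format for fine-tuning data, though the format a given training tool expects may differ.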

The Lamini AI team is excited to revolutionize the training process for engineering teams, providing them with a simplified yet highly effective way to develop high-performing LLMs. By making iteration cycles faster and more efficient, Lamini AI empowers developers to construct sophisticated language models without being limited to prompt tinkering. With Lamini AI, the future of language model training is within reach, and the possibilities are boundless.

Conclusion:

Lamini AI’s revolutionary approach to language model training has significant implications for the market. It empowers developers of all skill levels to create high-performing LLMs that rival industry-leading models. The simplified and efficient training process, combined with the ability to fine-tune LLMs with industry-specific knowledge, opens up new possibilities for various sectors. With Lamini AI, the market can expect accelerated development of sophisticated language models, leading to improved language understanding, instruction-following capabilities, and enhanced user experiences in a wide range of applications.