- Overconfidence in AI testing can lead to accidents and loss of trust.

- Tesla’s overreliance on risk-tolerant users resulted in AI mistakes and fatal accidents.

- Publicized errors discouraged risk-averse users from engaging with the autopilot feature, limiting AI learning opportunities.

- Healthcare AI testing shows similar patterns, with cautious doctors avoiding tools after hearing about mistakes.

- Involving risk-averse professionals in early AI testing can provide better data and feedback for improvement.

- Transparent AI systems are crucial to gaining user trust, especially in sectors like healthcare.

Main AI News:

Relying solely on optimistic users to test AI tools can lead to significant setbacks—a mistake Tesla has made that the health sector should avoid.

Tesla has been testing self-driving cars for years, with its autopilot AI improving with each update. The system learns from user input, primarily from drivers actively using the autopilot feature. However, some of these users are overconfident risk-takers, which has led to dangerous outcomes.

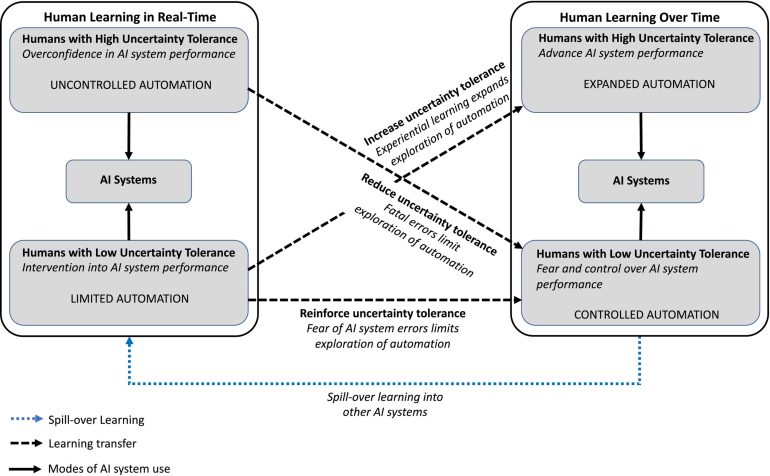

In some cases, drivers have trusted the autopilot function so much that they’ve fallen asleep at the wheel, resulting in fatal accidents. One incident involved a car crashing into a parked fire engine. Research by NTNU, published in Information and Organization, shows that these overconfident users have undermined Tesla’s progress. Their publicized accidents caused risk-averse drivers to lose faith in the system, reducing its use and limiting the AI’s learning opportunities.

This research offers valuable lessons for other industries, especially healthcare, where AI has the potential to revolutionize patient care. Typically, risk-tolerant individuals are chosen to test new technologies because they’re more willing to take chances. However, their mistakes can erode the confidence of cautious users, making it harder for AI tools to gain traction.

In healthcare, researchers from NTNU and SINTEF have observed similar behaviors among doctors testing AI models. Risk-averse physicians often avoid using these tools after hearing about errors, reinforcing their initial skepticism. This distrust is heightened by the ‘black box’ nature of AI, which reaches decisions without providing clear explanations.

To avoid the pitfalls experienced by Tesla, the healthcare sector should involve risk-averse doctors in the early stages of AI testing. Encouraging these professionals to experiment with AI in a controlled manner—without directly relying on it for patient care—can provide the system with valuable data to improve. Just as cautious Tesla drivers corrected autopilot errors, helping the AI learn, healthcare professionals can offer crucial feedback to refine medical AI tools.

If AI tools are to succeed in healthcare, companies must prioritize feedback from cautious users. Their input can help create smarter, more reliable systems. However, if early mistakes alienate them, the sector risks losing the full benefits AI could offer.

Conclusion:

Companies bringing AI to critical sectors such as healthcare must prioritize user trust and data accuracy. The Tesla case illustrates that overreliance on risk-tolerant users can cause more harm than good, limiting broader adoption and valuable feedback. To succeed, companies need to engage cautious, risk-averse users early in the testing process, ensuring that AI systems are refined with careful intervention and transparent explanations. By building AI tools that incorporate diverse feedback, businesses can create more reliable systems, foster confidence, and expand market opportunities.