- Research proposes leveraging Large Language Models (LLMs) as proxies for human cognitive modeling.

- LLMs exhibit behaviors akin to humans in decision-making processes.

- Challenges include the far larger volume of data LLMs are trained on and the unclear origin of their human-like behaviors.

- Solution involves pretraining LLMs on synthetic datasets for improved human behavior prediction.

- Joint effort by Princeton University and the University of Warwick explores computationally equivalent tasks for LLMs and rational agents.

- Approach enhances LLMs’ utility in decision-making scenarios, outperforming traditional cognitive models.

Main AI News:

Recent research on Large Language Models (LLMs) has revealed a striking parallel between LLM behavior and human cognition. Both make decisions that often depart from classical rationality, showing traits such as risk aversion and loss aversion. LLMs also exhibit human-like biases and errors, particularly in probability judgments and arithmetic operations. These resemblances suggest that LLMs could serve as proxies for studying human cognition. Two obstacles remain, however: LLMs are trained on vastly more data than any human encounters, and the origin of their human-like behaviors is unclear.

Whether LLMs are suitable models of human cognition is contested for several reasons. LLMs are trained on far larger datasets than humans ever encounter, they may have seen the test scenarios during training, and their human-like behaviors may have been artificially induced by alignment processes. Despite these concerns, fine-tuning LLMs such as LLaMA-1-65B on human choice datasets has improved their accuracy in predicting human behavior. Prior studies have also highlighted the value of synthetic datasets for improving LLM capabilities, particularly in problem-solving domains such as arithmetic. Pretraining on such datasets has markedly improved the prediction of human decisions.

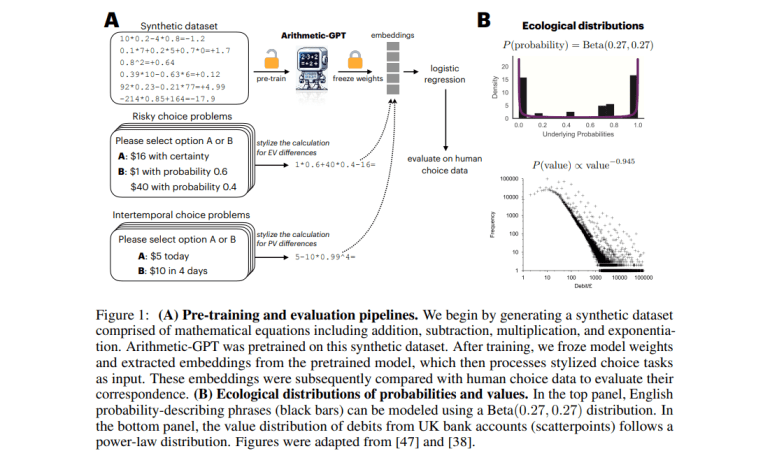

Researchers from Princeton University and the University of Warwick propose to strengthen LLMs as cognitive models by (i) leveraging computationally equivalent tasks that both LLMs and rational agents must master to solve a cognitive problem, and (ii) examining the task distributions required to elicit human-like behaviors from LLMs. Applied to decision-making, specifically risky and intertemporal choices, Arithmetic-GPT, an LLM pretrained on a curated arithmetic dataset, predicts human behavior better than conventional cognitive models. This targeted pretraining brings the model closely in line with human decision-making.
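The article does not spell out which arithmetic a rational agent performs in these two settings. As an illustration only, the sketch below assumes the standard textbook quantities: expected value for a risky gamble and exponentially discounted value for a delayed reward. The function names and the default discount factor are hypothetical, not taken from the study.

```python
def expected_value(outcomes):
    """Expected value of a gamble given (probability, payoff) pairs."""
    return sum(p * x for p, x in outcomes)


def present_value(amount, delay, discount=0.9):
    """Exponentially discounted value of `amount` received after `delay` periods.

    The discount factor 0.9 is an arbitrary illustrative default.
    """
    return amount * discount ** delay


# A 50/50 gamble between 100 and 0 has expected value 50.0.
print(expected_value([(0.5, 100), (0.5, 0)]))

# 100 received two periods from now is worth roughly 81 today at discount 0.9.
print(present_value(100, 2))
```

Both computations reduce to multiplication, addition, and exponentiation, which is why an arithmetic-only training corpus is a plausible stand-in for these choice problems.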

The researchers address the challenges of using LLMs as cognitive models by defining a data generation algorithm for creating synthetic datasets and by accessing the neural activation patterns crucial for decision-making. A small language model with a Generative Pretrained Transformer (GPT) architecture, named Arithmetic-GPT, was pretrained on arithmetic tasks. The synthetic training datasets were generated to reflect realistic probabilities and values. Pretraining used a context length of 26, a batch size of 2048, and a learning rate of 10⁻³. Existing human decision-making datasets on risky and intertemporal choices were reanalyzed to evaluate the model.
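A minimal sketch of what such a data generator might look like follows. The equation format, the U-shaped Beta distribution for probabilities, and the heavy-tailed Pareto distribution for values are assumptions chosen for illustration; the article only states that the data mirror realistic probabilities and values and that the context length is 26.

```python
import random


def make_equation(rng, max_len=26):
    """Return one synthetic arithmetic string of the form 'p*x=result'."""
    while True:
        # Assumed: probabilities concentrate near 0 and 1 (U-shaped Beta).
        p = round(rng.betavariate(0.3, 0.3), 2)
        # Assumed: values are heavy-tailed, capped at 999 to keep strings short.
        x = min(int(rng.paretovariate(1.0)), 999)
        text = f"{p}*{x}={round(p * x, 2)}"
        # Keep only strings that fit the stated context length.
        if len(text) <= max_len:
            return text


rng = random.Random(0)  # fixed seed so the sample is reproducible
dataset = [make_equation(rng) for _ in range(5)]
for line in dataset:
    print(line)
```

Each string is a complete equation, so a character-level model trained on next-token prediction has to learn the multiplication itself to complete the right-hand side.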

The experiments show that embeddings from the Arithmetic-GPT model pretrained on ecologically valid synthetic datasets predict human choices most accurately. A logistic regression using these embeddings as independent variables and human choice probabilities as the dependent variable yields higher adjusted R² values than alternative models, including LLaMA-3-70B-Instruct. In comparisons against behavioral models and Multilayer Perceptrons (MLPs), the MLPs generally perform best, but Arithmetic-GPT embeddings still correlate substantially with human data, particularly in intertemporal choice tasks. The findings are validated with 10-fold cross-validation.
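For readers unfamiliar with the evaluation metrics, the sketch below shows how adjusted R² and a 10-fold split can be computed in plain Python. It uses the standard formula, adjusted R² = 1 − (1 − R²)(n − 1)/(n − p − 1), where n is the number of observations and p the number of predictors. The regression fit itself is omitted, and the helper names are hypothetical; this is not the study's code.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination between observed and predicted values."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def adjusted_r_squared(y_true, y_pred, n_predictors):
    """R² penalized for the number of predictors (e.g. embedding dimensions)."""
    n = len(y_true)
    r2 = r_squared(y_true, y_pred)
    return 1.0 - (1.0 - r2) * (n - 1) / (n - n_predictors - 1)


def k_fold_indices(n_items, k=10):
    """Yield (train, test) index lists; each item is held out exactly once."""
    folds = [list(range(i, n_items, k)) for i in range(k)]
    for i, test in enumerate(folds):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, test


# Toy check: five observed choice probabilities and a model's predictions.
observed = [0.1, 0.3, 0.5, 0.7, 0.9]
predicted = [0.15, 0.25, 0.5, 0.75, 0.85]
print(adjusted_r_squared(observed, predicted, n_predictors=1))
```

The penalty term matters here because embeddings can have many dimensions: plain R² never decreases as predictors are added, whereas adjusted R² allows a fairer comparison between models with different numbers of inputs.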

Conclusion:

Using LLMs for cognitive modeling, as explored in this study, marks a significant step toward understanding human cognition. It offers valuable insights for cognitive science and gives businesses an opportunity to develop more nuanced, human-like AI systems for decision-making and problem-solving tasks. Understanding and harnessing the capabilities of LLMs can lead to more effective AI solutions across industries, driving innovation and improving efficiency in the market.