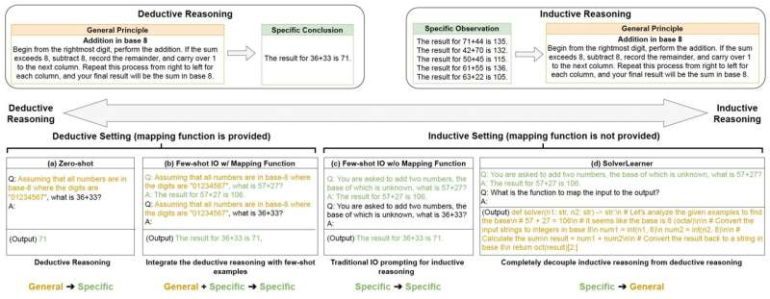

- Reasoning in AI is categorized into deductive and inductive reasoning.

- Deductive reasoning applies general principles to specific cases; inductive reasoning forms general rules from specific observations.

- A study by Amazon and UCLA examined the reasoning abilities of large language models (LLMs).

- The research found that LLMs excel at inductive reasoning but struggle with deductive reasoning.

- The SolverLearner framework was developed to enhance LLMs’ inductive reasoning capabilities by separating learning from inference.

- LLMs performed well on inductive tasks set in counterfactual scenarios that deviate from standard conventions.

- These insights are crucial for optimizing how LLMs are used in AI applications such as chatbots.

Main AI News:

In the study of cognitive processes, reasoning is generally divided into two key types: deductive and inductive. Deductive reasoning starts from a broad principle or rule and applies it to specific situations to reach conclusions. Inductive reasoning, by contrast, builds general principles from specific observations, such as concluding that all swans are white because every swan observed so far has been white.
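
To make the distinction concrete, here is a toy Python sketch. The function names and the swan data are purely illustrative and do not come from the study: deduction applies a given rule to a new case, while induction proposes a rule from observations, and a single counterexample can overturn it.

```python
# Toy illustration of deduction vs. induction; names and data are hypothetical.

def all_swans_white(animal):
    """The general rule (premise): every swan is white."""
    return "white" if animal == "swan" else "unknown"

def deduce(rule, case):
    """Deduction: apply a known general rule to a specific case."""
    return rule(case)

def induce_color_rule(observations):
    """Induction: generalize from observed (animal, color) pairs.

    Returns the single color seen for every swan, or None when the
    observations disagree or contain no swans (no universal rule).
    """
    colors = {color for animal, color in observations if animal == "swan"}
    return colors.pop() if len(colors) == 1 else None

# Deduction: the rule "all swans are white" applied to one particular swan.
print(deduce(all_swans_white, "swan"))  # white

# Induction: every swan observed so far has been white ...
seen = [("swan", "white"), ("swan", "white"), ("swan", "white")]
print(induce_color_rule(seen))  # white

# ... but one black swan breaks the generalization.
print(induce_color_rule(seen + [("swan", "black")]))  # None
```

The last line shows the asymmetry: an inductive conclusion is only as good as the observations behind it, whereas a deductive conclusion is guaranteed whenever the rule it starts from is true.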

Although human reasoning by deduction and induction has been extensively researched, how these strategies apply to artificial intelligence (AI) systems, particularly large language models (LLMs), has yet to be explored in depth. A recent study by researchers from Amazon and the University of California, Los Angeles (UCLA) aimed to bridge this gap by investigating the reasoning abilities of LLMs, advanced AI systems that can process, generate, and adapt text in human languages. Their findings, posted on the arXiv preprint server, indicate that while LLMs are strong at inductive reasoning, they often struggle with deductive reasoning.

The study sought to pinpoint the shortcomings of LLM reasoning, focusing mainly on “counterfactual” reasoning tasks that deviate from established norms. To do this, the researchers developed a framework called SolverLearner, which enhances inductive reasoning by cleanly separating the learning of input-output mapping functions from their application during inference. The learned functions are executed by external code interpreters, which reduces dependence on the LLM’s own deductive reasoning.
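
The separation between learning and inference can be pictured with a minimal Python sketch. It assumes the model’s job is to emit a Python function as source text; the LLM call is stubbed out, and `propose_function`, `compile_function`, `solve`, and the string-reversal task are hypothetical placeholders rather than the paper’s actual code. The model only writes the rule (the inductive step), and the Python interpreter, not the model, applies it to new inputs.

```python
from typing import Callable


def propose_function(examples: list[tuple[str, str]]) -> str:
    """Hypothetical stand-in for an LLM call.

    A real system would prompt a model with the examples and ask it to
    write a Python function `solve(x)`. Here the "model output" is
    hard-coded so the sketch runs offline.
    """
    return (
        "def solve(x):\n"
        "    return x[::-1]\n"
    )


def compile_function(source: str) -> Callable[[str], str]:
    """Run the proposed source in the Python interpreter and return `solve`.

    Calling exec() on model-generated code is unsafe outside a sandbox;
    this is only an illustration.
    """
    namespace: dict = {}
    exec(source, namespace)
    return namespace["solve"]


# Learning phase: the model sees only input-output examples.
train = [("abc", "cba"), ("hello", "olleh")]
solve = compile_function(propose_function(train))

# Inference phase: the interpreter, not the model, applies the learned rule.
for x in ["stressed", "llm"]:
    print(x, "->", solve(x))
```

Because the mapping is applied by the interpreter, a wrong answer at inference time can be traced to a wrong induced rule rather than to a slip in the model’s step-by-step execution.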

Earlier research primarily assessed LLMs’ deductive reasoning by evaluating their ability to follow instructions in simple reasoning tasks. This study, however, is among the first to systematically examine LLMs’ inductive reasoning, that is, their ability to generalize from previously seen information to make predictions about new cases.

Using the SolverLearner framework, the research team had LLMs learn functions that map inputs to outputs from specific examples, which let the researchers evaluate the models’ capacity to form general rules. The study found that LLMs exhibit strong inductive reasoning skills, particularly in counterfactual scenarios that diverge from the norm. This insight matters for getting the most out of LLMs in AI applications: when building chatbots or other automated systems, designing around the models’ inductive strengths may yield better results.
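
One way to picture how a learned function can be scored is to check it against held-out examples and report accuracy. The sketch below uses base-8 addition as an illustrative “counterfactual” variant of ordinary arithmetic; the task choice, the two candidate functions, and the `accuracy` helper are assumptions for illustration, not the study’s exact protocol. A candidate that falls back on the familiar base-10 habit mostly fails, while one that has induced the base-8 rule passes.

```python
def add_base8(a: str, b: str) -> str:
    """Ground truth for the counterfactual task: add two base-8 numerals."""
    return format(int(a, 8) + int(b, 8), "o")


def candidate_base10(a: str, b: str) -> str:
    """Hypothetical learned function that defaulted to ordinary base-10 addition."""
    return str(int(a) + int(b))


def candidate_base8(a: str, b: str) -> str:
    """Hypothetical learned function that correctly induced the base-8 rule."""
    return format(int(a, 8) + int(b, 8), "o")


def accuracy(fn, examples) -> float:
    """Fraction of held-out (inputs, expected) pairs the function reproduces."""
    correct = sum(fn(*inputs) == expected for inputs, expected in examples)
    return correct / len(examples)


# Held-out test pairs, labelled by the ground-truth base-8 rule.
pairs = [("7", "1"), ("17", "5"), ("25", "13"), ("3", "4")]
held_out = [(p, add_base8(*p)) for p in pairs]

print(accuracy(candidate_base10, held_out))  # 0.25 (only 3 + 4 agrees)
print(accuracy(candidate_base8, held_out))   # 1.0
```

Scoring on held-out pairs rather than on the examples the model saw is what makes this a test of generalization: a function that merely memorized the training pairs would not score well on new inputs.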

Conclusion:

The study’s findings indicate that AI systems, particularly large language models, have strong inductive reasoning capabilities but are weaker at deductive reasoning. For the market, this means businesses can effectively deploy LLMs in applications where pattern recognition and generalization from past data are key, such as customer service automation and predictive analytics. However, tasks that require strict logical deduction, especially in hypothetical scenarios, may call for supplementary tools or different AI models. This opens up opportunities for hybrid AI systems that combine the strengths of inductive and deductive reasoning, potentially driving innovation in AI-driven solutions across various industries.