TL;DR:

- Instruction tuning reformulates many tasks as instructions, improving generalization to unseen tasks.

- Visual encoders like CLIP-ViT let conversational agents interact with users through images, but the resulting models struggle to read text inside those images.

- OCR techniques enable the recognition of words from images, bridging the gap between visuals and language.

- Feeding recognized text into visual instruction-tuned models helps, but it lengthens the context and increases computation.

- Collecting 422K noisy instruction-following examples from text-rich images significantly improves feature alignment.

- Text-only GPT-4 generates 16K high-quality conversations from OCR results and image captions, denoising the OCR data and producing unique questions.

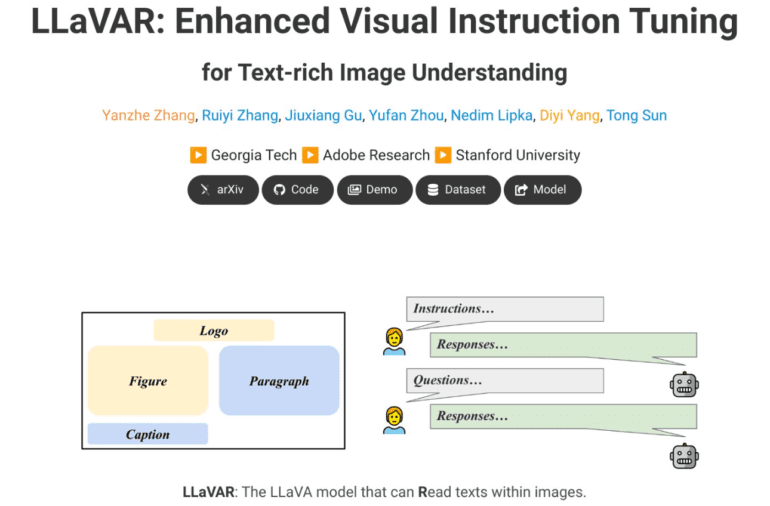

- LLaVAR (Large Language and Vision Assistant that Can Read), from Georgia Tech, Adobe Research, and Stanford University, scales up the input resolution and achieves strong results on text-based VQA datasets.

- Public availability of training and assessment data promotes further advancements.

Main AI News:

Instruction tuning has taken a major step forward by formulating a wide range of tasks as instructions, which improves a model's ability to generalize to new, unseen tasks. The resulting ability to answer open-ended questions has played a pivotal role in the recent explosion of chatbots, most notably ChatGPT. Integrating visual encoders such as CLIP-ViT into conversational agents has added a new dimension to this landscape: visual instruction-tuned models let people interact with agents through images. However, these models struggle to comprehend text embedded within images, possibly because their training data consists predominantly of natural imagery from sources such as Conceptual Captions and COCO. Yet reading is an essential part of everyday human visual perception. Fortunately, OCR techniques make it possible to recognize the words in photographs.

One way to address this challenge is to add the recognized text to the input of visual instruction-tuned models. This increases computation, because the model must process a longer context, and it does not fully exploit the encoding capacity of the visual encoder. To overcome this limitation, the researchers propose collecting instruction-following data that requires understanding the words within images, and using it to improve visual instruction-tuned models end to end. By combining manually written instructions, such as “Identify any text visible in the image provided,” with OCR results, they assembled a dataset of 422,000 noisy instruction-following examples built from text-rich images.
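A minimal Python sketch of the noisy-data recipe described above: pair a manually written instruction with the raw OCR output of a text-rich image. Only the first instruction template is quoted in the article; the other templates, the `InstructionExample` record, and the `build_noisy_example` helper are hypothetical, and the OCR engine is assumed to return a plain list of recognized text lines.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

# Only the first template is quoted in the article; the others are hypothetical.
OCR_INSTRUCTIONS = [
    "Identify any text visible in the image provided.",
    "What words are written in this image?",
    "Transcribe the text shown in the picture.",
]


@dataclass
class InstructionExample:
    """One (image, instruction, response) training triplet (hypothetical schema)."""
    image_path: str
    instruction: str
    response: str


def build_noisy_example(
    image_path: str, ocr_lines: List[str], rng: random.Random
) -> Optional[InstructionExample]:
    """Pair a randomly chosen instruction with the raw OCR output as the target."""
    # Drop blank detections, but keep OCR noise such as typos or split words.
    lines = [line.strip() for line in ocr_lines if line.strip()]
    if not lines:
        return None  # no readable text, so the image is skipped
    return InstructionExample(
        image_path=image_path,
        instruction=rng.choice(OCR_INSTRUCTIONS),
        response=" ".join(lines),
    )


rng = random.Random(0)
example = build_noisy_example("poster.jpg", ["SUMMER SALE", "  ", "50% OFF"], rng)
print(example.response)  # SUMMER SALE 50% OFF
```

Leaving the OCR output unedited is deliberate in this sketch: the examples are noisy, but they are cheap to produce at scale, which is what makes them useful for aligning visual features with the language decoder.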

The 422,000 noisy examples substantially improve feature alignment between the language decoder and the visual features. In addition, the researchers prompted text-only GPT-4 with OCR results and image captions to generate 16,000 conversations that serve as high-quality instruction-following examples. Each conversation contains multiple turns of question-and-answer pairs, and GPT-4 must generate intricate instructions from the input, which requires it to denoise the OCR data and produce unique questions, as depicted in Figure 1. The researchers then evaluate the collected data by incorporating the noisy and high-quality examples into LLaVA's pretraining and finetuning stages, respectively.
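The GPT-4 step can be sketched as prompt construction plus reply parsing. The following Python outline assumes a chat-style message format (a list of `role`/`content` dictionaries) and a reply laid out as alternating `Q:` and `A:` lines; the system prompt wording, the reply format, and both helper names are hypothetical, and the actual call to GPT-4 is deliberately left out.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical prompt wording; the article does not give the actual prompt.
SYSTEM_PROMPT = (
    "You cannot see the image. You are given its caption and noisy OCR results. "
    "Correct obvious OCR errors, then write a multi-turn conversation about the "
    "text in the image. Put each question on a line starting with 'Q:' and each "
    "answer on the next line starting with 'A:'."
)


def build_messages(caption: str, ocr_words: List[str]) -> List[Dict[str, str]]:
    """Assemble chat-style messages for a text-only model such as GPT-4."""
    user_content = f"Caption: {caption}\nOCR: {', '.join(ocr_words)}"
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_content},
    ]


def parse_conversation(reply: str) -> List[Tuple[str, str]]:
    """Split a 'Q:'/'A:' formatted reply into (question, answer) pairs."""
    turns: List[Tuple[str, str]] = []
    question: Optional[str] = None
    for raw_line in reply.splitlines():
        line = raw_line.strip()
        if line.startswith("Q:"):
            question = line[2:].strip()
        elif line.startswith("A:") and question is not None:
            turns.append((question, line[2:].strip()))
            question = None  # an answer closes the current turn
    return turns


messages = build_messages("A store poster.", ["SUMMER", "SALF", "50%"])
reply = "Q: What event is advertised?\nA: A summer sale.\nQ: How large is the discount?\nA: 50%."
print(parse_conversation(reply))
# [('What event is advertised?', 'A summer sale.'), ('How large is the discount?', '50%.')]
```

Because the model is text-only, the prompt carries the caption and the (possibly misspelled) OCR words instead of the image; the caption gives GPT-4 enough context to correct errors such as "SALF" while writing its questions.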

Researchers from Georgia Tech, Adobe Research, and Stanford University call the resulting model LLaVAR, short for Large Language and Vision Assistant that Can Read. To better encode small textual details, they also experiment with raising the input resolution from 224×224 to 336×336 pixels, compared with the original LLaVA. They report empirical results on four text-based VQA datasets, along with ScienceQA finetuning results. They also run a GPT-4-based evaluation of instruction following on 50 text-rich images from LAION and 30 natural images from COCO. Finally, they provide a qualitative analysis of LLaVAR's ability to follow more complex instructions on material such as posters, website screenshots, and tweets.
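To see why input resolution matters for reading small text, the following sketch counts the visual tokens a ViT encoder produces for a square image. It assumes a patch size of 14 pixels, as in CLIP ViT-L/14, and ignores any [CLS] token; the helper name is hypothetical.

```python
def num_patch_tokens(resolution: int, patch_size: int = 14) -> int:
    """Visual tokens a ViT yields for a square image, excluding any [CLS] token."""
    if resolution % patch_size != 0:
        raise ValueError("resolution must be a multiple of patch_size")
    patches_per_side = resolution // patch_size
    return patches_per_side * patches_per_side


for resolution in (224, 336):
    print(resolution, num_patch_tokens(resolution))
# 224 256
# 336 576
```

Under that assumption, moving from 224×224 to 336×336 raises the visual token count from 256 to 576 (2.25×). Each character in the image is then covered by more patches, at the cost of a longer input sequence for the language decoder.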

In summary, the main contributions of this research are as follows:

- The collection of 16,000 high-quality and 422,000 noisy instruction-following examples, both of which improve visual instruction tuning. With this data, LLaVAR supports end-to-end interactions based on diverse online content that combines text and images, while modestly improving performance on natural photographs.

- The training and evaluation data, as well as the model checkpoints, are publicly available, supporting further progress and collaboration in this rapidly evolving field.

Conclusion:

LLaVAR's integration of visual and text comprehension marks a significant milestone in AI interaction. It opens new possibilities for AI-powered chatbots and virtual assistants, enabling them to understand and respond to instructions that involve both images and text. The combination of instruction tuning, OCR, and large instruction-following datasets allows LLaVAR to support end-to-end interactions based on diverse online content.

This advancement could have substantial implications for industries such as e-commerce, customer support, and content creation, where businesses can use AI systems that comprehend and follow instructions in a multimodal context. Because LLaVAR's training and evaluation data are publicly available, researchers and developers can build on them to improve similar models, driving continued innovation in the market.