TL;DR:

- The LMSYS-Chat-1M dataset captures real-world user interactions with large language models (LLMs).

- It addresses the difficulty of collecting real user questions, which stems from high operational costs and data privacy concerns.

- The dataset highlights the effectiveness of fine-tuning smaller language models for content moderation.

- LMSYS-Chat-1M offers insights into model robustness and safety, and provides a new benchmark for testing both.

- It supports instruction fine-tuning, improving performance on specific benchmarks.

- This resource is a goldmine for generating new benchmark questions for language models.

Main AI News:

In the realm of artificial intelligence, large language models (LLMs) have emerged as indispensable tools, powering a multitude of applications, from virtual assistants to code generation. What sets these LLMs apart is their ability to adapt and respond to specific queries and question formats tailored to diverse purposes. Understanding how users actually interact with LLMs reveals what users expect from them and helps build trust in these systems. It also opens the door to improving LLMs, curbing misuse, and strengthening AI safety. Collecting such interaction data at scale, however, runs into several obstacles:

- The exorbitant operational costs tied to running large language model services pose a financial challenge for numerous organizations keen on collecting authentic user question data.

- Enterprises armed with substantial user question datasets remain reluctant to share them, wary of revealing their competitive edge and safeguarding data privacy.

- Encouraging users to engage with open-source language models presents a formidable hurdle, as these models often fall short of the performance benchmarks set by industry giants.

- This uphill battle to captivate users’ interest with open models makes it arduous to amass a substantial dataset that faithfully mirrors real user interactions with these models for research purposes.

To bridge this gap, a research paper introduces a large-scale dataset: LMSYS-Chat-1M. The dataset consists of real-world conversations between users and large language models (LLMs), gathered over a five-month period. The researchers collected it by offering a free online LLM service that gave access to 25 prominent LLMs, both open-source and proprietary. The effort consumed considerable computational resources, amounting to thousands of A100 hours.

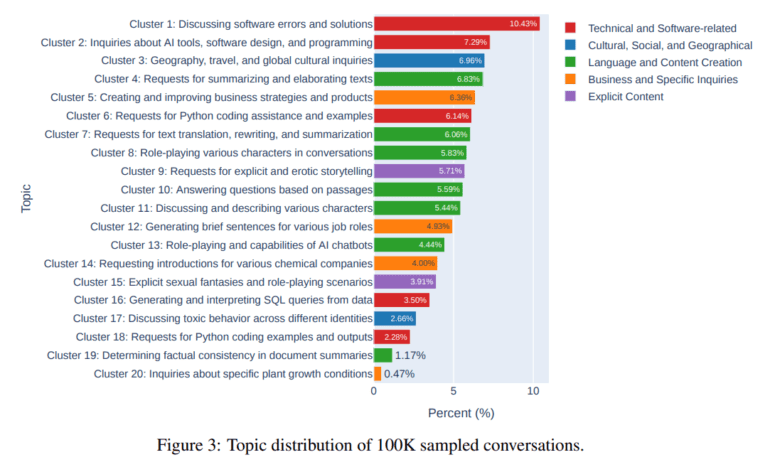

To sustain user engagement, the researchers added a competitive element known as the “chatbot arena,” encouraging people to keep using the service by regularly updating rankings and leaderboards for popular LLMs. As a result, LMSYS-Chat-1M contains over one million user conversations spanning a wide range of languages and topics. All users consented to having their interactions included in the dataset, under the terms outlined in the “Terms of Use” section of the data collection website.

The dataset was collected from the Vicuna demo and Chatbot Arena website between April and August 2023. The platform offers users three chat interfaces: single-model chat, chatbot arena (where chatbots compete head to head), and a side-by-side chatbot arena for comparing two chatbots. The platform is entirely free: users are neither paid for participating nor charged any fees.

The paper presents four use cases that show how LMSYS-Chat-1M can be applied. First, the dataset can be used to fine-tune smaller language models into content moderators whose performance rivals that of GPT-4. Second, even though some of the served models have safety measures in place, LMSYS-Chat-1M contains dialogues that can put the safeguards of leading language models to the test, providing a new benchmark for studying model robustness and safety.
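As a rough illustration of the content-moderation use case, the sketch below turns labeled user messages into prompt/label pairs of the kind a smaller model could be fine-tuned on. The record layout (`text`, `flagged`, `category`), the prompt template, and the helper `to_moderation_example` are assumptions made for this example; they are not the paper's actual data format or training setup.

```python
from dataclasses import dataclass

# Hypothetical prompt template; the wording used in the paper is not given here.
PROMPT_TEMPLATE = (
    "Decide whether the following user message is harmful.\n"
    "Message: {text}\n"
    "Answer:"
)


@dataclass
class ModerationExample:
    prompt: str
    label: str


def to_moderation_example(record: dict) -> ModerationExample:
    """Convert one labeled message into a prompt/label pair for fine-tuning."""
    if record["flagged"]:
        label = f"harmful ({record.get('category', 'unspecified')})"
    else:
        label = "safe"
    prompt = PROMPT_TEMPLATE.format(text=record["text"].strip())
    return ModerationExample(prompt=prompt, label=label)


# Toy records standing in for labeled dataset rows (illustrative only).
records = [
    {"text": "How do I sort a list in Python?", "flagged": False},
    {"text": " <message flagged by a moderation tool> ", "flagged": True,
     "category": "harassment"},
]
examples = [to_moderation_example(r) for r in records]
```

Each resulting pair could then be fed to whatever fine-tuning pipeline is in use; the label vocabulary here is deliberately simple and would need to match the moderation categories actually being targeted.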

Notably, the dataset contains high-quality dialogues between users and language models that are well suited for instruction fine-tuning. The authors show that a subset of these dialogues enables Llama-2 models to reach performance comparable to Vicuna and Llama-2 Chat on specific benchmarks. Finally, with its broad coverage of topics and tasks, LMSYS-Chat-1M is a valuable resource for generating new benchmark questions for language models.
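To make the idea of picking a subset for instruction fine-tuning concrete, here is a minimal sketch that filters conversations and flattens them into prompt/response pairs. The field names (`model`, `language`, `flagged`, `turns`), the `TRUSTED_MODELS` set, and the selection criteria are all hypothetical; the criteria the authors actually used are not described above.

```python
from typing import Iterable

# Hypothetical set of models whose answers are treated as high quality.
TRUSTED_MODELS = {"model-a", "model-b"}


def select_for_finetuning(conversations: Iterable[dict],
                          language: str = "English") -> list:
    """Keep unflagged conversations from trusted models and flatten them
    into prompt/response pairs."""
    pairs = []
    for conv in conversations:
        if conv["language"] != language or conv["model"] not in TRUSTED_MODELS:
            continue
        if conv.get("flagged", False):
            continue
        turns = conv["turns"]
        # Pair each user message with the assistant reply that follows it.
        for user, assistant in zip(turns[::2], turns[1::2]):
            if user["role"] == "user" and assistant["role"] == "assistant":
                pairs.append({"prompt": user["content"],
                              "response": assistant["content"]})
    return pairs


# Toy conversations (illustrative only).
toy = [
    {"model": "model-a", "language": "English", "turns": [
        {"role": "user", "content": "Hi"},
        {"role": "assistant", "content": "Hello!"}]},
    {"model": "model-c", "language": "English", "turns": [
        {"role": "user", "content": "Hi"},
        {"role": "assistant", "content": "Hey"}]},
    {"model": "model-b", "language": "English", "flagged": True, "turns": [
        {"role": "user", "content": "..."},
        {"role": "assistant", "content": "..."}]},
]
pairs = select_for_finetuning(toy)
```

Only the first toy conversation survives: the second comes from a model outside the trusted set, and the third is flagged.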

Conclusion:

The release of LMSYS-Chat-1M represents a significant step for the AI market. It addresses critical data collection challenges and gives researchers real conversation data for improving language models. Researchers and organizations can use this dataset to improve AI applications, with the aim of better user experiences and stronger safety measures in AI-driven systems.