TL;DR:

- Med-Flamingo is a multimodal (vision-language) foundation model specialized for the medical domain.

- It supports in-context (few-shot) learning and draws on multimodal knowledge from diverse medical fields.

- The model excels in generative medical visual question-answering (VQA) tasks, outperforming earlier models.

- Med-Flamingo demonstrates medical reasoning skills by providing justifications for its predictions.

- However, its effectiveness is affected by the diversity and accessibility of training data and the complexity of medical tasks.

Main AI News:

As Artificial Intelligence (AI) advances rapidly, foundation models are showing that they can tackle diverse challenges with minimal labeled data. One notable capability is in-context learning: a model picks up a new task from a few examples supplied in its input, without any change to its parameters. In healthcare and medicine, in-context learning could make medical AI models far more adaptable to new tasks.

However, bringing in-context learning to the medical domain is difficult. Medical data is complex and multimodal, and the range of tasks that need to be handled is broad. Earlier multimodal medical foundation models such as CheXzero and BiomedCLIP perform well on tasks like reading chest X-rays and working with biomedical literature, but none of them supports in-context learning.

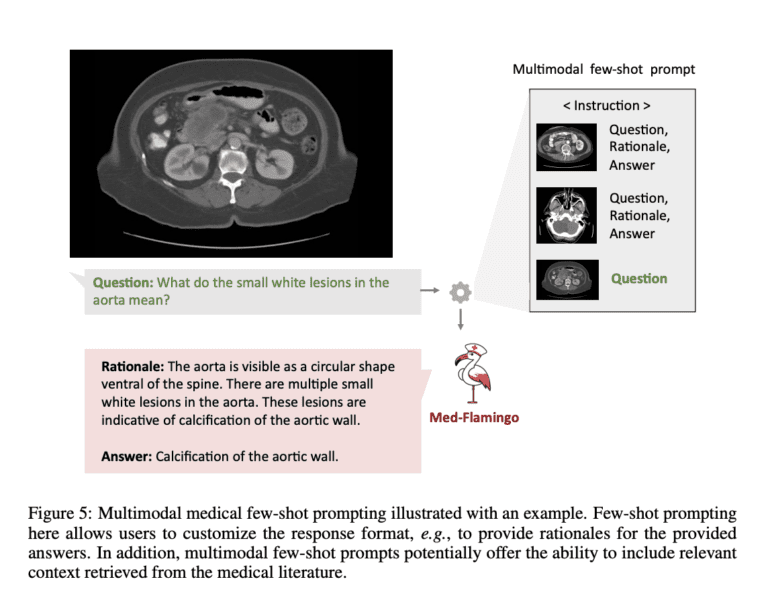

To fill this gap, researchers have introduced Med-Flamingo, a foundation model designed for the medical domain. It builds on Flamingo, one of the pioneering vision-language models, which demonstrated strong in-context and few-shot learning, and extends it with multimodal knowledge from diverse medical fields.
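In practice, few-shot use of a Flamingo-style model means placing a few solved image-question-answer examples in the prompt, followed by the new image and question; the model's weights are never updated. The sketch below shows how such an interleaved prompt might be assembled. It assumes OpenFlamingo-style `<image>` and `<|endofchunk|>` markers, since the article does not specify Med-Flamingo's actual prompt template, and `VQAExample`, `build_few_shot_prompt` and the image file names are hypothetical. It does not load a model; it only illustrates the prompt layout.

```python
from __future__ import annotations

from dataclasses import dataclass

# ASSUMPTION: OpenFlamingo-style special markers; Med-Flamingo's real template may differ.
IMAGE_TOKEN = "<image>"
END_OF_CHUNK = "<|endofchunk|>"


@dataclass
class VQAExample:
    """One hypothetical demonstration: an image reference plus a solved question."""
    image_path: str  # images would be passed to the vision encoder separately
    question: str
    answer: str


def build_few_shot_prompt(
    shots: list[VQAExample], query_image: str, query_question: str
) -> tuple[str, list[str]]:
    """Interleave demonstrations and the query into a single text prompt.

    Returns the prompt and the ordered image list (one image per IMAGE_TOKEN).
    No parameters change: the task is conveyed only through the examples.
    """
    parts: list[str] = []
    images: list[str] = []
    for shot in shots:
        images.append(shot.image_path)
        parts.append(
            f"{IMAGE_TOKEN}Question: {shot.question} Answer: {shot.answer}{END_OF_CHUNK}"
        )
    images.append(query_image)
    # The query ends with an open "Answer:" so the model generates a free-form reply.
    parts.append(f"{IMAGE_TOKEN}Question: {query_question} Answer:")
    return "".join(parts), images


# Usage with hypothetical file names and questions.
shots = [
    VQAExample("xray_01.png", "Which organ is shown?", "The lungs."),
    VQAExample("ct_02.png", "What imaging modality is this?", "Computed tomography."),
]
prompt, images = build_few_shot_prompt(shots, "query.png", "What abnormality is visible?")
print(prompt)
```

Ending the prompt with an open "Answer:" is what makes this generative VQA: the model continues the text rather than picking from a fixed list of options.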

Med-Flamingo's development starts with a new interleaved image-text dataset built from more than 4,000 medical textbooks, a source chosen to ensure accuracy and reliability. The researchers evaluate the model on generative medical visual question-answering (VQA) tasks, in which the model writes open-ended answers instead of choosing from a predefined set of options. Performance is measured with a human evaluation score, in which clinicians rate the generated answers. The team also introduces a challenging Visual USMLE dataset of difficult generative VQA problems spanning various specialties, combining images with case vignettes and lab results.

In head-to-head comparisons, Med-Flamingo outperformed earlier models on clinical evaluation scores across three generative medical VQA datasets, and doctors preferred its predictions, which points to its medical reasoning ability. Med-Flamingo can also answer complex medical questions and justify its answers, something not previously achieved by multimodal medical foundation models.

Despite these results, the model's effectiveness still depends on the diversity and accessibility of its training data and on the complexity of certain medical tasks.

Conclusion:

Med-Flamingo’s emergence represents a significant step forward for the medical AI market. The incorporation of in-context learning and multimodal knowledge processing enhances the model’s capabilities, making it more valuable for medical professionals and researchers. The success of Med-Flamingo underscores the potential for further advancements in AI technologies tailored for the healthcare sector. As the demand for more sophisticated and reliable medical AI solutions grows, Med-Flamingo’s innovations position it favorably in the competitive landscape of the AI-driven medical market.