- Meta AI now includes Llama 3.1 405B, offering improved handling of complex queries.

- Users must manually switch to Llama 3.1 405B, which is subject to query limits; once the limit is reached, Meta AI reverts to Llama 3.1 70B.

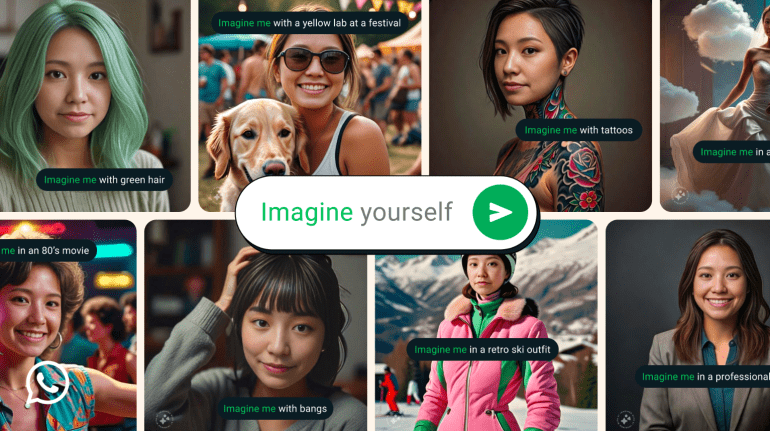

- The new “Imagine Yourself” model generates creative selfies based on user prompts.

- Privacy concerns have arisen because Meta has not disclosed the model’s training data, and users worry that public posts and images were used.

- New editing tools allow for further customization of generated images.

- Meta AI will replace the Voice Commands feature on Meta Quest VR headsets.

- Meta AI is now available in 22 countries, with new additions including Argentina and Mexico.

- The assistant now supports multiple languages, with more expected in the future.

Main AI News:

Meta AI, the assistant available across Facebook, Instagram, Messenger, and the web, has gained a set of new features. The centerpiece is Meta’s latest flagship AI model, Llama 3.1 405B, which Meta says handles complex queries significantly better than its predecessor.

The update follows criticism that Meta AI underperformed, particularly at fact-checking, web searches, and simple queries. TechCrunch’s Devin Coldewey and other reviewers noted that earlier versions struggled with even basic tasks such as finding recipes and airfares. Meta positions Llama 3.1 405B as an answer to these shortcomings, citing stronger mathematics and coding capabilities that make it better suited to homework help, scientific explanations, and code debugging.

However, Llama 3.1 405B is not the default: users must switch to it manually, and its use is subject to a query limit. Once users exceed that limit, Meta AI reverts to the less capable Llama 3.1 70B model. Meta has labeled the integration a “preview,” indicating that it is still in its early stages.

Beyond the Llama 3.1 405B integration, Meta AI introduces the “Imagine Yourself” model, which generates creative selfies from user prompts. Available in beta, the feature lets users transform their photos into imaginative scenarios by typing prompts such as “Imagine me surfing” or “Imagine me on a beach vacation.” It also raises privacy concerns, since Meta has not disclosed exactly what data was used to train the model. Users have expressed unease that public posts and images from Meta’s platforms may have been used, a worry compounded by a complicated opt-out process.

Meta AI also gains new editing tools for further customizing generated images. Users can add or remove objects and change scenes with commands like “Change the cat to a corgi.” Starting next month, an “Edit with AI” button will offer more precise adjustments. Meta also plans to add shortcuts for sharing AI-generated images across its apps.

The update also reaches Meta Quest VR headsets, where Meta AI will replace the Voice Commands feature in “experimental mode” starting next month in the U.S. and Canada. With passthrough enabled (which shows the user’s real surroundings through the headset’s cameras), users can ask Meta AI about their physical environment; for example, while holding up a pair of shorts, a user might ask, “Look and tell me what kind of top would complete this outfit.”

Meta AI’s reach is also expanding globally. The assistant is now available in 22 countries, with newly added countries including Argentina, Chile, Colombia, Ecuador, Mexico, Peru, and Cameroon. It now also supports French, German, Hindi, Hindi-Romanized Script, Italian, Portuguese, and Spanish, with more languages expected in the future.

Conclusion:

Meta AI’s latest updates represent a significant push to improve the user experience through more advanced capabilities and a wider reach. Llama 3.1 405B aims to address earlier criticism of the assistant’s performance and could broaden its usefulness for education and coding. The “Imagine Yourself” model and new editing tools extend Meta’s focus on personalized, creative features. However, privacy concerns, along with friction such as manual model switching, query limits, and a complicated opt-out process, could slow adoption. Overall, these developments make Meta AI a more competitive AI assistant, and its expanded global presence and multilingual support are likely to attract a broader user base.