- Meta introduces the Aria Everyday Activities (AEA) dataset to support AR and AI research.

- The AEA dataset offers rich, multimodal data captured from the wearer’s perspective using Project Aria glasses.

- Traditional datasets lack the multimodal sensor data needed to develop future AR devices.

- The AEA dataset also includes processed machine perception data that can serve as pseudo-ground truth for training AI models.

- Research applications demonstrate the dataset’s potential for neural scene reconstruction and prompt segmentation.

Main AI News:

In human-computer interaction, the combination of augmented reality (AR) and wearable artificial intelligence (AI) devices marks a significant step forward. These devices not only improve the user experience but also open new avenues for data collection, paving the way for highly contextualized AI assistants that integrate seamlessly with users’ cognitive processes.

AI assistants such as GPT-4V, Gemini, and LLaVA have made significant progress in integrating context from sources like text, images, and videos. However, their understanding remains limited to data that users consciously provide or to information publicly available on the internet. The next frontier is AI assistants that can access and analyze contextual cues from users’ everyday activities and surroundings. Achieving this is a formidable technological challenge, because training such complex AI models effectively requires vast and diverse datasets.

Traditional datasets, sourced primarily from video cameras, fall short on several fronts. They lack the multimodal sensor data that future AR devices will rely on, such as precise motion tracking, comprehensive environmental context, and detailed 3D spatial information. They also fail to capture the nuanced personal context needed to reliably infer users’ behaviors or intentions, and their different data types and sources are not synchronized in time or space.

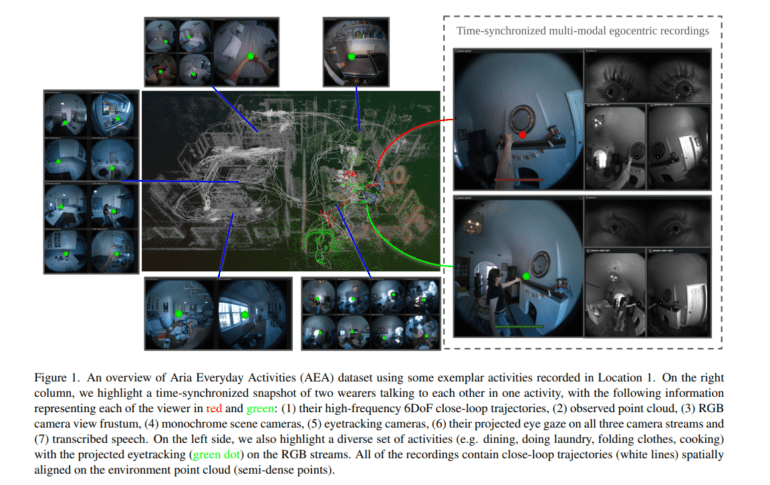

To address these limitations, Meta researchers have introduced the Aria Everyday Activities (AEA) dataset. Built on the sensor platform of Project Aria devices, the dataset offers a rich, multimodal, four-dimensional (3D space plus time) view of daily activities from the wearer’s viewpoint. It includes spatial audio, high-definition video, eye-tracking data, inertial measurements, and more, all aligned within a shared 3D coordinate system and synchronized over time.
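The article does not describe how the streams are synchronized in practice. As a generic illustration only, assuming every stream carries timestamps on one shared clock (nanoseconds here, a hypothetical choice), the sketch below pairs each video frame with its nearest eye-gaze sample. The function names, the sample rates, and the `max_gap_ns` tolerance are hypothetical and are not part of any Project Aria tooling.

```python
from bisect import bisect_left
from typing import List, Sequence, Tuple


def nearest_index(timestamps: Sequence[int], t: int) -> int:
    """Return the index of the timestamp closest to t.

    Assumes `timestamps` is non-empty and sorted in ascending order.
    Ties go to the earlier sample.
    """
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    before, after = timestamps[i - 1], timestamps[i]
    return i - 1 if t - before <= after - t else i


def align_streams(
    frame_ts: Sequence[int], gaze_ts: Sequence[int], max_gap_ns: int
) -> List[Tuple[int, int]]:
    """Pair each frame index with its nearest gaze-sample index.

    Pairs whose timestamps differ by more than max_gap_ns (a hypothetical
    tolerance) are dropped, so frames without nearby gaze data are skipped.
    """
    if not gaze_ts:
        return []
    pairs = []
    for f_idx, t in enumerate(frame_ts):
        g_idx = nearest_index(gaze_ts, t)
        if abs(gaze_ts[g_idx] - t) <= max_gap_ns:
            pairs.append((f_idx, g_idx))
    return pairs


if __name__ == "__main__":
    frames = [0, 33_000_000, 66_000_000]  # hypothetical ~30 fps frame timestamps
    gaze = [0, 10_000_000, 20_000_000, 30_000_000, 40_000_000]  # hypothetical 100 Hz gaze
    print(align_streams(frames, gaze, max_gap_ns=5_000_000))  # → [(0, 0), (1, 3)]
```

In this example the third frame is dropped because the closest gaze sample is 26 ms away. Nearest-neighbor matching is only one simple option; interpolating between the two surrounding samples is a common alternative when the signal varies smoothly.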

With 143 sequences of daily activities recorded across five indoor locations, the AEA dataset provides a wealth of egocentric multimodal information. Because the sequences were recorded with Project Aria glasses, they offer a comprehensive view of the wearer’s environment and experiences.

In addition to raw multimodal sensor data, the dataset includes processed output from machine perception services, which serves as pseudo-ground truth for training and evaluating AI models.

This machine perception data includes time-aligned speech transcriptions, per-frame 3D eye gaze vectors, high-frequency 3D trajectories, and detailed scene point clouds, all of which support further research and application development.

Several research applications have already demonstrated the dataset’s potential. These include neural scene reconstruction, which builds detailed 3D models of the environment from egocentric data, and prompt segmentation, which recognizes and tracks objects using cues such as voice instructions and eye gaze.

Conclusion:

The release of Meta’s Aria Everyday Activities (AEA) dataset marks a significant advance in data collection for AR wearables. By providing rich, multimodal data captured from the wearer’s perspective, the dataset addresses key limitations of traditional datasets and opens the door to new AI capabilities. As AR and AI technologies continue to converge, businesses can expect a wave of innovation in highly contextualized, personalized AI assistants that could reshape human-computer interaction.