TL;DR:

- Meta introduces Shepherd LLM, an AI tool for refining generative AI responses.

- Shepherd critiques model-generated outputs and suggests improvements for accuracy.

- The approach relies on a high-quality feedback dataset curated from community input.

- Despite having only 7B parameters, Shepherd produces critiques that match or beat those of established models in evaluations.

- OpenAI says its GPT-4 model outperforms the GPT systems that are currently commercially available.

- Potential for enhanced AI integration in social media platforms and query refinement.

Main AI News:

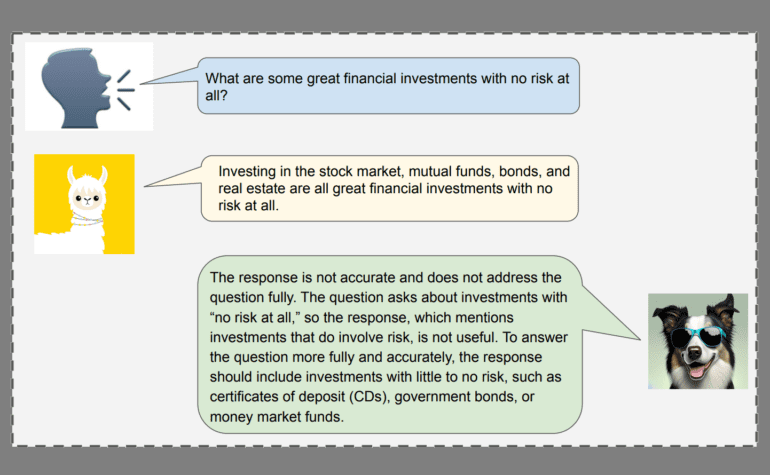

To address inaccurate and misleading generative AI responses, Meta is turning to AI itself with a new system called “Shepherd.” The Shepherd LLM is designed to evaluate model-generated responses and propose improvements, with the goal of making generative AI output more accurate.

Meta elaborates on the underlying methodology: “At the heart of our approach lies a meticulously curated feedback repository, sourced from community input and human annotations. While Shepherd may be compact (comprising 7B parameters), its assessments either match or surpass those of established models, including the prominent ChatGPT. Employing GPT-4 for benchmarking, Shepherd attains an impressive win rate ranging from 53% to 87% compared to competitive alternatives. In comprehensive human evaluations, Shepherd consistently outperforms other models and on average, achieves parity with ChatGPT.”
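For readers unfamiliar with the metric, a win rate in this kind of benchmarking is the share of pairwise comparisons in which a judge prefers one model's critique over another's. The snippet below is a minimal sketch of that arithmetic, not Meta's evaluation code: the `win_rate` function, the label names, and the choice to count a tie as half a win are all assumptions made for illustration, and the sample labels are made up.

```python
from collections import Counter


def win_rate(judgments, count_ties_as_half=True):
    """Fraction of pairwise comparisons won by the candidate model.

    `judgments` is an iterable of "win", "tie", or "loss" labels, one per
    comparison. Counting a tie as half a win is an assumption here, not
    something the article specifies.
    """
    counts = Counter(judgments)
    total = counts["win"] + counts["tie"] + counts["loss"]
    if total == 0:
        raise ValueError("no judgments supplied")
    tie_credit = 0.5 if count_ties_as_half else 0.0
    return (counts["win"] + tie_credit * counts["tie"]) / total


# Made-up labels for illustration only; not data from the Shepherd evaluation.
example = ["win"] * 6 + ["tie"] * 2 + ["loss"] * 2
print(f"{win_rate(example):.0%}")  # prints "70%"
```

Under this definition, any figure above 50% means the judge preferred the candidate's critiques more often than the alternative's.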

This lets the system give automated feedback on why a generative AI output is inaccurate, which in turn helps users dig further into a query for additional information or clarification.

This raises a question: “Wouldn’t it be more effective to build this functionality into the core AI model and eliminate the intermediary step?” Judging whether that is feasible takes engineering expertise that I won’t claim to have.

The ultimate goal is to improve responses by prompting generative AI systems to re-evaluate erroneous or incomplete answers, iterating toward more refined responses to user queries. OpenAI says its GPT-4 model already outperforms existing commercially available GPT systems, such as those used in the current version of ChatGPT. GPT-4 is also showing promise as a foundation for moderation tasks across platforms, often rivaling human moderators in effectiveness.
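The iterative re-evaluation described above can be pictured as a loop in which a critic model reviews each answer and the generator revises it until the critic has no further objections. The following is only a sketch of that idea under stated assumptions: `refine_with_critic`, `generate`, and `critique` are hypothetical names, the toy functions stand in for real model calls, and nothing here reflects how Meta or OpenAI actually wire their systems together.

```python
from typing import Callable, Optional


def refine_with_critic(
    question: str,
    generate: Callable[[str, Optional[str], Optional[str]], str],
    critique: Callable[[str, str], Optional[str]],
    max_rounds: int = 3,
) -> str:
    """Alternate between generating an answer and critiquing it.

    `generate(question, previous_answer, feedback)` returns an answer;
    `critique(question, answer)` returns feedback text, or None when the
    critic finds nothing to fix. Both are hypothetical stand-ins.
    """
    answer = generate(question, None, None)
    for _ in range(max_rounds):
        feedback = critique(question, answer)
        if feedback is None:  # critic is satisfied, stop early
            break
        answer = generate(question, answer, feedback)
    return answer


# Toy stand-ins so the sketch runs without any model.
def toy_generate(question, previous_answer, feedback):
    return "Paris" if feedback else "Lyon"


def toy_critique(question, answer):
    return None if answer == "Paris" else "The capital of France is not Lyon."


print(refine_with_critic("What is the capital of France?", toy_generate, toy_critique))
```

The `max_rounds` cap is a design choice for the sketch: it keeps the loop from running indefinitely when the critic and the generator never agree.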

These developments could drive substantial advances in AI integration across social media platforms. While such systems may never fully match human judgment in discerning subtlety and context, automated moderation of our posts could become far more common. For general queries, auxiliary assessments like Shepherd could also help fine-tune results or guide developers in building better models to meet growing demand.

Conclusion:

The launch of Meta’s Shepherd LLM marks a significant step toward more accurate generative AI responses. By critiquing model outputs and suggesting improvements, Shepherd points to a trend toward more reliable AI assistance, and it could reshape automated moderation and query refinement across industries.