- Meta teams up with Hammerspace for cutting-edge AI infrastructure project.

- The partnership aims to support 49,152 Nvidia H100 GPUs across two clusters.

- Meta aims to build open, responsibly developed artificial general intelligence (AGI) that is widely accessible.

- New GPU clusters will drive advancements in AI model development and research.

- Previous AI Research SuperCluster laid the groundwork, now superseded by larger, more advanced clusters.

- Meta’s storage infrastructure, including collaboration with Hammerspace, enables rapid code iteration and debugging.

- Ambitious plans to scale up to 350,000 Nvidia H100 GPUs by the end of 2024.

Main AI News:

Meta has officially partnered with Hammerspace on a major AI infrastructure project. The collaboration establishes Hammerspace as Meta’s primary supplier of data orchestration software for a deployment of 49,152 Nvidia H100 GPUs, split evenly across two clusters.

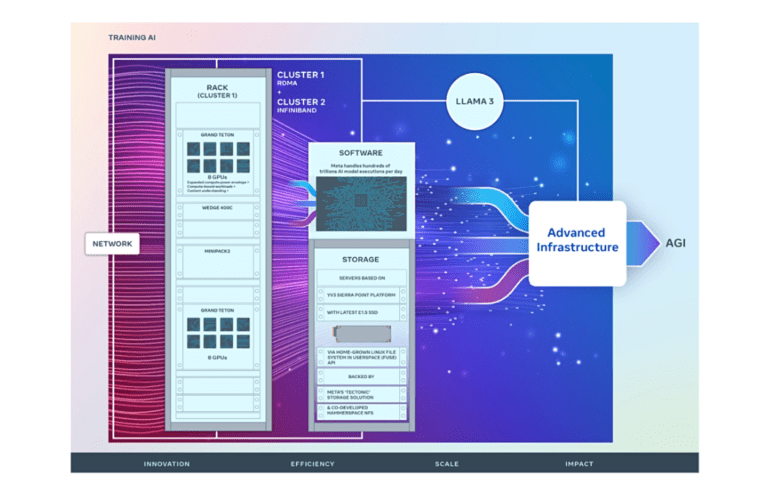

As the parent company of Facebook and Instagram, Meta envisions a future in which artificial general intelligence (AGI) is both widely accessible and responsibly developed. In a recent blog post, Meta reaffirmed this vision and shared details of two 24k-GPU clusters built to expand its AI capabilities. The post covers hardware specifications, network architecture, storage, design principles, performance, and software integration, all aimed at maximizing throughput and reliability across diverse AI workloads.

For weeks, Hammerspace had been hinting at a major hyperscaler AI customer, which industry insiders speculated was Meta. Meta’s announcement now confirms that Hammerspace helps power its AI infrastructure, notably the two clusters used to train Llama 3.

According to Meta’s blog post, these clusters will support both current and next-generation AI models, including the highly anticipated Llama 3, the successor to the publicly released Llama 2, as well as AI research across GenAI and other areas.

The new clusters build on Meta’s earlier AI Research SuperCluster, which has 16,000 Nvidia A100 GPUs and was instrumental in developing the company’s first AI models. The new clusters mark a significant leap forward: they can handle hundreds of trillions of AI model executions daily, surpassing the capabilities of their predecessor.

Detailed diagrams in the blog post illustrate the scale and design of the new infrastructure and its central role in GenAI product development and AI research.

Each cluster contains 24,576 Nvidia H100 GPUs. The two differ in network fabric: one uses RDMA over Converged Ethernet (RoCE), and the other uses Nvidia Quantum-2 InfiniBand; both interconnect 400 Gbps endpoints. Both clusters are built on Meta’s Grand Teton OCP hardware platform and use Meta’s Tectonic distributed storage system, optimized for flash media and exabyte-scale storage requirements.
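The cluster figures above can be cross-checked with simple arithmetic. The sketch below confirms that two 24,576-GPU clusters account for the 49,152 GPUs in the Hammerspace deployment, and shows what share of the 350,000-GPU end-of-2024 target that represents. The servers-per-cluster figure relies on an assumption not stated in the article: that each Grand Teton server hosts 8 H100 GPUs. The names `GPUS_PER_SERVER` and `cluster_summary` are illustrative only.

```python
# Back-of-the-envelope sizing for Meta's two GenAI clusters.
# GPUS_PER_CLUSTER, CLUSTERS and END_2024_TARGET come from the article.
# GPUS_PER_SERVER = 8 is an ASSUMPTION (an 8-GPU H100 server per chassis).

GPUS_PER_CLUSTER = 24_576
CLUSTERS = 2
GPUS_PER_SERVER = 8  # assumption, not stated in the article
END_2024_TARGET = 350_000


def cluster_summary():
    """Return (total GPUs, servers per cluster, fraction of end-2024 target)."""
    total = GPUS_PER_CLUSTER * CLUSTERS
    servers_per_cluster = GPUS_PER_CLUSTER // GPUS_PER_SERVER
    share_of_target = total / END_2024_TARGET
    return total, servers_per_cluster, share_of_target


if __name__ == "__main__":
    total, servers, share = cluster_summary()
    print(f"Total GPUs: {total:,}")                     # Total GPUs: 49,152
    print(f"Servers per cluster: {servers:,}")          # Servers per cluster: 3,072
    print(f"Share of end-2024 target: {share:.1%}")     # Share of end-2024 target: 14.0%
```

Under these assumptions, the two clusters amount to roughly one seventh of the GPU fleet Meta plans to have in place by the end of 2024.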

A key part of Meta’s storage infrastructure is a parallel network file system (NFS) deployment co-developed with Hammerspace. It lets engineers debug jobs interactively across thousands of GPUs, because code changes become immediately accessible to every node in the environment, which supports rapid code iteration and data access at scale.

Both storage deployments, Tectonic-backed and Hammerspace-backed, run on Meta’s YV3 Sierra Point server platform, upgraded with high-capacity E1.S SSDs and configured to balance throughput, rack efficiency, and fault tolerance.

Looking ahead, Meta plans to grow its infrastructure to include 350,000 Nvidia H100 GPUs by the end of 2024, underscoring its commitment to AI development at global scale.

Conclusion:

Meta’s collaboration with Hammerspace marks a significant step forward for AI infrastructure. With plans to scale up to 350,000 Nvidia H100 GPUs by the end of 2024, Meta is poised to become a dominant force in AI, setting new standards for innovation and accessibility. The partnership also underscores Meta’s drive to push the boundaries of technology and points to lucrative opportunities for AI market stakeholders as demand for advanced infrastructure solutions grows.