TL;DR:

- Meta Research introduces System 2 Attention (S2A), a technique that regenerates an LLM’s input context before the model answers

- S2A addresses LLM reasoning errors by reducing reliance on irrelevant context

- It uses an instruction-tuned LLM to control where attention is focused

- S2A improves factuality, objectivity, and math problem-solving

- Variants of S2A were explored but found to be less effective

- Challenges include residual susceptibility to irrelevant context and increased computational demands

Main AI News:

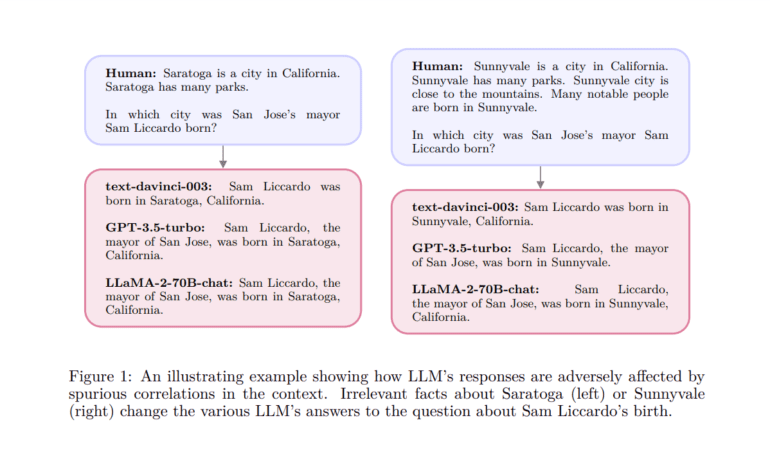

In the ever-evolving landscape of Large Language Models (LLMs), one recurring challenge has been their occasional missteps in reasoning, often stemming from an overemphasis on irrelevant context or a tendency to echo inaccuracies in the input text. To combat these issues, researchers have previously explored strategies such as adding more supervised training data or applying reinforcement learning. These strategies, however, leave the underlying cause untouched: a limitation within the transformer architecture itself, particularly its attention mechanism.

The conventional soft attention used by transformers tends to assign weight to large portions of the input text, including portions of little relevance. Furthermore, partly because of how it is trained, it focuses excessively on repeated tokens, leading to the pitfalls described above. In response, a team of researchers at Meta has introduced an approach known as System 2 Attention (S2A). This technique uses an instruction-tuned LLM to identify and extract the most pertinent segments of the input context, curbing the influence of extraneous information. A noteworthy benefit of S2A is that it allows deliberate control over the LLM’s attention, akin to how humans manage their focus.
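The behaviour of soft attention described above can be illustrated with a toy calculation. The sketch below is not taken from the S2A work: the tokens and scores are invented for illustration, and `softmax` is a plain-Python helper written for this example. It only shows that a softmax gives every token a strictly positive weight, so text assumed to be irrelevant still receives some share of attention.

```python
import math

def softmax(scores):
    """Convert raw attention scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical query-key scores for six context tokens. The values are
# invented for illustration and do not come from a trained model.
tokens = ["Paris", "is", "the", "capital", "weather", "nice"]
scores = [4.0, 0.5, 0.5, 3.0, 1.0, 1.0]

weights = softmax(scores)
for token, weight in zip(tokens, weights):
    print(f"{token:>8}: {weight:.3f}")

# Share of attention landing on the tokens we assume to be irrelevant.
irrelevant = {"weather", "nice"}
irrelevant_mass = sum(w for t, w in zip(tokens, weights) if t in irrelevant)
print(f"irrelevant mass: {irrelevant_mass:.3f}")
```

Because the exponential is never zero, low scores shrink a token’s weight but cannot remove it. S2A sidesteps this by removing the irrelevant text from the context altogether.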

The attention mechanism in a transformer picks up correlations within the text, which improves the model’s ability to predict the next word. However, it also makes the model vulnerable to misleading cues in the context. Each time a word is repeated, the probability that it appears again rises, creating a self-reinforcing pattern that causes the model to fixate on specific themes. S2A addresses this in two steps: it first has the LLM regenerate the context with the superfluous segments removed, and then uses this regenerated context, rather than the original input, to produce the final output.
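The two-step flow described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the researchers’ implementation: the prompt wording, the function `s2a_answer`, and the stand-in model `toy_llm` are all hypothetical, and any instruction-tuned model exposed as a text-in, text-out callable could take the place of `toy_llm`.

```python
from typing import Callable

# Hypothetical prompt wording; the researchers' exact prompts are not reproduced here.
REWRITE_PROMPT = (
    "Rewrite the text below so that it keeps only the parts that are relevant "
    "and unbiased for answering the question it contains. Do not answer it.\n\n"
    "Text:\n{context}\n\nRewritten text:"
)
ANSWER_PROMPT = "{context}\n\nAnswer the question above."

def s2a_answer(context: str, llm: Callable[[str], str]) -> str:
    """Two-step sketch: regenerate the context, then answer from it alone."""
    regenerated = llm(REWRITE_PROMPT.format(context=context))
    # The original context is deliberately not passed to the second call.
    return llm(ANSWER_PROMPT.format(context=regenerated))

def toy_llm(prompt: str) -> str:
    """Stand-in for a real instruction-tuned model, used only to run the sketch."""
    if prompt.startswith("Rewrite"):
        text = prompt.split("Text:\n", 1)[1].rsplit("\n\nRewritten text:", 1)[0]
        # Pretend the model drops sentences that state an opinion.
        kept = [s for s in text.split(". ") if "I think" not in s]
        return ". ".join(kept)
    return f"[answer based on: {prompt.splitlines()[0]}]"

context = (
    "I think the answer is 7. Mary has 3 apples and buys 2 more. "
    "How many apples does Mary have?"
)
result = s2a_answer(context, toy_llm)
print(result)
```

The sketch makes two model calls per query instead of one, which is where the extra computational cost of the approach comes from.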

Across a range of experiments, the research team reports the following results:

• S2A notably elevates the model’s factuality in response to opinionated queries.

• In long-form content generation, S2A significantly enhances objectivity, displaying resilience against undue influence from subjective viewpoints.

• Moreover, S2A improves the model’s performance on math word problems that include irrelevant sentences.

The researchers also explored several variants of the S2A method, such as instructing the model to emphasize relevance rather than irrelevance, or retaining the original context alongside the version with unnecessary elements removed. In most experiments, these alternatives failed to match the effectiveness of the original method.

While S2A reduces the effect of irrelevant information, it is not entirely immune to it. It also requires more computation than standard LLM generation, because the context must be regenerated before the answer is produced. The researchers suggest that speedup techniques could lower this cost, a subject they intend to explore further.

In conclusion, S2A helps prevent LLMs from fixating on inconsequential parts of the text, improving their handling of opinionated prompts and of math problems that contain irrelevant content. The work on improving LLM reasoning continues, with alternative approaches still to be explored.

Conclusion:

The introduction of System 2 Attention (S2A) by Meta Research is a notable advance for Large Language Models (LLMs). By reducing LLMs’ susceptibility to irrelevant context, S2A could improve the reliability of AI-driven language products. Its gains in factuality, objectivity, and math problem-solving make it potentially useful across industries, although its residual susceptibility to irrelevant context and its added computational cost should be addressed before wider adoption.