TL;DR:

- Meta introduces Audiocraft, a framework for generating high-quality, realistic audio and music using generative AI.

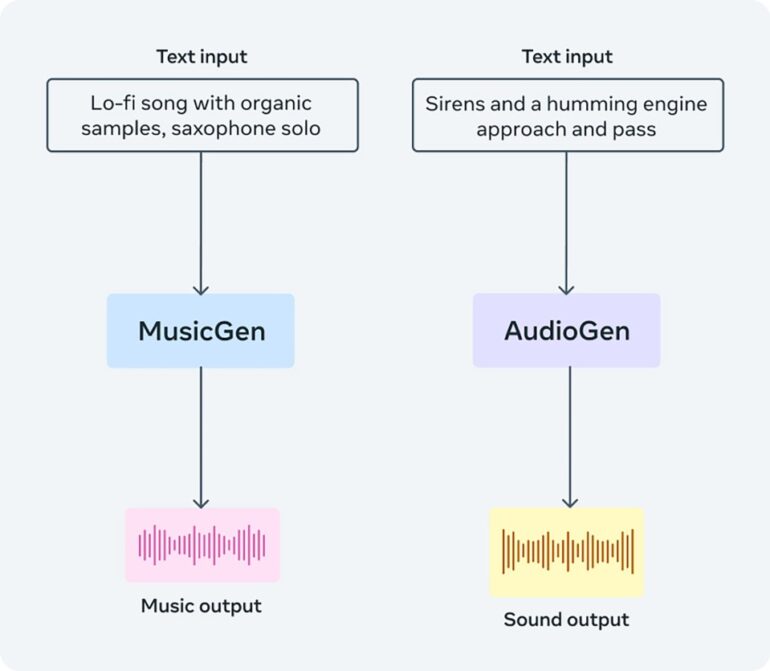

- The framework includes three models: MusicGen, AudioGen, and EnCodec.

- Meta has released MusicGen's training code, letting users train the model on their own music data, which raises ethical concerns.

- AudioGen generates environmental sounds and sound effects based on text descriptions of acoustic scenes.

- EnCodec improves audio generation with higher fidelity and efficient compression.

- Meta emphasizes transparency and ease of use, while acknowledging potential misuse and biases.

Main AI News:

The horizon of generative AI is quickly expanding beyond text and images into music and sound. Today, Meta, a tech giant known for its AI ventures, introduced Audiocraft, a framework that generates high-quality, realistic audio and music from short text descriptions, or prompts. Building on MusicGen, the AI-powered music generator it released earlier this year, Meta claims significant advances in the quality of AI-generated sounds, ranging from dogs barking and cars honking to footsteps on wooden floors.

In a blog post shared with TechCrunch, Meta sheds light on the innovative design of the Audiocraft framework. The primary goal was to simplify the use of generative models for audio compared to prior efforts like Riffusion, Dance Diffusion, and OpenAI’s Jukebox. By providing a collection of sound and music generators along with compression algorithms, Audiocraft enables seamless creation and encoding of songs and audio without the need to switch between various codebases.
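The "one codebase" idea can be sketched in a few lines. This is a minimal sketch, not Meta's reference usage: it assumes the open-source `audiocraft` Python package, whose `MusicGen` and `AudioGen` classes expose `get_pretrained`, `set_generation_params`, and `generate`. The helper names `load_pretrained` and `generate_clips`, the `TextToAudioModel` protocol, and the choice of checkpoints are illustrative assumptions.

```python
from typing import Any, List, Protocol


class TextToAudioModel(Protocol):
    """The two calls this sketch relies on (hypothetical protocol name)."""

    def set_generation_params(self, **kwargs: Any) -> None: ...

    def generate(self, descriptions: List[str]) -> Any: ...


# Assumed checkpoint choices; other sizes are published as well.
_CHECKPOINTS = {
    "music": ("MusicGen", "facebook/musicgen-small"),
    "sound": ("AudioGen", "facebook/audiogen-medium"),
}


def load_pretrained(kind: str) -> TextToAudioModel:
    """Load a music or sound-effect generator from the same package."""
    if kind not in _CHECKPOINTS:
        raise ValueError(f"unknown model kind: {kind!r}")
    # Imported lazily: requires `audiocraft` to be installed and
    # downloads model weights on first use.
    import audiocraft.models as models

    class_name, checkpoint = _CHECKPOINTS[kind]
    return getattr(models, class_name).get_pretrained(checkpoint)


def generate_clips(model: TextToAudioModel, prompts: List[str],
                   seconds: float = 5.0) -> Any:
    """Generate one clip per text prompt, each `seconds` long."""
    model.set_generation_params(duration=seconds)
    return model.generate(prompts)
```

With the package installed, `generate_clips(load_pretrained("sound"), ["footsteps on a wooden floor"])` and `generate_clips(load_pretrained("music"), ["lo-fi piano loop"])` would go through the same two calls, which is the convenience the framework is aiming for.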

Audiocraft comprises three powerful generative AI models: MusicGen, AudioGen, and EnCodec.

MusicGen, though not new, has seen a significant update: Meta has released its training code, allowing users to train the model on their own music datasets. This raises ethical and legal concerns, because MusicGen learns from existing music in order to produce similar-sounding output, a fact that some artists and generative AI users might find uncomfortable.

In recent times, homemade tracks that use generative AI to imitate familiar sounds have gone viral, raising questions about authenticity and possible copyright infringement. While Meta clarifies that the pretrained version of MusicGen was trained on a mixture of “Meta-owned and specifically licensed music” from various sources, the potential for misuse remains.

AudioGen, another key component of Audiocraft, focuses on generating environmental sounds and sound effects rather than music and melodies. Unlike the diffusion-based models behind most modern image generators, which gradually subtract noise from starting data, AudioGen is an autoregressive model: it predicts a sequence of compressed audio tokens one step at a time, conditioned on a text description of an acoustic scene, to create audio with realistic recording conditions and complex scene content.

Meta acknowledges the potential for misuse, such as deepfaking a person’s voice, and the ethical questions it raises. Despite this, Meta does not impose stringent restrictions on the use of Audiocraft or its training code.

The third model, EnCodec, is a neural audio codec that compresses audio and reconstructs it; the version in Audiocraft improves on a previous Meta release. By modeling audio sequences more efficiently and capturing different levels of information in the audio waveforms it is trained on, EnCodec helps produce novel audio with high fidelity.
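One common way for a neural codec to capture "levels of information" is residual quantization: a coarse codebook encodes the bulk of the signal, and each later codebook encodes what the previous ones missed. The toy below illustrates only that general idea. It quantizes single numbers instead of learned vectors, and the `CODEBOOKS` values and function names are made up for the example; it is not Meta's implementation.

```python
from typing import List, Sequence

# Three hypothetical codebooks, from coarse to fine.
CODEBOOKS: List[List[float]] = [
    [-0.75, -0.25, 0.25, 0.75],
    [-0.1875, -0.0625, 0.0625, 0.1875],
    [-0.046875, -0.015625, 0.015625, 0.046875],
]


def nearest(codebook: Sequence[float], x: float) -> int:
    """Index of the codebook entry closest to x."""
    return min(range(len(codebook)), key=lambda i: abs(codebook[i] - x))


def rvq_encode(x: float, codebooks: Sequence[Sequence[float]]) -> List[int]:
    """Quantize x stage by stage, passing the leftover error to the next stage."""
    codes: List[int] = []
    residual = x
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        codes.append(idx)
        residual -= codebook[idx]
    return codes


def rvq_decode(codes: Sequence[int], codebooks: Sequence[Sequence[float]]) -> float:
    """Sum the selected entries; fewer codes give a coarser reconstruction."""
    return sum(cb[i] for cb, i in zip(codebooks, codes))


codes = rvq_encode(0.6, CODEBOOKS)
for stages in range(1, len(codes) + 1):
    approx = rvq_decode(codes[:stages], CODEBOOKS)
    print(stages, "stage(s): error", abs(approx - 0.6))
```

Each added stage shrinks the reconstruction error, which is the trade-off a codec of this kind exposes: fewer codes mean more compression, more codes mean higher fidelity.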

While Audiocraft promises many potential benefits, such as inspiring musicians and offering new ways to iterate on compositions, it is not without drawbacks and potential legal implications. Meta says it remains committed to exploring better controllability and performance improvements for generative audio models while addressing their biases and limitations. MusicGen's training data, for instance, is notably biased, which makes the model less effective for non-English descriptions and for non-Western musical styles and cultures.

Conclusion:

Meta’s Audiocraft presents a significant advancement in generative AI for music and sounds. The framework offers promising potential for inspiring musicians and aiding professionals in the music industry. However, ethical concerns surrounding training data and potential misuse must be addressed. As the market for AI-generated content grows, it is essential for companies and regulators to establish clear guidelines to ensure fair and responsible usage.