TL;DR:

- Meta released an AI tool called ImageBind.

- ImageBind predicts connections between data from different modalities.

- The tool links text, images, videos, audio, 3D depth, thermal (temperature), and motion data.

- It can generate fully realized scenes based on limited input.

- ImageBind mimics human learning by processing data from different modalities.

- The technology opens new doors in the accessibility space.

- It points to one of Meta’s core ambitions: VR, mixed reality, and the metaverse.

- Developers can explore the tool through Meta’s open-source code.

- ImageBind is a step toward human-centric AI models.

- The tool has the potential to revolutionize various fields, from entertainment and gaming to healthcare and accessibility.

Main AI News:

Meta has released ImageBind, an AI tool that predicts connections between data in a way Meta compares to how humans perceive or imagine an environment. Image generators already on the market, such as Midjourney, Stable Diffusion, and DALL-E 2, create visual scenes from text descriptions alone. ImageBind sets itself apart by linking not only text and images but also video, audio, 3D depth, thermal (temperature), and motion data.

ImageBind’s core idea is a single joint embedding space shared by all of these modalities, which Meta says could be used to generate fully realized scenes and environments from input as simple as a text prompt, image, or audio recording. Traditional AI systems typically learn a separate embedding for each modality; ImageBind builds one embedding space across multiple modalities without training on every possible combination of them. As a result, the technology could build complex environments from limited chunks of data, paving the way for more sophisticated and comprehensive AI models.
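To make the idea of a joint embedding space concrete, here is a minimal Python sketch. It is not ImageBind’s code or API: the embedding vectors and file names are hypothetical and made up for illustration, and a real model would produce much larger vectors with trained per-modality encoders. What it shows is that once every modality maps into the same vector space, a single similarity measure (here, cosine similarity) can rank audio clips against a text query, or images against an audio clip, without a separate model for each pair of modalities.

```python
import math

# Hypothetical 4-dimensional embeddings, made up for illustration only.
# A real joint-embedding model would compute these from raw text, audio,
# and images using its own encoders; none of these values come from ImageBind.
EMBEDDINGS = {
    ("text", "a dog barking"): [0.9, 0.1, 0.0, 0.2],
    ("audio", "bark.wav"): [0.8, 0.2, 0.1, 0.1],
    ("audio", "waves.wav"): [0.1, 0.9, 0.3, 0.0],
    ("image", "beach.jpg"): [0.0, 0.8, 0.5, 0.1],
    ("image", "dog.jpg"): [0.85, 0.05, 0.1, 0.3],
}


def normalize(v):
    """Scale a vector to unit length so only its direction matters."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine(a, b):
    """Cosine similarity: dot product of the two unit-length vectors."""
    a, b = normalize(a), normalize(b)
    return sum(x * y for x, y in zip(a, b))


def retrieve(query_key, target_modality):
    """Rank every item of target_modality by similarity to the query.

    Because all modalities share one space, the same function works for
    text->audio, audio->image, image->text, and so on.
    """
    query = EMBEDDINGS[query_key]
    candidates = [
        (name, cosine(query, vec))
        for (modality, name), vec in EMBEDDINGS.items()
        if modality == target_modality
    ]
    return sorted(candidates, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Text query -> ranked audio clips
    for name, score in retrieve(("text", "a dog barking"), "audio"):
        print(f"{name}: {score:.3f}")
    # Audio query -> ranked images
    for name, score in retrieve(("audio", "waves.wav"), "image"):
        print(f"{name}: {score:.3f}")
```

With these made-up vectors, the text query ranks `bark.wav` above `waves.wav`, and the ocean-waves clip ranks `beach.jpg` above `dog.jpg`. The design choice being illustrated is that cross-modal search reduces to nearest-neighbor lookup in one shared space.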

Meta frames ImageBind as closer to how humans learn. Humans and other animals evolved to combine sensory data for a genetic advantage: survival and passing on their DNA. ImageBind likewise draws on several modalities at once, which is what lets it piece together a fuller picture of a scene from limited input.

ImageBind’s potential extends beyond entertainment and creativity into accessibility. For instance, it could generate real-time multimedia descriptions to help people with vision or hearing disabilities better perceive their immediate surroundings.

ImageBind also points to one of Meta’s core ambitions: VR, mixed reality, and the metaverse. Imagine a future headset that can construct fully realized 3D scenes (with sound, movement, etc.) on the fly. Game developers could use the technology to take much of the legwork out of their design process, and content creators could make immersive videos with realistic soundscapes and movement from only text, image, or audio input.

Meta views this technology as a step toward human-centric AI models. The company says that linking more senses, such as touch, speech, smell, and brain fMRI signals, would enable richer and more comprehensive AI models, and it expects ImageBind to eventually expand beyond its current six “senses” toward the full range of human senses.

Developers interested in exploring the tool can start with Meta’s open-source code. The technology could support more sophisticated multimodal AI models across a range of fields, from entertainment and gaming to healthcare and accessibility.

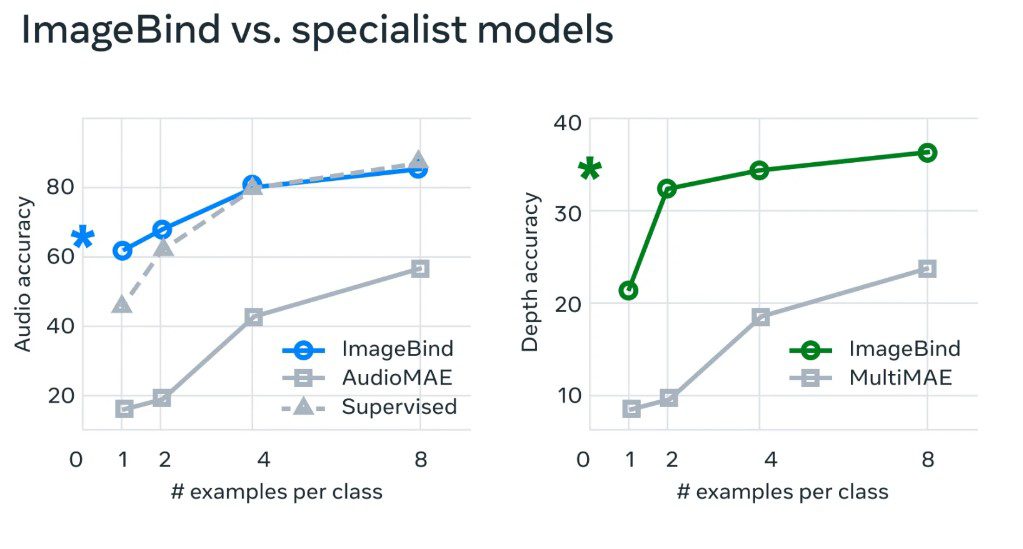

Meta’s graph showing ImageBind outperforming single-modality models in accuracy. Source: Meta

Conclusion:

Meta’s release of ImageBind is a significant step forward for the AI and machine learning market. The tool’s ability to predict connections between data from different modalities, and potentially to generate fully realized scenes from limited input, presents opportunities for a wide range of industries, from entertainment and gaming to healthcare and accessibility.

If ImageBind transforms these sectors, it may draw more investment and interest in AI technologies, driving market growth and creating new business opportunities for companies in the field. It is a development that could shape the future of AI and machine learning in the business world.