- Meta Platforms unveils the MTIA v2 chip, a step toward independence from third-party AI hardware.

- The chip targets AI inference and lays the groundwork for future iterations capable of AI training.

- Developed by a Meta engineering team, MTIA v2 balances compute, memory bandwidth, and memory capacity for optimal performance.

- Specifications include 5 nm fabrication, a 68.8% clock speed increase, and significantly more on-chip SRAM.

- LPDDR5 memory capacity doubles to 128 GB, and memory speed rises by 16.4%.

- MTIA v2 retains the 8 x 8 PE grid layout while substantially increasing SRAM capacity and bandwidth.

- A PCI-Express 5.0 controller and denser chassis configurations improve efficiency and scalability.

- A comparison with Nvidia’s accelerators highlights MTIA v2’s strong performance per watt.

- Support for the Triton language and compiler underscores Meta’s commitment to fostering innovation.

Main AI News:

Meta Platforms, formerly known as Facebook, has been at the forefront of innovation in the metaverse and AI. Its latest effort, the MTIA v2 chip, is a significant step toward reducing its reliance on costly GPU accelerators. The move underscores Meta’s commitment to building AI infrastructure tailored to its own software requirements.

The recently unveiled MTIA v2 chip represents a substantial leap in capability over its predecessor. It remains focused on AI inference and cannot yet handle AI training, a capability Meta aims to add in future iterations.

Designed by a team led by Eran Tal, Nicolaas Viljoen, and Joel Coburn, the MTIA v2 chip reflects Meta’s focus on balancing compute, memory bandwidth, and memory capacity. In their blog post, the three emphasized that this architectural balance is pivotal to delivering optimal performance for the ranking and recommendation models that power Meta’s suite of applications.
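Why balancing compute against memory bandwidth matters can be illustrated with the roofline model, a standard way to tell whether a workload is limited by arithmetic throughput or by how fast memory can feed it. This is a generic sketch, not Meta’s own methodology, and the peak-throughput and bandwidth values in it are assumptions chosen for illustration. Ranking and recommendation models tend to include embedding lookups with low arithmetic intensity, which pushes them toward the memory-bound side of the roofline.

```python
# Minimal roofline-model sketch. The peak-compute and bandwidth figures
# used below are illustrative assumptions, not official MTIA v2 specs.


def attainable_ops_per_s(peak_ops_per_s: float,
                         bandwidth_bytes_per_s: float,
                         intensity_ops_per_byte: float) -> float:
    """Return attainable throughput under the roofline model.

    A kernel is limited either by peak compute or by how fast memory can
    feed it (bandwidth x arithmetic intensity), whichever is lower.
    """
    memory_bound = bandwidth_bytes_per_s * intensity_ops_per_byte
    return min(peak_ops_per_s, memory_bound)


def ridge_point(peak_ops_per_s: float, bandwidth_bytes_per_s: float) -> float:
    """Arithmetic intensity (ops/byte) at which a kernel becomes compute-bound."""
    return peak_ops_per_s / bandwidth_bytes_per_s


if __name__ == "__main__":
    PEAK = 354e12        # assumed peak INT8 ops/s
    BANDWIDTH = 204.8e9  # assumed off-chip memory bandwidth, bytes/s

    for intensity in (1, 10, 100, 10_000):
        perf = attainable_ops_per_s(PEAK, BANDWIDTH, intensity)
        bound = "compute" if perf == PEAK else "memory"
        print(f"{intensity:>6} ops/byte -> {perf / 1e12:8.2f} TOPS ({bound}-bound)")
    print(f"ridge point: {ridge_point(PEAK, BANDWIDTH):.0f} ops/byte")
```

Kernels whose arithmetic intensity sits below the ridge point gain more from extra bandwidth or on-chip SRAM than from extra compute, which is why the three resources have to be sized together.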

The chip’s specifications reflect these advances. Fabricated on a 5-nanometer process, the MTIA v2 runs at a clock speed 68.8 percent higher than its predecessor’s and uses a significantly larger die. The extra die area made room for more on-chip SRAM, which keeps more data close to the processing elements and reduces slower trips to off-chip memory.
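A relative clock-speed figure such as 68.8 percent is simply (new − old) / old. The sketch below assumes the commonly reported clocks of 800 MHz for MTIA v1 and 1.35 GHz for MTIA v2; those two values do not appear in the article and should be treated as assumptions.

```python
# Sketch: how a relative clock-speed increase is computed.
# The 800 MHz (v1) and 1350 MHz (v2) clocks are assumed values based on
# commonly reported specs; substitute your own figures as needed.


def percent_increase(old: float, new: float) -> float:
    """Return the relative increase from old to new, in percent."""
    if old <= 0:
        raise ValueError("old value must be positive")
    return (new - old) / old * 100.0


if __name__ == "__main__":
    v1_clock_mhz = 800.0   # assumed MTIA v1 clock
    v2_clock_mhz = 1350.0  # assumed MTIA v2 clock
    gain = percent_increase(v1_clock_mhz, v2_clock_mhz)
    print(f"clock increase: {gain:.1f}%")
```

Under these assumed clocks the exact increase is 68.75 percent, which rounds to the 68.8 percent quoted in the article.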

The improvements extend beyond the chip itself. Meta has doubled the LPDDR5 memory capacity to 128 GB and increased memory speed by 16.4 percent; with the memory interface width unchanged, off-chip memory bandwidth rises by the same 16.4 percent. The larger capacity lets bigger models stay resident in memory, and the extra bandwidth feeds them faster.
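Peak DRAM bandwidth is the transfer rate multiplied by the bus width, so with a fixed bus width a faster memory speed raises bandwidth by the same proportion. In the sketch below the 256-bit bus and the 5500 and 6400 MT/s LPDDR5 data rates are assumptions chosen for illustration; the article quotes only the 16.4 percent speed increase.

```python
# Sketch: peak DRAM bandwidth = data rate (MT/s) x bus width (bytes).
# The 256-bit bus and the 5500 / 6400 MT/s LPDDR5 data rates are
# assumptions for illustration; they are not quoted in the article.


def peak_bandwidth_gb_s(data_rate_mt_s: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s (decimal) for a transfer rate and bus width."""
    bytes_per_transfer = bus_width_bits / 8
    return data_rate_mt_s * 1e6 * bytes_per_transfer / 1e9


if __name__ == "__main__":
    BUS_BITS = 256  # assumed, held constant across both generations
    v1 = peak_bandwidth_gb_s(5500, BUS_BITS)
    v2 = peak_bandwidth_gb_s(6400, BUS_BITS)
    gain = (v2 - v1) / v1 * 100
    print(f"v1: {v1:.1f} GB/s, v2: {v2:.1f} GB/s, gain: {gain:.1f}%")
```

Because the bus width cancels out of the ratio, the percentage gain depends only on the two data rates; the absolute GB/s values depend on the assumed width.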

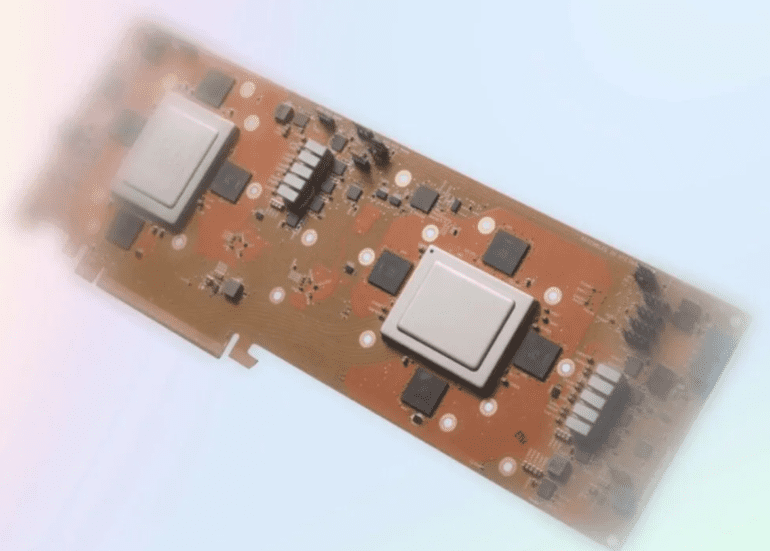

The MTIA v2 retains the familiar 8 x 8 grid of processing elements (PEs), a configuration optimized for AI inference. Each PE gets substantially more dedicated local SRAM, and Meta has also increased the bandwidth of both the local and the shared SRAM, so the PEs can be fed with data faster.
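The grid arithmetic is straightforward: an 8 x 8 layout gives 64 PEs, and aggregate local SRAM is 64 times the per-PE allocation. The sketch below models the grid as a small data structure; the per-PE SRAM sizes (128 KiB for v1, 384 KiB for v2) are assumed values for illustration and do not come from the article.

```python
# Sketch of the 8 x 8 processing-element (PE) grid as a simple data structure.
# Per-PE local SRAM sizes (128 KiB for v1, 384 KiB for v2) are assumed
# values for illustration; check Meta's published specs before relying on them.
from dataclasses import dataclass
from typing import Iterator, Tuple


@dataclass(frozen=True)
class PEGrid:
    rows: int
    cols: int
    local_sram_kib_per_pe: int

    @property
    def num_pes(self) -> int:
        return self.rows * self.cols

    @property
    def total_local_sram_mib(self) -> float:
        # Aggregate local SRAM across all PEs, in MiB.
        return self.num_pes * self.local_sram_kib_per_pe / 1024

    def coordinates(self) -> Iterator[Tuple[int, int]]:
        """Yield (row, col) for every PE in row-major order."""
        for r in range(self.rows):
            for c in range(self.cols):
                yield (r, c)


if __name__ == "__main__":
    v1 = PEGrid(8, 8, 128)  # assumed v1 per-PE SRAM
    v2 = PEGrid(8, 8, 384)  # assumed v2 per-PE SRAM
    print(v2.num_pes, "PEs")
    print(f"local SRAM: v1 {v1.total_local_sram_mib} MiB, "
          f"v2 {v2.total_local_sram_mib} MiB")
```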

On the host side, the MTIA v2 integrates a PCI-Express 5.0 x8 controller, which doubles the per-lane transfer rate of the PCI-Express 4.0 x8 link used by its predecessor. Meta places two chips on each card, with their two x8 links sharing a single physical PCI-Express 5.0 x16 connector, a design that favors density and scalability.
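The bandwidth gain can be estimated from the PCIe signaling rates: PCIe 4.0 runs at 16 GT/s per lane and PCIe 5.0 at 32 GT/s, both with 128b/130b encoding. The sketch below computes raw one-direction link bandwidth and ignores protocol overhead (packet headers, flow control), so real throughput is lower. The helper names are hypothetical.

```python
# Sketch: raw one-direction PCIe link bandwidth from per-lane transfer rate,
# lane count, and line encoding. PCIe 4.0 runs at 16 GT/s and PCIe 5.0 at
# 32 GT/s per lane, both with 128b/130b encoding. Protocol overhead is
# ignored, so achievable throughput is lower than these figures.

GT_PER_S = {4: 16.0, 5: 32.0}
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gb_s(gen: int, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s for a PCIe link."""
    return GT_PER_S[gen] * lanes * ENCODING_EFFICIENCY / 8


def lanes_used(chips_per_card: int, lanes_per_chip: int) -> int:
    """Total electrical lanes consumed on the card's edge connector."""
    return chips_per_card * lanes_per_chip


if __name__ == "__main__":
    gen4_x8 = link_bandwidth_gb_s(4, 8)
    gen5_x8 = link_bandwidth_gb_s(5, 8)
    print(f"PCIe 4.0 x8: {gen4_x8:.2f} GB/s, PCIe 5.0 x8: {gen5_x8:.2f} GB/s")
    # Two chips per card, each on an x8 link, together fill an x16 connector.
    print(f"lanes per card: {lanes_used(2, 8)}")
    print(f"per card (2 x Gen5 x8): {2 * gen5_x8:.2f} GB/s")
```

Splitting one x16 connector into two x8 links keeps each chip’s host bandwidth at the full Gen5 x8 rate while fitting two accelerators into a single slot.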

Meta’s deployment strategy consolidates many MTIA v2 cards into each chassis to maximize efficiency and performance per rack. PCI-Express 5.0 switches interconnect the chips, and Meta aims to deliver high aggregate INT8 inference performance from every rack.
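Rack-level throughput is the product of the packaging hierarchy: chips per card, cards per chassis, chassis per rack, and per-chip throughput. Only the two-chips-per-card figure comes from the article; the other three inputs below are hypothetical values chosen to show the calculation.

```python
# Sketch: aggregate peak INT8 throughput for a rack of MTIA v2 cards.
# Only "two chips per card" comes from the article; per-chip TOPS,
# cards per chassis, and chassis per rack are hypothetical inputs.
from dataclasses import dataclass


@dataclass(frozen=True)
class RackConfig:
    chips_per_card: int
    cards_per_chassis: int
    chassis_per_rack: int
    int8_tops_per_chip: float

    @property
    def chips_per_rack(self) -> int:
        return self.chips_per_card * self.cards_per_chassis * self.chassis_per_rack

    @property
    def int8_tops_per_rack(self) -> float:
        # Peak figure: assumes every chip runs at full rate simultaneously.
        return self.chips_per_rack * self.int8_tops_per_chip


if __name__ == "__main__":
    rack = RackConfig(
        chips_per_card=2,        # from the article
        cards_per_chassis=12,    # hypothetical
        chassis_per_rack=3,      # hypothetical
        int8_tops_per_chip=354,  # hypothetical dense INT8 figure
    )
    print(f"{rack.chips_per_rack} chips, "
          f"~{rack.int8_tops_per_rack:,.0f} peak INT8 TOPS per rack")
```

This is an upper bound; delivered throughput depends on utilization, model partitioning, and traffic across the PCI-Express switches.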

Compared with Nvidia’s accelerators, the MTIA v2 stands out on performance per watt. Nvidia’s GPUs deliver far higher absolute throughput, but the MTIA v2 is a cost-effective alternative for Meta’s inference workloads, running at a fraction of the power consumption.
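Performance per watt and absolute performance can rank the same chips differently: a smaller accelerator can lose on raw throughput yet win on efficiency. The sketch below makes that distinction explicit. All figures are hypothetical placeholders and are not real specifications for MTIA v2 or any Nvidia product.

```python
# Sketch: performance per watt vs. absolute performance.
# All accelerator figures here are hypothetical placeholders, not real
# specs for MTIA v2 or any Nvidia product.
from typing import Dict, NamedTuple


class Accelerator(NamedTuple):
    name: str
    tops: float   # peak throughput, e.g. INT8 TOPS
    watts: float  # power draw

    @property
    def tops_per_watt(self) -> float:
        return self.tops / self.watts


def compare(a: Accelerator, b: Accelerator) -> Dict[str, str]:
    """Report which accelerator wins on raw throughput and on efficiency."""
    return {
        "faster": a.name if a.tops >= b.tops else b.name,
        "more_efficient": a.name if a.tops_per_watt >= b.tops_per_watt else b.name,
    }


if __name__ == "__main__":
    small = Accelerator("chip_a", tops=300.0, watts=100.0)  # hypothetical
    big = Accelerator("chip_b", tops=2000.0, watts=700.0)   # hypothetical
    for acc in (small, big):
        print(f"{acc.name}: {acc.tops_per_watt:.2f} TOPS/W")
    print(compare(small, big))
```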

Meta’s software stack also supports Triton, the open-source, Python-based language and compiler for writing high-performance kernels, so developers can target the chip without hand-writing low-level code. This compatibility underscores Meta’s commitment to fostering an ecosystem conducive to innovation and collaboration.

Conclusion:

Meta’s introduction of the MTIA v2 chip marks a significant milestone in AI hardware. By prioritizing performance, efficiency, and scalability, Meta gains a compelling in-house alternative to existing GPU-based solutions for its inference workloads. The chip’s capabilities lay the groundwork for future AI infrastructure in which Meta plays a key role in driving innovation.