TL;DR:

- Metomic introduces Metomic for ChatGPT, a browser plugin enhancing data security on OpenAI’s ChatGPT platform.

- Reports show a 60% increase in sensitive data uploads to ChatGPT, raising concerns about data privacy and compliance.

- Accidental data sharing includes source code leaks and exposure of confidential information.

- Metomic’s browser extension offers real-time monitoring, alerting security teams to sensitive data uploads.

- It ships with 150 pre-built data classifiers and lets businesses add custom classifiers for their most vulnerable information.

- The tool emphasizes employee education, helping staff keep data safe while they use generative AI tools.

- Metomic’s holistic data security extends across SaaS, GenAI, and cloud applications.

- This proactive data security approach addresses evolving workplace challenges.

Main AI News:

Data security company Metomic has unveiled an innovative solution, Metomic for ChatGPT, aimed at preventing employees from accidentally sharing sensitive data on OpenAI’s ChatGPT platform. This browser plugin empowers businesses to harness the benefits of generative AI technology while ensuring the safety of their most confidential information. In this article, we delve into Metomic’s ChatGPT data security integration and its potential implications for businesses.

The Rising Challenge

Recent reports point to a 60 percent increase in sensitive data uploads to ChatGPT between March and April of the previous year. The surge alarmed IT and security leaders and prompted them to look for ways to address the problem.

Rich Vibert, CEO of Metomic, noted, “Management at more and more companies, particularly at the CEO level, are waking up and thinking, what are my employees putting into these tools?” The accidental sharing of customer Personally Identifiable Information (PII), company secrets, or passwords on third-party tools poses significant privacy and compliance risks, including GDPR violations.

Understanding the Breach Patterns: Vibert clarified that most security breaches involving ChatGPT and similar AI tools are non-malicious and primarily fall into two categories:

- Sharing of Source Code: Employees have inadvertently shared confidential source code, potentially leaking corporate secrets and intellectual property to competitors.

- Sharing of Confidential Information: Employees also upload confidential company data, such as earnings, salaries, and PII, into AI models that may be trained on it. This is especially concerning when such tools are accessible to all employees.

Proactive Data Security

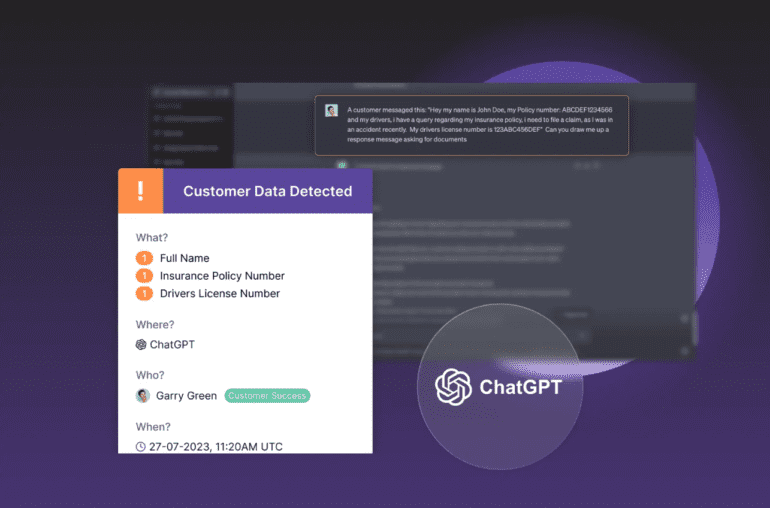

Metomic’s ChatGPT integration takes a proactive approach to data security. Running inside the browser, it monitors employees’ use of OpenAI’s ChatGPT platform and scans uploaded data in real time, so security teams are alerted when employees upload sensitive information such as customer PII, security credentials, and intellectual property.

The browser extension comes equipped with 150 pre-built data classifiers to detect common critical data risks, and businesses can also create custom classifiers to identify their most vulnerable information.
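To make the idea of data classifiers more concrete, here is a minimal sketch of pattern-based scanning in Python. It assumes each classifier is simply a named regular expression; the `Classifier`, `Finding`, and `scan` names, the patterns, and the `PRJ-` project-code format are hypothetical illustrations. They are not Metomic's API or detection logic, which the announcement does not describe.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Classifier:
    """Hypothetical classifier: a label plus a regular expression."""
    name: str
    pattern: re.Pattern


@dataclass(frozen=True)
class Finding:
    classifier: str  # which classifier fired
    match: str       # the sensitive value itself
    preview: str     # surrounding text, usable as a contextual preview in an alert


# Illustrative stand-ins for "pre-built" classifiers (not Metomic's rules).
BUILT_IN = [
    Classifier("email_address", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")),
    # Long-term AWS access key IDs start with "AKIA" plus 16 uppercase letters/digits.
    Classifier("aws_access_key_id", re.compile(r"\bAKIA[0-9A-Z]{16}\b")),
]


def scan(text: str, classifiers: List[Classifier], context: int = 15) -> List[Finding]:
    """Run every classifier over the text and collect matches with nearby context."""
    findings = []
    for c in classifiers:
        for m in c.pattern.finditer(text):
            start = max(0, m.start() - context)
            end = min(len(text), m.end() + context)
            findings.append(Finding(c.name, m.group(), text[start:end]))
    return findings


# A custom classifier for a hypothetical internal project-code format.
custom = Classifier("project_code", re.compile(r"\bPRJ-\d{4}\b"))

prompt = "Debug PRJ-1234 with key AKIAIOSFODNN7EXAMPLE, then email jane.doe@example.com"
for f in scan(prompt, BUILT_IN + [custom]):
    print(f"{f.classifier}: {f.preview!r}")
```

Regular expressions like these only catch well-structured values such as keys and email addresses; spotting salaries or pasted source code in free text generally calls for richer detection than this sketch.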

Vibert emphasized, “Our ChatGPT integration expands on our foundational value as a data security platform. Businesses gain all the advantages of ChatGPT while avoiding serious data vulnerabilities.”

Metomic for ChatGPT identifies critical risks in ChatGPT conversations in real time and shows contextual previews of the sensitive data being uploaded, giving businesses the visibility they need to safeguard it.

Education and Empowerment

As the rapid development of generative AI tools outpaces existing policies, Vibert stresses the importance of employee education and trust. Metomic’s solution offers real-time training that places control in employees’ hands, allowing them to use the tools while stripping out sensitive data before submission.
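Stripping sensitive data before submission could look something like the redaction pass below, sketched in Python under the assumption that detected values are swapped for placeholders before the prompt is sent. The `redact` function, the pattern labels, and the `[LABEL]` placeholder format are hypothetical; the source does not say how Metomic's extension removes data, and it may instead prompt the employee to edit the text themselves.

```python
import re

# Hypothetical patterns for illustration only; not Metomic's detection rules.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "AWS_KEY": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def redact(text):
    """Replace each detected value with a [LABEL] placeholder and list what was removed."""
    found = []
    for label, pattern in PATTERNS.items():
        # subn returns the new string and the number of replacements made.
        text, n = pattern.subn(f"[{label}]", text)
        found.extend([label] * n)
    return text, found


prompt = "Why does login fail for jane.doe@example.com with key AKIAIOSFODNN7EXAMPLE?"
clean, found = redact(prompt)
print(clean)  # Why does login fail for [EMAIL] with key [AWS_KEY]?
print(found)  # ['EMAIL', 'AWS_KEY']
```

Keeping a labeled placeholder, rather than deleting the value outright, leaves the question intelligible to ChatGPT while showing the employee what was removed.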

Vibert envisions a future where security alerts trigger workflow improvements, security training, and controlled access, ensuring safer tool usage across the organization.

Holistic Data Security

Metomic’s data security software extends beyond ChatGPT to cover SaaS, GenAI, and cloud applications. This comprehensive approach allows businesses to gain a complete understanding of how employees share sensitive data across their tech stack, encompassing tools like Slack, Microsoft Teams, Google Drive, and ChatGPT.

Conclusion:

Metomic’s ChatGPT data security solution addresses the growing concern of accidental data sharing on AI platforms. Its proactive approach fits the evolving landscape of workplace security challenges and gives businesses a way to protect their sensitive information. As adoption of generative AI tools continues to rise, measures like Metomic’s will be crucial for maintaining data integrity and regulatory compliance.