- Microsoft introduces GLAN (Generalized Instruction Tuning), a method for instruction-tuning Large Language Models (LLMs).

- GLAN tackles the challenge of making LLMs follow human instructions through instruction tuning, i.e., fine-tuning LLMs on examples of desired instruction-response pairs.

- It systematically generates large-scale instruction data across many subjects, levels, and disciplines from a curated taxonomy of human knowledge.

- GLAN is flexible, scalable, and task-agnostic, and it can be extended to new fields or skills without regenerating the entire dataset.

- Experiments show strong performance on coding, reasoning, academic exams, and general instruction following, without any task-specific training data.

Main AI News:

In Artificial Intelligence (AI), Large Language Models (LLMs) have made significant strides, particularly in text comprehension and generation. However, getting LLMs to follow human instructions well has proven challenging. LLMs are proficient at next-token prediction and at learning from a handful of in-context demonstrations, but this ability does not automatically translate into reliably following human instructions.

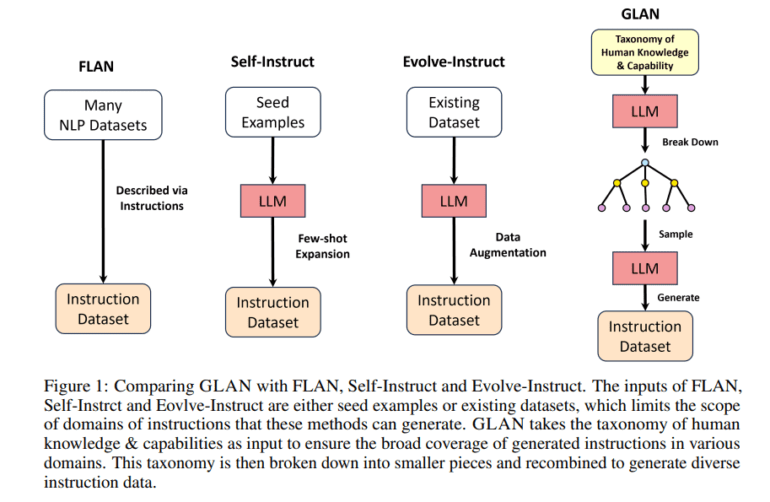

Enter instruction tuning, a method that fine-tunes LLMs on human-preferred instruction-response pairs. Current techniques typically draw these pairs from a limited pool of existing Natural Language Processing (NLP) datasets, or use self-instruct approaches that expand a small seed set with model-generated examples; both struggle to produce diverse data. Data augmentation methods such as Evol-Instruct help, but their output remains bounded by the initial dataset they start from.
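To make the idea of an instruction-response pair concrete, here is a minimal Python sketch of how one pair might be turned into a supervised fine-tuning example. The prompt template, the `InstructionPair` and `build_example` names, and the whitespace tokenization are hypothetical simplifications, not GLAN's actual format. Restricting the training loss to response tokens is a common instruction-tuning convention, assumed here rather than taken from the article.

```python
from dataclasses import dataclass

# Hypothetical prompt template for illustration; real recipes each define their own.
TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n"


@dataclass
class InstructionPair:
    instruction: str
    response: str


def build_example(pair: InstructionPair) -> tuple[list[str], list[int]]:
    """Return (tokens, loss_mask), where 1 marks tokens the model is trained to predict.

    Whitespace splitting stands in for a real subword tokenizer.
    Only response tokens are supervised (an assumed, common convention).
    """
    prompt_tokens = TEMPLATE.format(instruction=pair.instruction).split()
    response_tokens = pair.response.split()
    tokens = prompt_tokens + response_tokens
    loss_mask = [0] * len(prompt_tokens) + [1] * len(response_tokens)
    return tokens, loss_mask


pair = InstructionPair(
    instruction="Explain why the sky is blue in one sentence.",
    response="Air molecules scatter short blue wavelengths more than long red ones.",
)
tokens, loss_mask = build_example(pair)
print(len(tokens), sum(loss_mask))  # 24 11
```

The mask keeps the model from being trained to reproduce the instruction itself; only the reply contributes to the loss.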

Addressing these limitations head-on, Microsoft's research team introduces GLAN (Generalized Instruction Tuning), drawing inspiration from the organized structure of human education systems. Just as education spans many subjects, levels, and disciplines, GLAN systematically generates large-scale instruction data from a curated taxonomy of human knowledge.

GLAN first uses LLMs, with human validation, to decompose human knowledge into domains, sub-fields, and disciplines. Each discipline is then broken down into subjects, and each subject receives a syllabus listing the key concepts to be covered in every class session. Finally, GLAN uses these key concepts to generate instructions, mirroring how human educational systems are designed.
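The generation hierarchy described above can be sketched in Python as a chain of nested LLM calls. This is an illustrative sketch under stated assumptions, not Microsoft's implementation: the prompts, the `stub_llm` placeholder (which stands in for a real model and always returns two items), `generate_instructions`, and its `concepts_per_question` parameter are all hypothetical, and the human validation of the taxonomy is assumed to have already happened.

```python
import random
from typing import Callable

# Hypothetical LLM interface: prompt in, list of generated items out.
LLM = Callable[[str], list[str]]


def stub_llm(prompt: str) -> list[str]:
    """Hypothetical deterministic stand-in for a real model; always returns two items."""
    topic = prompt.split(":", 1)[1].strip()
    return [f"{topic} / part {i}" for i in (1, 2)]


def generate_instructions(disciplines: list[str], llm: LLM,
                          concepts_per_question: int = 2,
                          seed: int = 0) -> list[str]:
    """Walk discipline -> subjects -> syllabus sessions -> key concepts -> instructions."""
    rng = random.Random(seed)
    instructions: list[str] = []
    for discipline in disciplines:  # leaves of an (assumed human-validated) taxonomy
        for subject in llm(f"List subjects for: {discipline}"):
            for session in llm(f"Write syllabus class sessions for: {subject}"):
                concepts = llm(f"List key concepts in: {session}")
                # Sample a few key concepts and ask for instructions that combine them.
                k = min(concepts_per_question, len(concepts))
                picked = rng.sample(concepts, k)
                instructions.extend(llm(f"Write questions using: {'; '.join(picked)}"))
    return instructions


questions = generate_instructions(["Mathematics", "History"], stub_llm)
print(len(questions))  # 16 = 2 disciplines x 2 subjects x 2 sessions x 2 questions
```

Because every level fans out, the output grows multiplicatively with the taxonomy, which is one way to read the article's claim that the approach scales to massive volumes of instructions.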

Flexibility, scalability, and generality define GLAN. It scales to generating instructions at massive volume, and it is task-agnostic, spanning a wide range of disciplines rather than specific tasks. Building the input taxonomy takes minimal human effort, since it is produced by prompting an LLM and then validated by humans. GLAN also simplifies customization: incorporating a new field or skill does not require recreating the entire dataset.
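The customization property can be illustrated with a small sketch: if generated data is stored per taxonomy node, adding a node only triggers generation for that node. The dictionary-based taxonomy, `generate_for`, and `update_dataset` below are hypothetical placeholders chosen for illustration, not part of any published GLAN code.

```python
# Hypothetical taxonomy: field -> disciplines (the leaves where generation starts).
taxonomy: dict[str, list[str]] = {
    "Natural Sciences": ["Physics", "Chemistry"],
    "Humanities": ["History"],
}


def generate_for(discipline: str) -> list[str]:
    """Hypothetical placeholder for the full per-discipline generation pipeline."""
    return [f"Sample question about {discipline}"]


def update_dataset(taxonomy: dict[str, list[str]],
                   dataset: dict[str, list[str]]) -> list[str]:
    """Generate data only for disciplines not yet in the dataset; existing data is reused."""
    new = [d for ds in taxonomy.values() for d in ds if d not in dataset]
    for discipline in new:
        dataset[discipline] = generate_for(discipline)
    return new


dataset: dict[str, list[str]] = {}
print(update_dataset(taxonomy, dataset))  # ['Physics', 'Chemistry', 'History']

# Adding one node to the taxonomy triggers generation for that node alone.
taxonomy["Engineering"] = ["Robotics"]
print(update_dataset(taxonomy, dataset))  # ['Robotics']
```

Keying the dataset by taxonomy node is the design choice that makes the extension incremental: previously generated disciplines are never touched.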

Building on its comprehensive curriculum, GLAN produces instructions spanning a broad range of human knowledge and skills. Experiments, including fine-tuning Mistral models, show strong results across coding, logical and mathematical reasoning, academic exams, and general instruction following, all achieved without task-specific training data.

Conclusion:

Microsoft's GLAN marks a notable advance in instruction tuning for Large Language Models. Its flexibility, scalability, and task-agnostic design could make LLMs more efficient and adaptable at following human instructions, potentially reshaping AI-driven language understanding and generation across industries. Companies that adopt GLAN can expect improved language model performance and more effective human interaction, driving innovation and productivity in the market.