- Researchers from Xiamen University, Tsinghua University, and Microsoft introduced RHO-1, a language model that changes how training tokens are used.

- RHO-1 employs Selective Language Modeling (SLM) to prioritize ‘high-utility’ tokens, optimizing training efficiency.

- The work challenges traditional approaches that treat all tokens equally, pointing to inefficiencies in uniform training.

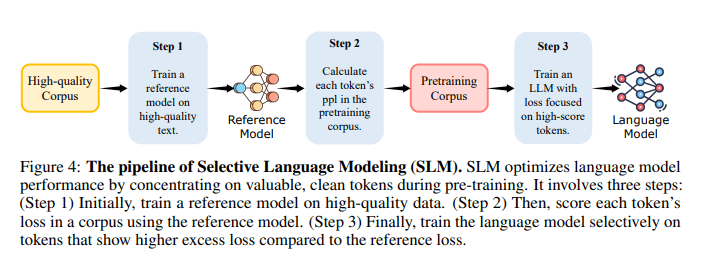

- The RHO-1 methodology trains a reference model on a high-quality dataset to assess token utility, then trains selectively on high-utility tokens.

- SLM in RHO-1 yields an absolute gain of up to 30% in few-shot accuracy across nine mathematical tasks.

- RHO-1 models trained with SLM reach baseline performance up to ten times faster than models trained using conventional methods.

Main AI News:

Progress in artificial intelligence, especially in language processing, has come largely from scaling model parameters and dataset sizes. Language model training has traditionally applied the next-token prediction loss uniformly to every training token. However, the assumption that every token contributes equally to learning is under growing scrutiny, which points to significant inefficiencies in uniform training.

Earlier research has tried to make language model training more efficient through selective data use and curriculum learning. Data pipelines have applied heuristic filters to improve data quality, while Masked Language Modeling (MLM), the objective used by BERT, computes the loss on only a masked subset of tokens. Other studies have examined token-level dynamics, distinguishing between ‘easy’ and ‘hard’ tokens that shape learning trajectories. Together, these insights have paved the way for training approaches that target the tokens that matter most.

Introducing RHO-1: A Paradigm Shift in Language Model Training

Researchers from Xiamen University, Tsinghua University, and Microsoft have introduced RHO-1, a model trained with Selective Language Modeling (SLM). Unlike traditional approaches that treat all tokens equally, RHO-1 identifies and prioritizes ‘high-utility’ tokens, improving training efficiency and model performance while reducing compute.

The RHO-1 methodology begins by training a reference model on a high-quality dataset, which is then used to evaluate the utility of each token in the training corpus. Only the tokens with the highest utility contribute to the subsequent training, which concentrates compute on the tokens that matter. The method was applied to the OpenWebMath corpus of 15 billion tokens, which served as the basis for evaluating RHO-1’s efficiency.
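The selection step described above can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' implementation. The article does not say how token utility is scored, so the code assumes that utility is the gap between the loss of the model being trained and the loss of the reference model on the same token, and that a fixed fraction of tokens is kept. The names `select_token_mask`, `selective_loss`, `uniform_loss`, and `keep_ratio` are hypothetical.

```python
import math


def select_token_mask(train_losses, ref_losses, keep_ratio):
    """Return a 0/1 mask marking the tokens kept for training.

    Assumption (not stated in the article): a token's utility is the
    training model's loss minus the reference model's loss on that token.
    """
    if len(train_losses) != len(ref_losses):
        raise ValueError("loss lists must have the same length")
    if not train_losses:
        raise ValueError("at least one token is required")
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")

    # Score every token by the assumed utility measure.
    scores = [t - r for t, r in zip(train_losses, ref_losses)]

    # Keep the top fraction of tokens, and always at least one.
    k = max(1, math.ceil(keep_ratio * len(scores)))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)

    mask = [0] * len(scores)
    for i in ranked[:k]:
        mask[i] = 1
    return mask


def selective_loss(train_losses, mask):
    """Average the training loss over the selected tokens only."""
    return sum(l * m for l, m in zip(train_losses, mask)) / sum(mask)


def uniform_loss(train_losses):
    """Conventional objective: average the loss over every token."""
    return sum(train_losses) / len(train_losses)


# Toy per-token losses for a four-token sequence (made-up numbers).
train_losses = [2.0, 0.5, 3.0, 1.0]
ref_losses = [0.5, 0.4, 2.9, 0.2]

mask = select_token_mask(train_losses, ref_losses, keep_ratio=0.5)
print(mask)
print(selective_loss(train_losses, mask))
print(uniform_loss(train_losses))
```

In this toy example the third token has the highest raw loss but is dropped, because the reference model finds it almost as hard. Under the assumed scoring rule, a high loss alone does not make a token useful. In a real training loop the per-token losses would come from the two models' forward passes, and the mask would multiply the per-token loss before averaging.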

Unveiling the Impact: Enhanced Performance through Selective Language Modeling

Implementing Selective Language Modeling (SLM) within the RHO-1 framework has yielded substantial performance gains. The RHO-1-1B model showed an absolute increase in few-shot accuracy of up to 30% across nine mathematical tasks when trained on the OpenWebMath corpus. After fine-tuning, RHO-1-1B achieved a peak accuracy of 40.6% on the MATH dataset, while the larger RHO-1-7B model reached 51.8% on the same dataset. These models also reached baseline performance up to ten times faster than models trained using conventional methods, which suggests that SLM scales across model sizes.

Conclusion:

The introduction of the RHO-1 model marks a significant advancement in language model training, offering a more efficient and effective approach through Selective Language Modeling. This innovation underscores the importance of optimizing training methodologies to enhance model performance and scalability. For businesses in the AI market, adopting such cutting-edge techniques can lead to improved competitiveness and accelerated development of advanced language processing solutions.