- Language modeling, pivotal in machine learning, predicts word sequences’ likelihood.

- Transformer architecture, notably decoder-only variations like YOCO, revolutionizes text generation.

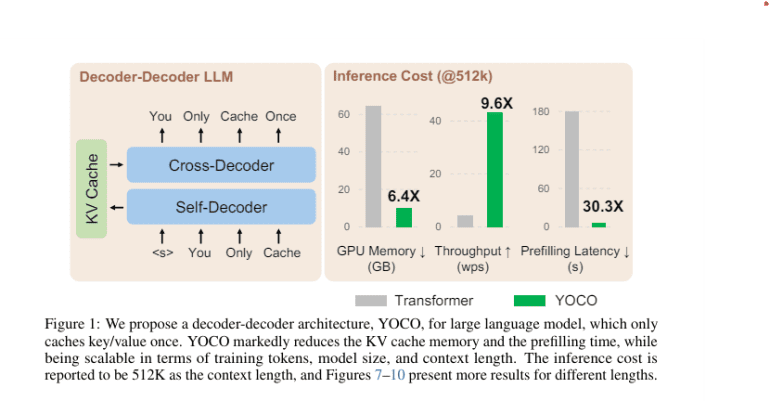

- YOCO introduces a unique decoder-decoder framework, caching key-value pairs just once, minimizing computational and memory overhead.

- It combines self-decoder and cross-decoder mechanisms, optimizing language processing with advanced attention techniques.

- YOCO outperforms traditional Transformer-based models, showcasing substantial improvements in processing speeds and memory efficiency across various datasets.

Main AI News:

In the realm of machine learning, language modeling stands tall as a fundamental pillar, tasked with predicting word sequences’ likelihood. This pivotal field not only enriches machine comprehension but also fuels the generation of human-like language, underpinning a myriad of applications spanning text summarization, translation, and auto-completion systems. Yet, the journey towards efficient language modeling is riddled with challenges, particularly when dealing with colossal models. Foremost among these challenges is the daunting computational and memory overhead incurred in processing and storing extensive data sequences, impeding scalability and real-time processing capabilities.

At the forefront of language modeling research lies the Transformer architecture, renowned for its self-attention mechanism adept at processing word sequences irrespective of their distance. Among its notable adaptations is the decoder-only Transformer, which revolutionizes text generation processes, exemplified in models like OpenAI’s GPT series. Additionally, innovative solutions such as Sparse Transformers have emerged, alleviating computational burdens by curtailing interactions between distant sequence elements. Moreover, hybrid models like BERT and T5 amalgamate diverse architectural strengths, augmenting language models’ efficiency and proficiency in comprehending and generating nuanced text.

Introducing a paradigm shift, researchers from Microsoft Research and Tsinghua University unveil a groundbreaking architecture known as You Only Cache Once (YOCO) tailored for large language models. YOCO presents a distinctive decoder-decoder framework, diverging from conventional methods by caching key-value pairs only once. This ingenious approach dramatically slashes the computational overhead and memory footprint typically associated with repetitive caching in massive language models. YOCO streamlines the attention mechanism and bolsters overall performance by incorporating both a self-decoder and a cross-decoder, allowing for efficient processing of long sequences.

The YOCO methodology intertwines self-decoder and cross-decoder mechanisms with advanced attention techniques to optimize language processing. The self-decoder harnesses a sliding window and gated retention attention to generate a concise set of key-value pairs. Subsequently, the cross-decoder capitalizes on these pairs via cross-attention, obviating the need for re-encoding and thereby conserving computational resources. Extensive evaluation across diverse datasets underscores YOCO’s prowess in real-world scenarios, showcasing remarkable enhancements in processing speeds and memory efficiency compared to conventional Transformer-based models.

Experimental findings underscore YOCO’s efficacy, with the model achieving nearly flawless needle retrieval accuracy for sequences spanning up to 1 million tokens. YOCO slashes GPU memory demands by an astounding 80-fold for 65-billion-parameter models. Furthermore, it slashes prefilling latency from 180 seconds to under 6 seconds for contexts as expansive as 512,000 tokens, while concurrently boosting throughput to 43.1 tokens per second, a staggering 9.6 times increase compared to the traditional Transformer. These metrics unequivocally establish YOCO as an exceptionally efficient architecture for processing extensive data sequences, heralding a new era in language modeling prowess.

Conclusion:

YOCO’s introduction marks a significant leap in language model efficiency, addressing key challenges of computational overhead and memory usage. Its streamlined approach not only enhances processing speeds and memory efficiency but also sets a new standard for large-scale language model architectures. In the market, this innovation could drive the development of more efficient and scalable language models, catering to diverse applications across industries reliant on natural language processing.