- Microsoft reaffirms ban on US police use of generative AI for facial recognition through Azure OpenAI Service.

- New terms explicitly prohibit integration with law enforcement agencies for facial recognition in the US.

- Global ban on real-time facial recognition via mobile cameras extended to uncontrolled environments.

- Concerns raised about hallucinations and racial biases in Axon’s GPT-4-based tool for summarizing body camera audio.

- The policy leaves room for international applications and does not cover facial recognition in controlled environments.

- Recent collaborations between OpenAI and the Pentagon underscore a shift in approach towards defense contracts.

- Azure OpenAI Service integration into Microsoft’s Azure Government product targets government agencies, including law enforcement.

Main AI News:

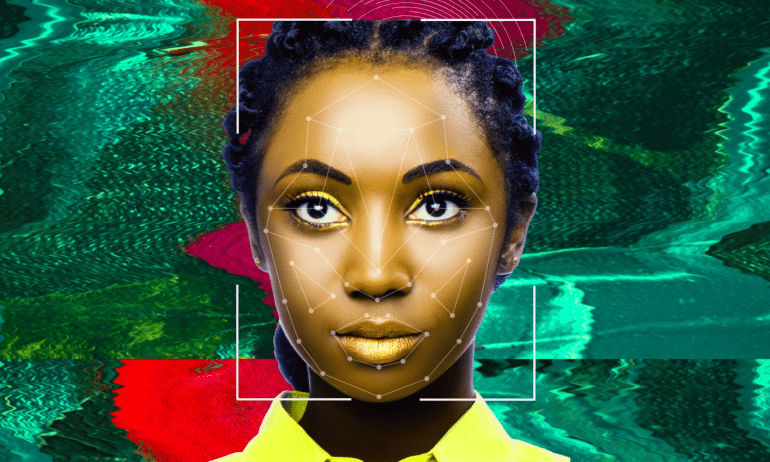

Microsoft has once again asserted its stance against US police departments using generative AI for facial recognition through Azure OpenAI Service, its enterprise-focused AI offering. The recent amendment to the service’s terms explicitly bars integrations with Azure OpenAI Service from being used for facial recognition by or for law enforcement agencies in the US. This covers both present and potential future integrations with OpenAI’s image-analyzing models.

The updated policy extends beyond domestic borders with a global prohibition on employing “real-time facial recognition technology” via mobile cameras in uncontrolled environments. This notably includes body cameras and dashcams. The move arrives on the heels of Axon’s introduction of a new product that uses OpenAI’s GPT-4 generative text model to summarize audio from body cameras. That product has drawn concerns about potential pitfalls, such as hallucinations and racial biases introduced by the training data.

While the connection between Axon’s product and Azure OpenAI Service remains unclear, the timing suggests the product may have prompted the revised policy. Notably, OpenAI had previously restricted the use of its models for facial recognition through its APIs. Efforts to clarify the situation are ongoing, with queries directed to Axon, Microsoft, and OpenAI.

Despite the stringent stance, Microsoft’s policy does allow flexibility. The complete ban applies solely to US law enforcement, leaving room for international applications that fall outside the global restriction on real-time use with mobile cameras. Moreover, the policy does not cover facial recognition conducted in controlled environments with stationary cameras, such as back offices. This strategic approach aligns with Microsoft’s and OpenAI’s evolving engagements with AI-related law enforcement and defense contracts.

Recent collaborations between OpenAI and the Pentagon underscore this evolution. OpenAI’s involvement in Pentagon projects, including cybersecurity initiatives, signifies a departure from previous restrictions on military partnerships. Similarly, Microsoft’s proposals to utilize OpenAI’s tools for military software development demonstrate a concerted effort towards defense applications.

The incorporation of Azure OpenAI Service into Microsoft’s Azure Government product further solidifies its appeal to government agencies, including law enforcement. With additional compliance measures tailored for such entities, Microsoft reaffirms its commitment to supporting government missions. Candice Ling, SVP of Microsoft’s government-focused division Microsoft Federal, has emphasized the service’s potential, highlighting intentions to pursue additional authorizations for DoD missions.

Conclusion:

The stringent measures imposed by Microsoft regarding the use of facial recognition technology for US law enforcement through Azure OpenAI Service underscore a growing emphasis on ethical AI deployment. This shift not only reflects evolving regulatory landscapes but also highlights the need for responsible AI practices across various industries. As companies navigate these complexities, there is a clear trend towards transparency, accountability, and ethical considerations, shaping the future of AI markets.