TL;DR:

- Microsoft unveils Phi-2, a 2.7 billion-parameter language model, targeting specific business needs.

- Microsoft claims Phi-2 can outperform large language models (LLMs) up to 25 times its size.

- The shift towards smaller, domain-specific models challenges the dominance of leading LLMs.

- Global AI chip shortage prompts the adoption of cost-effective solutions like Phi-2.

- Phi-2’s availability in Azure AI Studio supports research and experimentation.

- Emphasis on data quality and domain-specific knowledge ensures factually accurate AI outputs.

Main AI News:

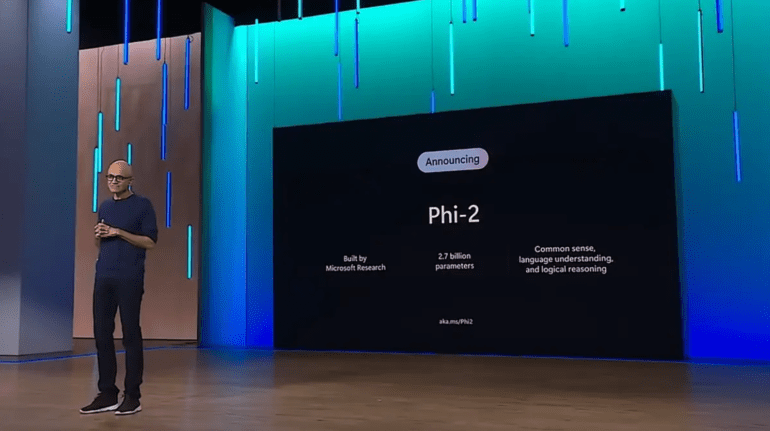

In a bid to revolutionize the field of artificial intelligence (AI) and cater to more specific business needs, Microsoft has unveiled Phi-2, the latest addition to its suite of smaller, more agile language models. This move follows the earlier introduction of Phi-1, the first in Microsoft’s lineup of small language models (SLMs), designed to offer an alternative to large language models (LLMs).

Phi-1 set the stage for this transformation with a far smaller parameter count than colossal models like GPT-3, which has 175 billion parameters, and OpenAI’s latest LLM, GPT-4, which is reported to have about 1.7 trillion parameters, a figure OpenAI has not confirmed. In contrast, Phi-1 contained just 1.3 billion parameters. Phi-2 takes this evolution further, offering a 2.7 billion-parameter language model that Microsoft claims can outperform LLMs up to 25 times its size.
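To put those figures in perspective, the short Python sketch below works through the arithmetic behind the size comparison. It uses only the parameter counts quoted above; GPT-4 is left out because its count has not been officially confirmed. The memory estimate assumes weights stored as 16-bit floats (2 bytes per parameter) and ignores activations and other runtime overhead, so treat it as a rough lower bound rather than a measured requirement.

```python
# Parameter counts as quoted in the article.
PARAMS = {
    "Phi-1": 1.3e9,
    "Phi-2": 2.7e9,
    "GPT-3": 175e9,
}

# Assumption: weights are stored as 16-bit floats, i.e. 2 bytes per parameter.
BYTES_PER_PARAM_FP16 = 2


def size_ratio(larger: str, smaller: str) -> float:
    """How many times more parameters `larger` has than `smaller`."""
    return PARAMS[larger] / PARAMS[smaller]


def weight_memory_gb(model: str, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Rough memory needed just to hold the weights, in gigabytes (10^9 bytes)."""
    return PARAMS[model] * bytes_per_param / 1e9


if __name__ == "__main__":
    # "Up to 25 times its size" corresponds to models of this many parameters.
    print(f"25 x Phi-2 = {25 * PARAMS['Phi-2'] / 1e9:.1f}B parameters")
    print(f"GPT-3 / Phi-2 = {size_ratio('GPT-3', 'Phi-2'):.1f}x")
    for name in PARAMS:
        print(f"{name}: ~{weight_memory_gb(name):.1f} GB of weights at 16-bit precision")
```

Under these assumptions, a model 25 times the size of Phi-2 would have about 67.5 billion parameters, and Phi-2's weights come to roughly 5.4 GB against about 350 GB for GPT-3, which is the gap in hardware cost the rest of the article refers to.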

As a major stakeholder and partner of OpenAI, the developer of ChatGPT, Microsoft understands the importance of efficient AI models for various applications. OpenAI’s GPT models, for instance, serve as the foundation for Microsoft’s Copilot generative AI assistant. However, traditional LLMs, when used in generative AI (genAI) applications like ChatGPT or Bard, demand substantial computational resources and, owing to their sheer size, are costly and time-consuming to tailor for specific business purposes.

Avivah Litan, a vice president and distinguished analyst at Gartner Research, highlights the limits of continually adding GPU capacity to accommodate ever-expanding model sizes. This approach, she believes, is unsustainable. To address this, there is a growing trend toward downsizing LLMs, making them more cost-effective and better suited for domain-specific tasks, such as financial service chatbots or genAI applications capable of summarizing electronic healthcare records.

Smaller, domain-specific language models, trained on specific datasets, are poised to challenge the dominance of today’s leading LLMs, including OpenAI’s GPT-4, Meta AI’s LLaMA 2, and Google’s PaLM 2.

Dan Diasio, Global Artificial Intelligence Consulting Leader at Ernst & Young, observes that the current chip shortage has caused a backlog of GPU orders, impacting not only tech companies developing LLMs but also user companies aiming to customize models or create proprietary LLMs. The high costs associated with fine-tuning and developing specialized corporate LLMs have led to the adoption of knowledge enhancement packs and prompt libraries containing specialized knowledge.

Microsoft positions Phi-2 as an “ideal playground for researchers,” offering opportunities for mechanistic interpretability exploration, safety enhancements, and fine-tuning experiments across various tasks. Phi-2 is readily available in the Azure AI Studio model catalog.

Victor Botev, former AI research engineer at Chalmers University and CTO and co-founder of Iris.ai, emphasizes the significance of Microsoft’s Phi-2 release. By challenging conventional scaling norms and focusing on “textbook-quality” data with a smaller-scale model, Microsoft underscores the multifaceted nature of AI, highlighting that increasing model size isn’t the sole path to progress.

While the specifics of Phi-2’s training data remain undisclosed, it is clear that innovations are driving models to achieve more with less. However, the quality of data input remains paramount, as AI tools, including LLMs, rely heavily on prompt engineering to generate accurate responses. Ensuring well-structured, reason-based data and using domain-specific knowledge are key to producing factually accurate outputs and minimizing the risk of erroneous information.

Conclusion:

Microsoft’s Phi-2 represents a pivotal moment in the AI market. It showcases a strategic shift towards smaller, more efficient models tailored to business needs, addressing the limitations of larger models. This trend towards domain-specific AI solutions is expected to democratize AI adoption across various industries, making it cost-effective and accessible for businesses of all sizes. Chip shortages are a challenge that both tech and user companies must navigate. Phi-2’s release underscores the importance of data quality and structured knowledge in ensuring AI model accuracy and reliability, shaping the future landscape of AI innovation.