- Microsoft announces plan to offer AMD AI chips alongside Nvidia processors for cloud computing.

- Details to be revealed at upcoming Build developer conference, including preview of Cobalt 100 custom processors.

- AMD’s Instinct MI300X AI chips to be integrated into Azure cloud service, providing alternative to Nvidia GPUs.

- 4th Gen AMD EPYC processors to power general-purpose, memory-intensive, and compute-optimized virtual machines.

- AMD's MI300X chips projected to generate $4 billion in revenue this year; capable of training and running large-scale AI models.

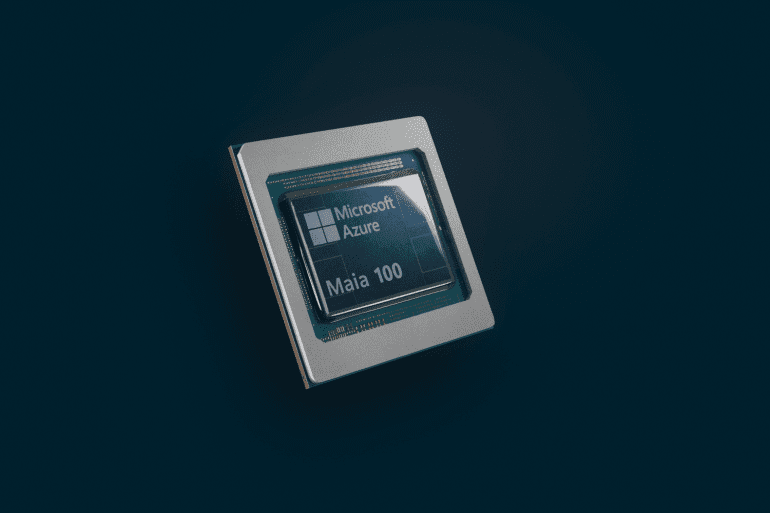

- Microsoft's cloud division also offers its own Maia AI chips alongside Nvidia's.

- Cobalt 100 processors promise 40% better performance than other Arm-based processors; currently being tested for use in Microsoft Teams.

Main AI News:

To diversify its offerings and meet evolving customer needs, Microsoft plans to give its cloud computing customers access to AMD artificial intelligence chips as an alternative to Nvidia processors. The company will share details of the initiative at its upcoming Build developer conference.

At the conference, Microsoft will also preview its Cobalt 100 custom processors. AMD confirmed the news separately.

At the center of the collaboration are clusters of AMD's flagship Instinct MI300X AI chips, which will be available through Microsoft's Azure cloud computing service. The move gives customers an alternative to Nvidia's H100 family of GPUs, which have been hard to obtain amid soaring demand.

Microsoft will also roll out 4th Gen AMD EPYC processors for general-purpose, memory-intensive, and compute-optimized virtual machines, giving customers a wider range of options for their cloud workloads.

Building AI models or running resource-intensive applications has typically required clusters of multiple GPUs because of the large volumes of data and computation involved. AMD's MI300X chips, projected to generate $4 billion in revenue this year, are powerful enough to both train and run large-scale AI models.

Besides Nvidia's AI chips, Microsoft's cloud computing division also offers access to its own AI chips, called Maia. Meanwhile, the Cobalt 100 processors, which Microsoft announced in November and will preview next week, promise 40% better performance than other Arm-based processors. The chips are currently being tested for use in Microsoft Teams and are positioned to compete with Amazon.com's in-house Graviton CPUs.

Conclusion:

Microsoft's decision to offer AMD AI chips alongside Nvidia's diversifies its cloud hardware and gives customers an alternative to Nvidia processors, which should strengthen its position in the cloud market. Combined with the performance gains promised by its Cobalt 100 processors, the move shows Microsoft broadening the range of computing options available to its cloud customers.