TL;DR:

- Microsoft introduces Orca, a 13-billion-parameter model that learns from GPT-4’s complex explanation traces.

- Orca targets the weak reasoning and comprehension of smaller instruction-tuned models and significantly improves on their performance.

- Three key contributions: explanation tuning, scaling tasks and instructions, and thorough evaluation.

- Explanation tuning augments query-response pairs with detailed GPT-4 responses that explain the teacher’s reasoning, helping student models learn how an answer is reached.

- Scaling tasks and instructions draws on the Flan 2022 Collection to obtain a diverse range of tasks and complex prompts for training.

- Orca outperforms state-of-the-art (SOTA) instruction-tuned models such as Vicuna-13B by more than 100% on BIG-Bench Hard (BBH) and is competitive on zero-shot academic exams.

Main AI News:

In the realm of Large Foundation Models (LFMs), the zero-shot performance of ChatGPT and GPT-4 has garnered immense attention. Their achievements can be attributed to the scaling of model and dataset sizes, along with a fine-tuning stage that aligns the models with user intent.

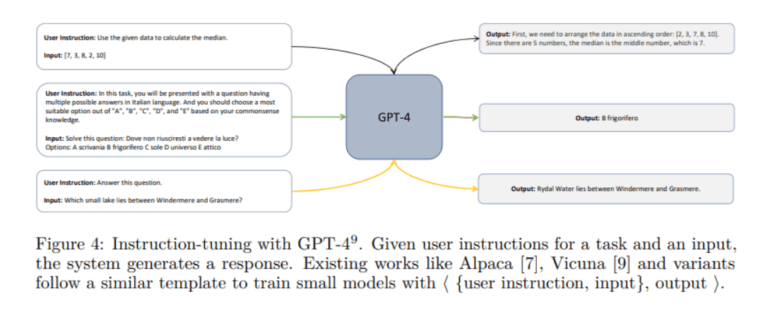

As LFMs continue to flourish, a thought-provoking question arises: can these models supervise their own behavior, or that of other models, with minimal human intervention? To explore this possibility, a surge of research has used LFMs as teachers to generate datasets for training smaller models. However, the resulting student models often show weaker reasoning and comprehension skills than their teachers.

To address this limitation, a Microsoft research team presents a solution in their paper titled “Orca: Progressive Learning from Complex Explanation Traces of GPT-4.” Orca, a 13-billion-parameter model, learns from GPT-4’s intricate explanation traces, step-by-step thought processes, and complex instructions. The resulting model significantly outperforms existing state-of-the-art instruction-tuned models.

The research team makes three pivotal contributions, encompassing explanation tuning, scaling tasks and instructions, and comprehensive evaluation, to tackle the prevailing challenges associated with instruction-tuned models in terms of task diversity, query complexity, and data scaling.

In explanation tuning, the researchers emphasize that query-response pairs can carry more useful learning signals for student models when the teacher explains itself. Consequently, they augment these pairs with detailed responses from GPT-4 that spell out the reasoning process the teacher follows when generating an answer.
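The data construction described above can be sketched as follows. This is a minimal illustration, not the authors’ pipeline: the instruction text, the `TrainingExample` type, the `### System:` prompt layout, and `stub_teacher` (a stand-in for a real call to a teacher model) are all hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical instruction asking the teacher to show its reasoning.
EXPLAIN_INSTRUCTION = (
    "You are a helpful assistant. Think step by step and justify your answer."
)


@dataclass(frozen=True)
class TrainingExample:
    system: str    # instruction that elicits an explanation
    query: str     # the original task input
    response: str  # the teacher's detailed, explained answer


def build_example(
    query: str,
    teacher: Callable[[str, str], str],
    system: str = EXPLAIN_INSTRUCTION,
) -> TrainingExample:
    """Ask the teacher for an explained answer and package it for the student."""
    response = teacher(system, query)
    return TrainingExample(system=system, query=query, response=response)


def to_prompt(example: TrainingExample) -> str:
    """Flatten an example into the text the student is conditioned on.

    The section markers are an assumed layout, not one taken from the paper.
    """
    return (
        f"### System:\n{example.system}\n"
        f"### User:\n{example.query}\n"
        f"### Assistant:\n"
    )


def stub_teacher(system: str, query: str) -> str:
    # Stand-in for a real teacher model; returns a canned explanation.
    return f"Step 1: restate the question ({query}). Step 2: reason it through."


example = build_example("What is 2 + 2?", stub_teacher)
print(to_prompt(example) + example.response)
```

The student would then be fine-tuned to produce `example.response` given `to_prompt(example)`, so it is trained on the explanation as well as the final answer.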

To scale tasks and instructions, the team leverages the Flan 2022 Collection and its diverse array of tasks. By sampling strategically from this collection and then sub-sampling further, they create complex prompts that are used to query LFMs, yielding a rich and varied training set.
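A two-stage sampling step of this kind could look roughly like the sketch below. The function name, the per-task cap, and the toy collection are assumptions made for illustration; the article does not specify the actual sampling rules or sizes.

```python
import random


def sample_queries(collection, per_task, total, seed=0):
    """Sample up to `per_task` queries from each task, then sub-sample `total`.

    `collection` maps a task name to a list of query strings.
    Returns a list of (task, query) pairs.
    """
    rng = random.Random(seed)  # seeded so the sample is reproducible
    pooled = []
    for task in sorted(collection):
        queries = collection[task]
        k = min(per_task, len(queries))  # cap so large tasks cannot dominate
        pooled.extend((task, q) for q in rng.sample(queries, k))
    rng.shuffle(pooled)
    return pooled[:total]  # second stage: sub-sample the pooled queries


# Toy stand-in for a task collection (hypothetical data).
toy_collection = {
    "arithmetic": [f"arith-{i}" for i in range(5)],
    "translation": [f"trans-{i}" for i in range(2)],
}
selected = sample_queries(toy_collection, per_task=3, total=4)
print(len(selected))  # 4
```

Capping each task before pooling keeps the mix diverse; the second cut only controls the overall budget of queries sent to the teacher.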

Finally, a thorough evaluation assesses Orca’s generative, reasoning, and comprehension abilities. The analysis compares Orca against strong baselines such as Text-Davinci-003, ChatGPT, GPT-4, and Vicuna. Orca outperforms state-of-the-art instruction-tuned models like Vicuna-13B by more than 100% on BIG-Bench Hard (BBH) and shows competitive performance in zero-shot academic exams.
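On the arithmetic: “more than 100%” reads most naturally as a relative improvement, meaning the score more than doubles over the baseline. A minimal sketch of that calculation follows; the scores in the usage lines are made-up placeholders, not results from the paper.

```python
def relative_improvement(new_score, baseline_score):
    """Percentage gain of new_score over baseline_score."""
    if baseline_score <= 0:
        raise ValueError("baseline_score must be positive")
    return (new_score - baseline_score) / baseline_score * 100.0


# Placeholder scores for illustration only (not taken from the paper).
gain = relative_improvement(45.0, 20.0)
print(f"{gain:.1f}%")  # 125.0%
```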

Conclusion:

The introduction of Orca represents a significant advance for smaller language models. By enabling them to learn from GPT-4’s explanation traces, Microsoft addresses their weak reasoning and comprehension skills. The approach has the potential to benefit various industries by giving smaller models stronger capabilities and more accurate results. The market can expect a surge in the development and use of instruction-tuned models, with Orca helping to pave the way.