TL;DR:

- Mintplex Labs has introduced AnythingLLM, a full-stack application for chatting with documents and other content through large language models (LLMs).

- AnythingLLM pairs an intuitive user interface with Pinecone and ChromaDB for vector storage and the OpenAI API for LLM access.

- Unlike alternatives such as PrivateGPT and LocalGPT, AnythingLLM offers an interactive UI and does not require running an LLM locally, which makes it usable on modest hardware.

- The application groups documents into isolated workspaces, allowing users to maintain separate environments for different use cases.

- AnythingLLM offers two chat modes: conversation, which retains previous questions, and query, for simple question-and-answer interactions with specified documents.

- Responses drawn from publicly accessible documents include citations linking back to the original content.

- The project has three main sections: collector, frontend (built with Vite and React), and server (based on Node.js and Express).

- AnythingLLM is open-sourced under the MIT License, welcoming bug fixes and contributions from the community.

Main AI News:

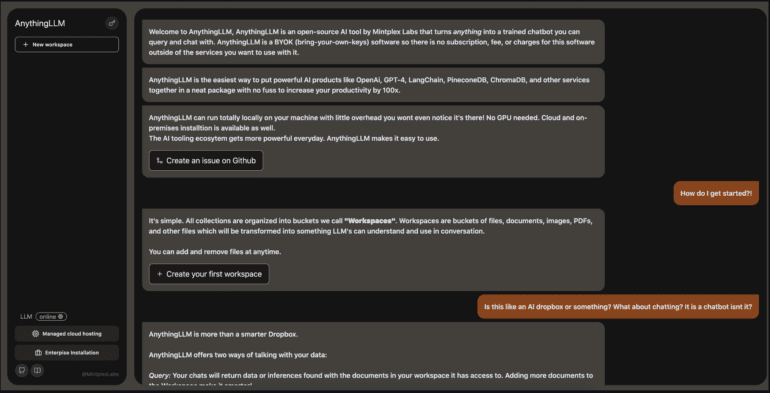

The number of projects built around artificial intelligence, and large language models (LLMs) in particular, has grown rapidly since the launch of OpenAI’s ChatGPT. These projects let researchers and developers apply LLMs to complex tasks, natural language understanding, and human-like text generation. Mintplex Labs recently released AnythingLLM, a full-stack application that aims to be the easiest way for users to chat with documents, resources, and more through a polished user interface.

AnythingLLM uses Pinecone and ChromaDB to manage vector embeddings and integrates with the OpenAI API for LLM access and conversational features. The tool is designed to run in the background without consuming much memory or other resources. By default, AnythingLLM relies on an LLM and vector database hosted remotely in the cloud, so little has to run on the user’s own machine.
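
To make the role of the vector database concrete, the sketch below shows the general retrieval idea in plain Python: documents and a question are represented as vectors, and the document closest to the question by cosine similarity is the one that would be passed to the LLM as context. The three-dimensional vectors, the `cosine_similarity` and `top_match` helpers, and the file names are all hypothetical. Real embeddings come from an embedding model, and this is not Pinecone's, ChromaDB's, or AnythingLLM's actual code.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_match(query_vector, index):
    """Return the name of the stored vector most similar to the query."""
    return max(index, key=lambda name: cosine_similarity(query_vector, index[name]))


# Hypothetical, hand-written "embeddings"; real ones have hundreds of dimensions.
index = {
    "billing-faq.md": [0.9, 0.1, 0.0],
    "api-guide.md": [0.1, 0.9, 0.2],
    "release-notes.md": [0.0, 0.3, 0.9],
}

question_vector = [0.2, 0.8, 0.1]  # stands in for an embedded user question
best = top_match(question_vector, index)
print(best)
```

A hosted vector database performs the same kind of nearest-neighbor lookup, only over far more vectors and with indexing structures that avoid comparing the query against every entry.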

The creators of AnythingLLM highlight the features that distinguish their tool from others currently available, such as PrivateGPT and LocalGPT. Unlike PrivateGPT, which is solely a command-line tool, AnythingLLM has an interactive UI that makes it more approachable.

Furthermore, PrivateGPT requires running a local LLM on the user’s machine, which is a poor fit for anyone without high-performance hardware. LocalGPT, which was inspired by PrivateGPT, has the same limitation because it also relies on a local LLM. Both solutions carry significant technical overhead. This is where AnythingLLM has an advantage: it uses LLMs and vector databases that users are likely to know already, which makes it more accessible. The application can be used both locally and remotely, running unobtrusively in the background.

Central to AnythingLLM’s architecture is the grouping of documents into workspaces. Different workspaces can contain the same documents but do not interact with one another, so users can keep distinct workspaces for different use cases. The application offers two chat modes: conversation, which retains previous questions, and query, designed for simple question-and-answer exchanges against user-specified documents.
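
The workspace and chat-mode behavior described above can be sketched in a few lines of Python. This is an illustrative model only, not AnythingLLM's implementation: the `Workspace` class, its `build_prompt` method, and the choice to record history only in conversation mode are assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Workspace:
    """Hypothetical workspace: its own documents and its own chat history."""
    name: str
    documents: set = field(default_factory=set)
    history: list = field(default_factory=list)

    def build_prompt(self, question, mode="query"):
        """Return the list of questions that would be sent to the LLM.

        "conversation" mode prepends earlier questions from this workspace
        and records the new one; "query" mode sends only the current question.
        """
        if mode not in ("conversation", "query"):
            raise ValueError(f"unknown mode: {mode}")
        if mode == "query":
            return [question]
        previous = list(self.history)
        self.history.append(question)
        return previous + [question]


# The same file can live in two workspaces without the workspaces sharing state.
research = Workspace("research", documents={"paper.pdf", "notes.md"})
support = Workspace("support", documents={"notes.md"})

research.build_prompt("What is the main result?", mode="conversation")
follow_up = research.build_prompt("How was it measured?", mode="conversation")
one_off = research.build_prompt("Who wrote it?", mode="query")
print(follow_up)
print(one_off)
```

Here `follow_up` carries the earlier question along with the new one, `one_off` contains only the single query, and the `support` workspace's history stays empty even though it holds one of the same documents.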

When an answer draws on publicly accessible documents, each chat response includes a citation linking back to the original content. The project is a monorepo with three primary sections: collector, frontend, and server. The collector is a Python utility that quickly converts publicly available online content, such as videos from specific YouTube channels, Medium articles, and blog links, or local documents into a format LLMs can use. The frontend is built with Vite and React, while a Node.js and Express server handles all LLM interactions and vector database management.
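
As a rough illustration of how collected content can carry its origin through to a cited answer, the sketch below splits a document into chunks tagged with their source and then attaches the distinct sources to a response. The `collect` and `cite` functions, the chunk size, and the placeholder URL are hypothetical and are not taken from the project's collector or server code.

```python
def collect(source_url, text, words_per_chunk=8):
    """Split raw text into word-based chunks, each tagged with its source."""
    words = text.split()
    return [
        {"source": source_url, "text": " ".join(words[i:i + words_per_chunk])}
        for i in range(0, len(words), words_per_chunk)
    ]


def cite(answer, used_chunks):
    """Attach the distinct sources of the chunks used, in first-seen order."""
    sources = list(dict.fromkeys(chunk["source"] for chunk in used_chunks))
    return {"answer": answer, "citations": sources}


chunks = collect(
    "https://example.com/blog/post",  # placeholder URL, not a real source
    "Workspaces keep documents separate. Each chat response links back to its source.",
)
response = cite("Responses link back to their source.", chunks)
print(response["citations"])
```

Keeping the source alongside every chunk is what makes the citation possible later: whichever chunks are retrieved for an answer, their origins can be listed without any extra lookup.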

The AnythingLLM project is open source under the MIT License, and the developers welcome bug fixes and other contributions from the community. The project could change how users work with documents and other content through LLMs. Interested users can clone the project from its GitHub repository and set up their own instance.

Conclusion:

The introduction of AnythingLLM is a notable development among tools built on large language models. This full-stack application addresses the limitations of existing tools by providing an interactive user interface, remote LLM hosting, and document organization through workspaces. By building on familiar LLMs and vector databases, it improves accessibility and reduces local resource demands, and its integration with Pinecone, ChromaDB, and the OpenAI API supplies the retrieval and chat functionality. The tool has the potential to reshape how users interact with documents. As an open-source project, AnythingLLM invites bug fixes and enhancements from the community, which should help it keep evolving.