TL;DR:

- Mistral AI introduces Mistral 7B, a 7.3 billion parameter language model, outperforming larger competitors.

- This versatile model excels in text summarization, classification, text completion, and code completion.

- Mistral 7B is open-source under Apache 2.0 license, promoting innovation and accessibility.

- Benchmark tests show its superiority over Llama 2 models in most tasks; on coding, it trails the specialized CodeLlama 7B.

- Mistral 7B’s efficiency leads to substantial cost savings for businesses.

- The company plans to release a larger model with enhanced capabilities in 2024.

Main AI News:

Mistral AI, the Paris-based startup that has been making waves in the tech world, has just dropped a bombshell with the release of its language model, Mistral 7B. The release comes merely six months after the company’s inception and follows a $118 million seed round, reportedly the largest seed round in Europe’s startup history.

Mistral 7B, a 7.3 billion parameter model, has set a new standard for compact yet powerful language models. It not only outperforms larger competitors like Meta’s Llama 2 13B but also handles both English language tasks and coding tasks. This dual functionality positions Mistral 7B as a versatile option for various enterprise-centric applications.

One of the most noteworthy aspects of this release is Mistral AI’s commitment to open-source principles. The company is generously sharing Mistral 7B under the Apache 2.0 license, allowing anyone to harness its capabilities without restrictions, whether on local servers or in the cloud. This move is expected to spark innovation and drive new possibilities across diverse industry sectors.

Mistral 7B: Redefining Possibilities

Founded by alumni of Google’s DeepMind and Meta, Mistral AI is on a mission to revolutionize the enterprise landscape by leveraging publicly available data and contributions from its customers. With the launch of Mistral 7B, the company is taking its first significant step towards realizing this vision.

Mistral 7B boasts an impressive range of features, including low-latency text summarization, classification, text completion, and code completion. Even though this model has just been unveiled, it has already surpassed its open-source rivals in various benchmark tests.

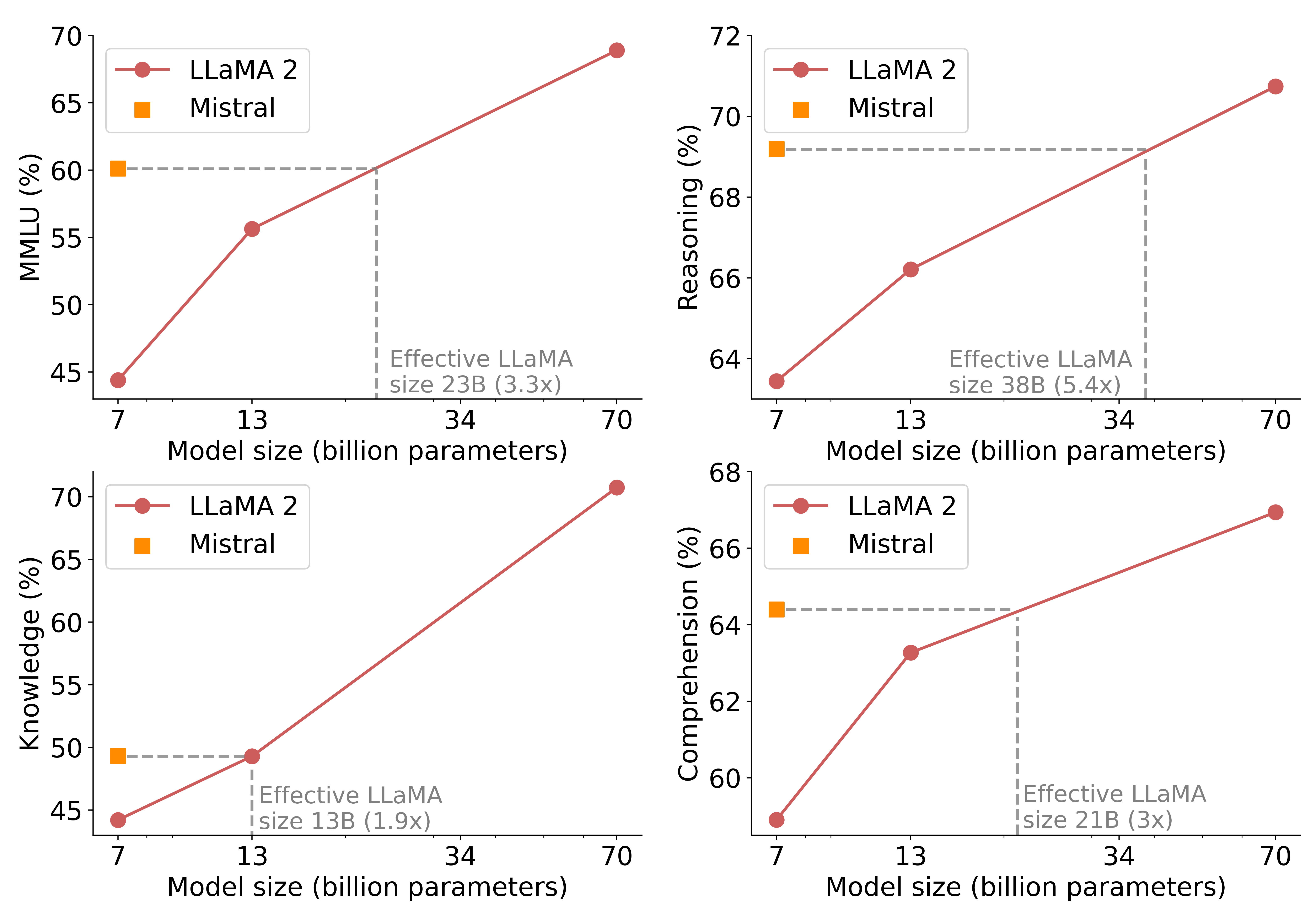

For instance, in the challenging Massive Multitask Language Understanding (MMLU) test, covering an extensive array of subjects, Mistral 7B demonstrated an accuracy of 60.1%. In comparison, Llama 2 7B and 13B achieved a modest 44% and 55%, respectively. Likewise, in tests evaluating commonsense reasoning and reading comprehension, Mistral 7B outperformed the Llama models with accuracy scores of 69% and 64%, respectively. The only area where Llama 2 13B managed to match Mistral 7B was the world knowledge test, which the company attributes to Mistral 7B’s smaller parameter count limiting how much knowledge it can store.

The company proudly declared, “For all metrics, all models were re-evaluated with our evaluation pipeline for accurate comparison. Mistral 7B significantly outperforms Llama 2 13B on all metrics and is on par with Llama 34B (on many benchmarks).”

However, it’s worth noting that in coding tasks, Mistral 7B, while impressive, fell short of the fine-tuned CodeLlama 7B. Meta’s model achieved accuracies of 31.1% and 52.5% on the 0-shot HumanEval and 3-shot MBPP tests, with Mistral 7B closely following at 30.5% and 47.5%, respectively.

A Game-Changer for Businesses

Mistral AI’s achievement with Mistral 7B holds significant promise for businesses across the globe. On MMLU, for instance, Mistral 7B delivers performance equivalent to a Llama 2 model more than three times its size (about 23 billion parameters). This translates to substantial memory savings and cost benefits without compromising output quality.
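The memory savings can be made concrete with rough arithmetic. The sketch below is a back-of-the-envelope estimate, not a figure from the company: it assumes 16-bit weights (2 bytes per parameter) and counts weights only, ignoring activations and the attention cache. The function name is illustrative.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory needed to hold the model weights, in gigabytes.

    Assumption: 2 bytes per parameter (16-bit weights); weights only.
    """
    return num_params * bytes_per_param / 1e9


# Parameter counts taken from the article.
mistral_7b_gb = weight_memory_gb(7.3e9)
llama_equivalent_gb = weight_memory_gb(23e9)

print(f"Mistral 7B weights:           ~{mistral_7b_gb:.1f} GB")
print(f"23B-parameter model weights:  ~{llama_equivalent_gb:.1f} GB")
print(f"Ratio: ~{llama_equivalent_gb / mistral_7b_gb:.2f}x")
```

Under these assumptions the smaller model needs roughly a third of the weight memory, which is often the difference between fitting on a single accelerator and needing several.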

The company attributes the model’s faster inference and efficient handling of longer sequences to two attention mechanisms: Grouped-Query Attention (GQA), which speeds up inference, and Sliding Window Attention (SWA), which handles longer sequences at lower cost. With SWA, each token attends only to a fixed window of preceding tokens rather than to the entire sequence, which the company says yields a 2x speed improvement for sequences of 16k tokens with a window of 4k.
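To illustrate why a sliding window reduces work, the sketch below builds a causal sliding-window mask and counts how many query-key pairs are scored with and without the window. It is a simplified, single-layer illustration, not Mistral AI’s implementation: the function names are hypothetical, and it assumes the window includes the current token.

```python
from typing import List, Optional


def sliding_window_mask(seq_len: int, window: int) -> List[List[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    A position sees itself and up to (window - 1) earlier positions.
    """
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


def attended_pairs(seq_len: int, window: Optional[int] = None) -> int:
    """Count (query, key) pairs scored; window=None means full causal attention."""
    if window is None:
        return seq_len * (seq_len + 1) // 2
    w = min(window, seq_len)
    # The first w rows are ordinary causal rows; every later row has exactly w keys.
    return w * (w + 1) // 2 + (seq_len - w) * w


# Small mask to show the banded, lower-triangular shape.
for row in sliding_window_mask(seq_len=6, window=3):
    print("".join("x" if allowed else "." for allowed in row))

# Article's setting, assuming 16k = 16384 tokens and 4k = 4096 tokens.
full = attended_pairs(16384)
windowed = attended_pairs(16384, window=4096)
print(f"full causal: {full}, sliding window: {windowed}, ratio: {full / windowed:.2f}")
```

For a 16k sequence with a 4k window, the number of scored pairs drops by a factor of roughly 2.3 under these assumptions, which is in the same range as the 2x speedup the company cites.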

Looking ahead, Mistral AI has ambitious plans to expand its offerings. The company is gearing up to release a larger model capable of enhanced reasoning and multilingual capabilities, expected to make its debut in 2024. For now, Mistral 7B is readily deployable across various platforms, from local servers to leading cloud providers like AWS, GCP, or Azure, thanks to the company’s reference implementation and vLLM inference server.

Conclusion:

Mistral AI’s launch of Mistral 7B signifies a remarkable advancement in language models, particularly for enterprise applications. Its outstanding performance, open-source approach, and cost-efficiency hold the potential to disrupt the market and accelerate AI-driven innovations in various industries. Businesses should closely monitor Mistral AI’s developments as they could offer substantial competitive advantages in the near future.