TL;DR:

- Researchers from MIT and Adobe have introduced Distribution Matching Distillation (DMD), a method for fast image generation with diffusion models.

- DMD distills multi-step diffusion models into generators that produce an image in a single step.

- Unlike GANs and VAEs, diffusion models need many expensive neural network evaluations per image; DMD cuts this to a single pass.

- DMD builds on pretrained diffusion models, which guide the one-step generator toward realistic images.

- The generator is trained with gradient updates that push its images toward realism and away from artificial-looking results.

- Its update rule builds on an idea previously used for test-time optimization of 3D objects and applies it to training an entire generative model.

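- It outperforms all published few-step diffusion approaches, reaching an FID of 2.62 on ImageNet 64×64 and 11.49 on zero-shot COCO-30k, with quality comparable to Stable Diffusion at orders of magnitude lower cost.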

- Using FP16 inference, DMD can produce 512×512 images at 20 frames per second on modern hardware, opening possibilities for interactive applications.

Main AI News:

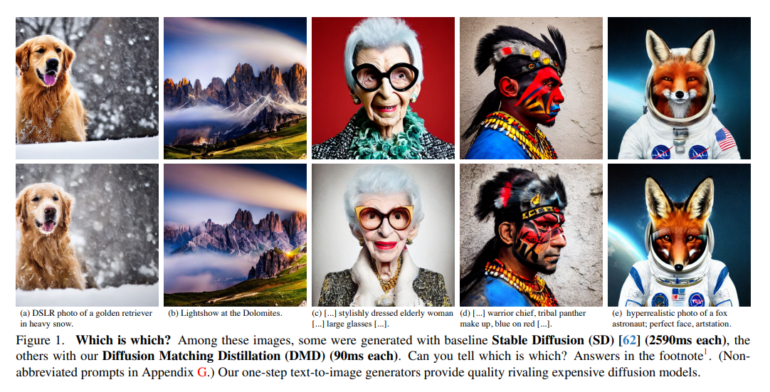

Artificial intelligence continues to push the boundaries of image generation. Among the latest breakthroughs is Distribution Matching Distillation (DMD), a method developed by researchers from MIT and Adobe. DMD aims to turn diffusion models into highly efficient one-step image generators, which could reshape creative image production.

Diffusion models have already made waves in image generation, delivering unprecedented diversity and realism. Unlike alternatives such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), diffusion models generate an image iteratively, progressively removing noise from a Gaussian sample. This approach yields impressive results, but it requires many evaluations of an expensive neural network for every image, hindering real-time interaction.

Previous attempts to speed up this process distilled the complex noise-to-image mapping of multi-step diffusion sampling into a single-pass student network. However, learning such a high-dimensional mapping is difficult, and these distilled models often fell short of the original multi-step diffusion model's quality.

Enter DMD, which bridges the gap between speed and quality in image generation. Its key idea is to make the images produced by the one-step student, taken as a whole, indistinguishable from those produced by the original diffusion model, without requiring a direct correspondence between each noise input and a specific diffusion-generated image. This approach follows the same principle as other distribution-matching generative models, such as Generative Moment Matching Networks (GMMNs) and GANs.
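To illustrate what matching at the distribution level means, here is a minimal sketch of a GMMN-style loss: the maximum mean discrepancy (MMD) with a Gaussian kernel, computed on 1-D toy samples. This is not DMD's loss (DMD matches distributions through diffusion-model scores); it only shows that such a loss compares two sets of samples without pairing any generated sample with a particular target. The kernel bandwidth and sample sizes are arbitrary choices.

```python
import math
import random

def gaussian_kernel(a, b, bandwidth=1.0):
    return math.exp(-((a - b) ** 2) / (2 * bandwidth**2))

def mmd_squared(xs, ys, bandwidth=1.0):
    """Biased estimate of the squared maximum mean discrepancy between two 1-D sample sets."""
    def mean_kernel(us, vs):
        return sum(gaussian_kernel(u, v, bandwidth) for u in us for v in vs) / (len(us) * len(vs))
    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2 * mean_kernel(xs, ys)

rng = random.Random(0)
real = [rng.gauss(0.0, 1.0) for _ in range(200)]
close = [rng.gauss(0.0, 1.0) for _ in range(200)]  # same distribution, different samples
far = [rng.gauss(3.0, 1.0) for _ in range(200)]    # shifted distribution

print(mmd_squared(real, close) < mmd_squared(real, far))  # True

# Reordering one set leaves the loss unchanged (up to float rounding): no pairing is used.
shuffled = close[:]
rng.shuffle(shuffled)
print(math.isclose(mmd_squared(real, close), mmd_squared(real, shuffled), abs_tol=1e-9))  # True
```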

Distribution-matching models, GANs in particular, have historically been hard to scale to general text-to-image data, whereas diffusion models have excelled at rendering lifelike images at that scale. The research team sidesteps this hurdle by building on a diffusion model that has already undergone extensive training on text-to-image data. The pretrained model represents the underlying data distribution, and fine-tuning a copy of it on samples from their distillation generator lets it represent the synthetic distribution that the generator produces.
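One standard way to make a diffusion model capture a new distribution is to keep training it with the denoising objective on samples from that distribution. The sketch below shrinks this to an extreme toy, purely as an assumption for illustration: a linear denoiser D(x_t) = p + q·x_t at a single noise level, fit by least squares on samples from a hypothetical generator `G(z) = theta + z`. A real model is a neural network trained across many noise levels; `fit_linear_denoiser` and `theta` are illustrative names, not DMD's code.

```python
import random

def fit_linear_denoiser(samples, sigma, seed=0):
    """Fit D(x_t) = p + q * x_t by minimizing the denoising loss mean((D(x_t) - x)^2).

    Toy stand-in (assumption) for fine-tuning a diffusion model on generator outputs.
    """
    rng = random.Random(seed)
    noisy = [x + sigma * rng.gauss(0.0, 1.0) for x in samples]  # x_t = x + sigma * eps
    n = len(samples)
    mean_t = sum(noisy) / n
    mean_x = sum(samples) / n
    cov = sum((t - mean_t) * (x - mean_x) for t, x in zip(noisy, samples)) / n
    var_t = sum((t - mean_t) ** 2 for t in noisy) / n
    q = cov / var_t          # least-squares slope
    p = mean_x - q * mean_t  # least-squares intercept
    return p, q

gen_rng = random.Random(1)
theta = 1.5  # hypothetical generator G(z) = theta + z, so its outputs follow N(1.5, 1)
fake_samples = [theta + gen_rng.gauss(0.0, 1.0) for _ in range(20000)]
p, q = fit_linear_denoiser(fake_samples, sigma=1.0)
# For N(theta, 1) data at sigma = 1, the optimal denoiser is 0.5 * theta + 0.5 * x_t.
print(round(p, 2), round(q, 2))
```

The fitted denoiser lands near the optimal one for the generator's distribution, which is the sense in which a model trained on fake samples "knows" the synthetic distribution.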

In essence, the denoised outputs of these diffusion models serve as gradient directions: the model of real data points toward greater realism, while the model fine-tuned on generated images points toward greater artificiality. A similar idea had previously been applied to test-time optimization of 3D objects.
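A toy version of this idea fits in a few lines, under stated assumptions: 1-D data, a hypothetical generator that only learns a shift (`G(z) = theta + z`), and closed-form Gaussian denoisers standing in for both diffusion models. In DMD the "fake" model is itself trained on the generator's outputs, and any per-timestep weighting from the paper is omitted here. Each update moves the generator along the difference between the two denoised outputs, divided by the noise variance.

```python
import random

MU_REAL = 3.0  # toy "real" data distribution: N(MU_REAL, 1) (assumption)

def denoise_real(x_t, sigma):
    """Stand-in for the frozen pretrained model: exact E[x | x_t] for the real data."""
    return MU_REAL + (x_t - MU_REAL) / (1.0 + sigma**2)

def denoise_fake(x_t, sigma, theta):
    """Stand-in for the model of generated data N(theta, 1), in closed form (assumption)."""
    return theta + (x_t - theta) / (1.0 + sigma**2)

def train_generator(theta=0.0, lr=0.5, steps=200, batch=64, seed=0):
    """Gradient descent on a hypothetical one-step generator G(z) = theta + z."""
    rng = random.Random(seed)
    for _ in range(steps):
        grad = 0.0
        for _ in range(batch):
            x = theta + rng.gauss(0.0, 1.0)        # generator output
            sigma = rng.uniform(0.5, 5.0)          # random noise level
            x_t = x + sigma * rng.gauss(0.0, 1.0)  # noised generator output
            # Score difference s_fake - s_real, written via denoised outputs.
            grad += (denoise_fake(x_t, sigma, theta) - denoise_real(x_t, sigma)) / sigma**2
        theta -= lr * grad / batch                 # dG/dtheta = 1 for this generator
    return theta

print(round(train_generator(), 3))
```

Because both toy denoisers are exact, the update no longer depends on the sampled `x_t`, and `theta` converges to `MU_REAL`; with learned networks the same update is only a stochastic estimate.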

The key element of DMD is a gradient update rule built from the difference between these two outputs, separating realism from artifice. Following it minimizes the divergence between the real and fake distributions and propels the generated images toward authenticity. The technique's effectiveness shows not only in the lifelike images it produces but also in the fact that it can train an entire generative model, rather than optimizing one output at a time.
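For readers who want the form of this update rule, here is a hedged summary in simplified notation of our own (variance-exploding noising, with any time-dependent weighting folded into a generic $w_t$). With generator $G_\theta$, noise $z$, and $x_t = G_\theta(z) + \sigma_t \epsilon$, the generator's gradient is approximately

$$
\nabla_\theta D_{\mathrm{KL}}\big(p_{\text{fake}} \,\|\, p_{\text{real}}\big) \approx \mathbb{E}_{z,\,t,\,\epsilon}\!\left[ w_t \big( s_{\text{fake}}(x_t, t) - s_{\text{real}}(x_t, t) \big) \frac{\partial G_\theta(z)}{\partial \theta} \right],
\qquad
s(x_t, t) = \frac{\mu(x_t, t) - x_t}{\sigma_t^{2}},
$$

where $\mu$ is a diffusion model's denoised output. Substituting the second expression gives $s_{\text{fake}} - s_{\text{real}} = (\mu_{\text{fake}} - \mu_{\text{real}})/\sigma_t^{2}$, which is how the two denoised outputs become the update direction.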

Alongside the distribution matching loss, DMD precomputes a small number of multi-step diffusion sampling outcomes, each pairing a noise input with the image the original model generates from it. A simple regression loss that asks the one-step generator to reproduce these images from the same noise acts as an effective regularizer, matching the large-scale structure of the multi-step outputs and stabilizing training.
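The combination of the two losses can be sketched on a toy problem, under loudly stated assumptions: scalar "images", real data N(MU, S²), a hypothetical teacher that deterministically maps noise z to MU + S·z, closed-form scores in place of both diffusion models, a two-parameter generator `G(z) = b + a * z`, and plain squared error in place of the perceptual (LPIPS) regression loss reported in the DMD paper. Training starts from a deliberately poor initialization.

```python
import random

# Toy assumptions: scalar "images", real data ~ N(MU, S**2), and a hypothetical
# multi-step teacher that deterministically maps noise z to MU + S * z.
MU, S = 2.0, 0.5

def teacher_sample(z):
    """Stand-in for an expensive multi-step diffusion sampler (hypothetical)."""
    return MU + S * z

def train(lam=1.0, lr=0.1, steps=500, batch=64, num_pairs=16, seed=0):
    """Train G(z) = b + a * z with distribution matching plus lam * regression."""
    rng = random.Random(seed)
    # Precompute a small set of (noise, teacher output) pairs once, before training.
    noises = [rng.gauss(0.0, 1.0) for _ in range(num_pairs)]
    pairs = [(z, teacher_sample(z)) for z in noises]
    a, b = -1.0, 0.0  # deliberately poor initialization
    for _ in range(steps):
        g_a = g_b = 0.0
        # Distribution matching: score difference at noised generator samples.
        for _ in range(batch):
            z = rng.gauss(0.0, 1.0)
            sigma = rng.uniform(0.5, 3.0)
            x_t = b + a * z + sigma * rng.gauss(0.0, 1.0)
            s_fake = -(x_t - b) / (a**2 + sigma**2)   # score of noised N(b, a^2), closed form
            s_real = -(x_t - MU) / (S**2 + sigma**2)  # score of noised N(MU, S^2), closed form
            g_a += (s_fake - s_real) * z / batch      # dG/da = z
            g_b += (s_fake - s_real) / batch          # dG/db = 1
        # Regression: squared error to the precomputed teacher outputs
        # (a stand-in for the LPIPS loss reported in the DMD paper).
        for z, y in pairs:
            residual = (b + a * z) - y
            g_a += lam * 2.0 * residual * z / num_pairs
            g_b += lam * 2.0 * residual / num_pairs
        a -= lr * g_a
        b -= lr * g_b
    return a, b

print(train())         # close to (S, MU) = (0.5, 2.0)
print(train(lam=0.0))  # distribution matching alone: a ends up near -S instead
```

In this toy, distribution matching alone is satisfied equally by `a = S` and `a = -S`, since both give the same output distribution, so starting from `a = -1` it settles on the mirrored solution. The regression term ties the generator to the teacher's own noise-to-image mapping and selects `a ≈ S`.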

Conclusion:

Distribution Matching Distillation (DMD) represents a significant leap in image generation. Its ability to turn diffusion models into one-step image generators while largely preserving image quality has promising implications for the market. By reducing the computational cost of generating high-quality images, DMD could pave the way for widespread adoption in creative industries, gaming, and interactive applications, offering cost-effective solutions with strong performance. Businesses should monitor developments in DMD closely, as it has the potential to disrupt the image generation market.