- IF-COMP introduces a scalable approach for uncertainty estimation and calibration in deep learning.

- Developed by MIT, University of Toronto, and Vector Institute researchers.

- The method uses a temperature-scaled Boltzmann influence function to linearize the model.

- Focuses on improving calibration under distribution shifts.

- Demonstrates superior performance in uncertainty calibration, mislabel detection, and OOD detection tasks.

- Runs faster than traditional Bayesian and NML-based methods, including a 7 to 15 times speedup over ACNML.

- Sets new benchmarks in AUROC scores for OOD detection on CIFAR-10 and MNIST datasets.

Main AI News:

Deep neural networks need to do more than predict outcomes accurately: they also need to quantify how uncertain those predictions are. This matters most in high-stakes domains such as healthcare, medical imaging, and autonomous driving, where the reliability of model outputs directly affects decision-making and safety.

Traditional approaches to uncertainty estimation, often rooted in Bayesian principles, face significant hurdles when applied to modern deep learning models. They require defining prior distributions and sampling from posterior distributions, both of which are difficult at the complexity and scale of contemporary neural networks. As a result, scalable methods that keep models well calibrated under distribution shifts are in high demand.

IF-COMP is a method introduced by researchers from the Massachusetts Institute of Technology, University of Toronto, and Vector Institute for Artificial Intelligence. It offers a scalable approximation of the predictive normalized maximum likelihood (pNML) distribution, which is used both to calibrate model predictions and to measure complexity on labeled and unlabeled data.
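For readers unfamiliar with pNML, the sketch below shows only the final normalization step of the standard pNML definition: for each candidate label, take the probability assigned to that label by a model refit with the test point given that label, then normalize across labels. The function name and the input values are hypothetical, and the expensive refitting step (the part IF-COMP approximates) is assumed to have happened already.

```python
import numpy as np

def pnml_from_hindsight_probs(hindsight_probs):
    """Normalize per-label hindsight-optimal probabilities into a pNML distribution.

    hindsight_probs[y] is assumed to be the probability that a model, refit
    with the test input labeled y, assigns to label y. Producing these values
    is the costly part and is not shown here.
    """
    q = np.asarray(hindsight_probs, dtype=float)
    normalizer = q.sum()
    pnml = q / normalizer
    # The log of the normalizer serves as a per-example complexity (regret) measure.
    complexity = np.log(normalizer)
    return pnml, complexity

# Hypothetical three-class example: the model can fit any label fairly well,
# so the normalizer exceeds 1 and the complexity is positive.
pnml, complexity = pnml_from_hindsight_probs([0.9, 0.3, 0.3])
print(pnml, complexity)
```

A larger normalizer means the model could be made to fit many different labels for the same input, which is read as higher uncertainty about that input.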

IF-COMP achieves this by using a temperature-scaled Boltzmann influence function to linearize the model, which improves its handling of low-probability labels. By applying a proximal Bregman objective, IF-COMP mitigates model overconfidence and adjusts to additional data points while remaining computationally efficient, a significant advantage over existing methods.
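To make the role of temperature concrete, here is a minimal sketch of temperature scaling applied to a plain softmax (Boltzmann) distribution. It is not the IF-COMP influence function itself; it only illustrates how raising the temperature softens an overconfident distribution and gives low-probability labels more mass. The function name and logits are illustrative assumptions.

```python
import numpy as np

def softmax_with_temperature(logits, temperature=1.0):
    """Boltzmann (softmax) distribution over labels at a given temperature."""
    z = np.asarray(logits, dtype=float) / temperature
    z = z - z.max()  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

# Hypothetical logits for a confident three-class prediction
logits = [6.0, 1.0, 0.0]
sharp = softmax_with_temperature(logits, temperature=1.0)
soft = softmax_with_temperature(logits, temperature=4.0)
print(sharp)
print(soft)
```

At the higher temperature the top label keeps the largest share, but the two unlikely labels are no longer pushed to near-zero probability.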

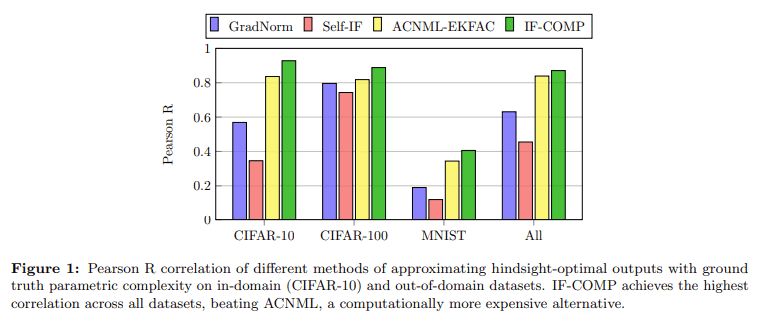

Experiments cover uncertainty calibration, mislabel detection, and out-of-distribution (OOD) detection. On the CIFAR-10 and CIFAR-100 datasets, IF-COMP consistently outperforms traditional Bayesian and NML-based methods in expected calibration error (ECE) and computational speed, running 7 to 15 times faster than ACNML.
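Since ECE is the headline calibration metric here, a minimal sketch of the usual equal-width binned estimator may help. The bin count of 10 and the function name are assumptions for illustration and are not taken from the IF-COMP experiments.

```python
import numpy as np

def expected_calibration_error(confidences, correct, n_bins=10):
    """Binned ECE: weighted average of |accuracy - confidence| per bin."""
    confidences = np.asarray(confidences, dtype=float)
    correct = np.asarray(correct, dtype=float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    # Right-closed bins, so a confidence of exactly 1.0 lands in the last bin.
    bin_ids = np.clip(np.digitize(confidences, edges[1:-1], right=True), 0, n_bins - 1)
    ece = 0.0
    for b in range(n_bins):
        mask = bin_ids == b
        if mask.any():
            gap = abs(correct[mask].mean() - confidences[mask].mean())
            ece += mask.mean() * gap  # weight by the fraction of samples in the bin
    return ece

# Hypothetical predictions: 90% confident but only 75% correct
ece = expected_calibration_error([0.9, 0.9, 0.9, 0.9], [1, 1, 1, 0])
print(ece)
```

A perfectly calibrated model has an ECE of zero; an overconfident model, as in the toy example, has confidence above accuracy in its occupied bins.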

In mislabel detection, IF-COMP achieves high AUROC scores, surpassing methods such as TracIn, EL2N, and GraNd. In OOD detection on the MNIST and CIFAR-10 datasets, it also sets new benchmarks in AUROC, indicating that it can accurately identify out-of-distribution data points.
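AUROC, the metric used for both detection tasks, can be read as the probability that a randomly chosen positive example (for instance, an OOD input) receives a higher score than a randomly chosen negative one. The sketch below computes it directly from that pairwise definition; the scores are made-up values standing in for any per-example uncertainty or complexity score.

```python
import numpy as np

def auroc(scores_positive, scores_negative):
    """AUROC as the fraction of (positive, negative) pairs ranked correctly.

    Ties count as half a correct pair. This is quadratic in the number of
    examples, which is fine for a small illustration.
    """
    pos = np.asarray(scores_positive, dtype=float)[:, None]
    neg = np.asarray(scores_negative, dtype=float)[None, :]
    wins = (pos > neg).sum()
    ties = (pos == neg).sum()
    return (wins + 0.5 * ties) / (pos.size * neg.size)

# Hypothetical scores: higher should mean "more likely out-of-distribution"
ood_scores = [2.1, 1.7, 0.9]
in_dist_scores = [0.2, 0.4, 1.0, 0.3]
value = auroc(ood_scores, in_dist_scores)
print(value)
```

A value of 0.5 corresponds to random ranking and 1.0 to perfect separation of the two groups.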

These findings indicate that IF-COMP delivers well-calibrated uncertainty estimates and detects anomalies effectively, which in turn supports the reliability and safety of deep learning applications across domains. It offers a scalable route to uncertainty estimation and calibration under distribution shifts, and with it to more robust and trustworthy AI-driven solutions.

Conclusion:

IF-COMP represents a significant advance in deep learning reliability. It offers a scalable solution to uncertainty estimation and calibration, improving the reliability of model predictions while reducing computational cost relative to earlier methods. The approach is relevant to AI-driven applications in industries such as healthcare and autonomous driving, where precise and well-calibrated predictions are paramount.