TL;DR:

- MIT researchers introduce “contextual pruning” for Mini-GPT development.

- Large language models (LLMs) face challenges of size, computational demands, and energy consumption.

- Model pruning and identifying “lottery tickets” in LLMs are existing optimization methods.

- Contextual pruning tailors pruning to specific domains, enhancing efficiency.

- Rigorous evaluation shows pruned Mini-GPTs maintain or improve performance.

- Contextual pruning holds promise for more versatile and sustainable LLMs.

Main AI News:

In recent strides within the realm of artificial intelligence, the optimization of large language models (LLMs) has emerged as a paramount concern. While these advanced AI models offer unparalleled prowess in natural language processing and comprehension, they come with notable caveats. The primary issues encompass their colossal dimensions, formidable computational demands, and substantial energy consumption. These factors, in turn, render LLMs exorbitantly expensive to deploy and curtail their accessibility and pragmatic utility, particularly for entities lacking abundant resources. Consequently, there exists a burgeoning imperative for methodologies that streamline these models, enhancing their efficiency without compromising their performance.

The existing landscape of LLM optimization encompasses a multitude of techniques, with model pruning taking center stage as a prominent method. Model pruning revolves around the reduction of neural network size by excising non-essential weights. The underlying concept is to distill the model to its fundamental constituents, thereby diminishing complexity and operational requisites. Model pruning serves as an antidote to the exorbitant costs and latency issues entailed by running unwieldy models.

Furthermore, the identification of trainable subnetworks within expansive models, colloquially referred to as ‘lottery tickets,’ presents a viable avenue for achieving commensurate accuracy while significantly curtailing the model’s footprint.

The innovative proposition by MIT researchers introduces a novel technique christened ‘contextual pruning,’ designed to facilitate the creation of efficient Mini-GPTs. This method tailors the pruning process to specific domains, including but not limited to law, healthcare, and finance. By methodically analyzing and selectively discarding less pivotal weights concerning particular domains, this approach aspires to uphold or augment the model’s performance, while drastically reducing its dimensions and resource prerequisites. This targeted pruning strategy constitutes a monumental stride toward endowing LLMs with greater versatility and sustainability.

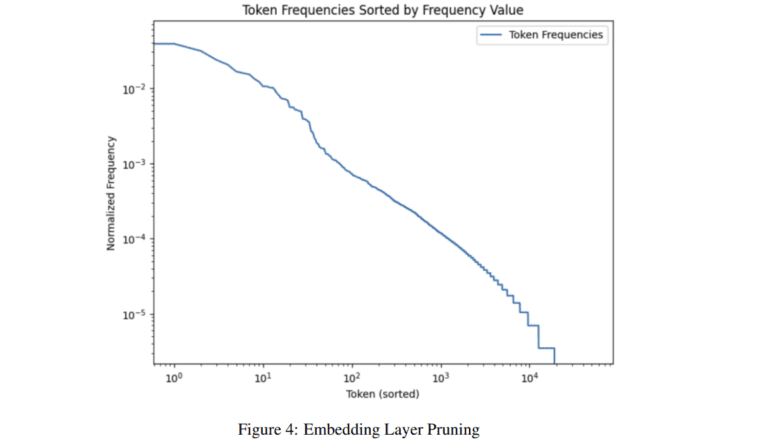

The methodology underpinning contextual pruning entails a meticulous examination and pruning of linear layers, activation layers, and embedding layers within LLMs. The research team conducted extensive investigations to pinpoint less critical weights for preserving performance in diverse domains. This undertaking featured a multifaceted pruning regimen, targeting various model components to optimize efficiency.

The performance evaluation of Mini-GPTs following contextual pruning underwent rigorous scrutiny, incorporating metrics such as perplexity and multiple-choice question assessments. The promising outcomes evinced that, by and large, the pruned models either maintained or enhanced their performance across various datasets post-pruning and fine-tuning. These findings underscored that the models conserved their core competencies despite the reduction in size and intricacy. In select instances, the pruned models even surpassed their unpruned counterparts in specific tasks, thereby accentuating the efficacy of contextual pruning.

Conclusion:

MIT’s innovative approach of contextual pruning for Mini-GPT development addresses critical challenges in the AI market, promising more efficient and versatile large language models. This breakthrough has the potential to significantly reduce the cost and resource demands associated with LLMs, making them more accessible and impactful for businesses across various industries.