TL;DR:

- Vision foundational models are fundamental in computer vision, providing the basis for specific models.

- Vision models extended to video data are crucial for various AI applications.

- Kyung Hee University’s research enhances SAM, a vision model, by addressing SegAny and SegEvery challenges.

- SAM comprises a ViT-based image encoder and a prompt-guided mask decoder.

- Object-aware box prompts improve SegEvery’s efficiency, reducing the need for mask predictions.

- The research focuses on determining object presence in specific image regions.

Main AI News:

Vision foundational models play a pivotal role in the realm of computer vision, serving as the fundamental building blocks for more sophisticated and application-specific models. These foundational models serve as the starting point, providing researchers and developers with a robust foundation to address complex challenges and optimize for specific applications.

Extending the capabilities of vision models to handle video data is essential for tasks like action recognition, video captioning, and anomaly detection in surveillance footage. Their adaptability and effectiveness in addressing a wide array of computer vision tasks make them indispensable in the world of modern AI applications.

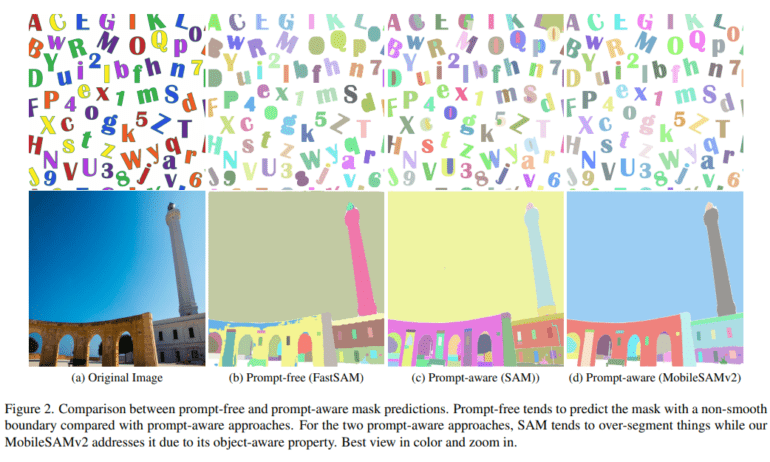

In a groundbreaking development, researchers at Kyung Hee University have successfully addressed key challenges in one such vision model known as SAM, or the Segment Anything Model. Their innovative approach tackles two critical image segmentation challenges: segmenting anything (SegAny) and segmenting everything (SegEvery). As the name implies, SegAny focuses on using specific prompts to segment a single item of interest within an image, while SegEvery takes on the formidable task of segmenting all elements within an image.

At its core, SAM comprises a ViT-based image encoder paired with a prompt-guided mask decoder. The mask decoder is instrumental in generating fine-grained masks, leveraging a two-way attention mechanism that facilitates efficient interaction between image encoders. SegEvery, unlike SegAny, does not rely on prompts for segmentation. Instead, it directly generates images.

One of the key challenges identified by the researchers in SAM’s SegEvery component was its relatively slower performance. To address this, they introduced object-aware box prompts as an alternative to the default grid-search point prompts. This strategic shift led to a substantial increase in image generation speed. The researchers have demonstrated that the adoption of object-aware prompts aligns seamlessly with the distilled image encoders in MobileSAM, paving the way for a unified framework that enhances the efficiency of both SegAny and SegEvery tasks.

Central to their research is the task of determining whether an object exists within a specific region of an image. While traditional object detection methods address this issue, they often generate overlapping bounding boxes. To mitigate this challenge, pre-filtering becomes necessary to ensure the validity of prompts and eliminate overlap.

The crux of the matter with point prompts lies in their need to forecast three output masks, a requirement aimed at tackling ambiguity. This, in turn, necessitates further mask filtering. In stark contrast, box prompts distinguish themselves by providing more detailed information, resulting in superior-quality masks with reduced ambiguity. This distinctive feature reduces the requirement to predict three masks, making it a highly advantageous choice for SegEvery due to its enhanced efficiency.

Conclusion:

The introduction of MobileSAMv2, with its efficient image segmentation capabilities, signifies a significant advancement in computer vision. This innovation will likely drive increased adoption of AI-driven solutions in various industries, offering improved accuracy and speed in tasks requiring image segmentation. This development will further solidify the role of computer vision in shaping the future of AI applications.