TL;DR:

- MOREH’s ‘MoMo-70B’ LLM ranks first globally on Hugging Face’s ‘Open LLM Leaderboard’ with a score of 77.29 points.

- This achievement comes just three months after development of the model began.

- MOREH’s success is attributed to its proprietary AI platform, ‘MoAI,’ which enables efficient large-scale AI model development.

- The company offers AI cloud solutions compatible with various GPU and NPU resources, including AMD and NVIDIA.

- Founded in 2020, MOREH raised a $22 million Series B round from investors including KT and AMD in October last year.

Main AI News:

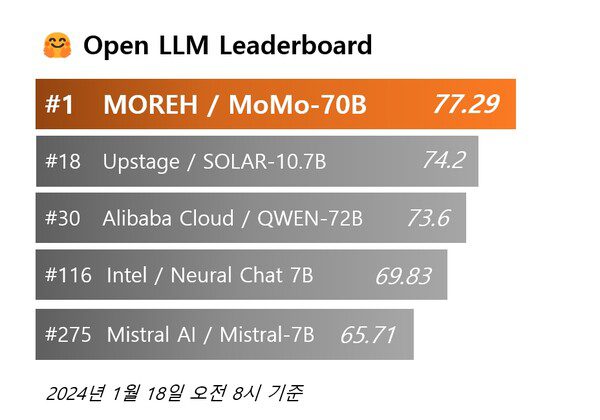

In the highly competitive field of artificial intelligence, MOREH, a provider of AI infrastructure solutions, has taken a leading position. Its flagship Large Language Model (LLM), ‘MoMo-70B,’ has claimed first place on the ‘Open LLM Leaderboard’ with a score of 77.29 points. The leaderboard is hosted by Hugging Face, one of the world’s leading machine learning platforms.

The ‘Open LLM Leaderboard’ is one of the most widely followed benchmarks for AI models worldwide and has drawn more than 3,400 submitted models. Each model is evaluated on six benchmarks covering areas such as reasoning, common-sense knowledge, and language understanding.

‘MoMo-70B’ rose quickly. Just three months after development began, it reached the top of the leaderboard on January 17th and has held first place ever since.

What explains MOREH’s rapid rise in such a competitive arena? The company credits ‘MoAI,’ an AI platform it developed and owns. In LLM development, fast progress depends on an efficient way to train models. ‘MoAI’ addresses this by using parallel processing techniques to streamline the development and training of large-scale AI models.

Lim Junghwan, head of MOREH’s AI Group, highlighted the significance of the achievement: “This milestone is a testament to MOREH’s exceptional prowess in AI model development and the technological prowess of our proprietary AI platform, ‘MoAI’.” He added, “Through ‘MoAI,’ we have succeeded in dramatically reducing both the time and cost associated with large-scale AI model development.”

MOREH, a notable company in the AI semiconductor ecosystem, provides a suite of AI cloud solutions for enterprises, including AI infrastructure software. A key differentiator is hardware flexibility: its solutions work with a range of GPU and NPU resources, including AMD hardware in addition to its established support for NVIDIA. This broader compatibility gives AI operators more cost-efficient hardware options and can save them substantial time.

MOREH was founded in 2020 by CEO Jo Gangwon and a group of graduates from Seoul National University’s Manycore Programming Research Group, and it has grown quickly since. In October of last year, it raised a $22 million Series B round, with backing from major industry players including KT and AMD.

Conclusion:

MOREH’s rapid rise to the top of the global leaderboard with ‘MoMo-70B’ demonstrates its strength in AI model development and the importance of its ‘MoAI’ platform. By reducing the time and cost of building large-scale AI models and giving AI operators a wider range of hardware options, this efficient approach could disrupt the market and strengthen MOREH’s position as a major player in the AI ecosystem.