TL;DR:

- Large Language Models (LLMs) have made significant strides in NLP, leading to the development of Multimodal Large Language Models (MLLMs) that combine vision and language capabilities.

- MovieChat is a framework that integrates vision models with LLMs for extended video comprehension tasks, addressing the challenges posed by lengthy movies.

- The proposed memory system, based on the Atkinson-Shiffrin memory model, reduces computational complexity and memory cost while better capturing long-term temporal connections.

- MovieChat outperforms previous algorithms and offers state-of-the-art performance in extended video comprehension.

- Potential applications include content analysis, video recommendation systems, and video monitoring.

Main AI News:

Large Language Models (LLMs) have advanced remarkably in Natural Language Processing (NLP). The logical next step is to extend LLMs with multimodal capabilities, turning them into Multimodal Large Language Models (MLLMs) that can perceive and interpret more than text. This step is widely seen as important on the path toward Artificial General Intelligence (AGI). MLLMs have shown striking emergent abilities across diverse multimodal tasks, including perception (e.g., existence, count, location, OCR), commonsense reasoning, and code reasoning. Compared with conventional LLMs and task-specific models, MLLMs perceive the environment in a more human-like way, offer a more user-friendly interface, and can solve a broader range of tasks.

Integrating vision models with LLMs has been an active line of work in video understanding. However, prior attempts have focused mostly on short videos, leaving open the challenges posed by lengthy movies lasting over a minute. Researchers from Zhejiang University, the University of Washington, Microsoft Research Asia, and Hong Kong University have collaborated to close this gap with a framework named MovieChat, designed specifically to interpret lengthy videos by combining vision models with LLMs.

The researchers identify three obstacles to comprehensive understanding of long videos: computational complexity, memory cost, and long-term temporal connections. To address them, they propose a memory system based on the Atkinson-Shiffrin memory model. It comprises a rapidly updated short-term memory and a compact, long-lasting long-term memory. This mechanism is key to reducing computational complexity and memory cost while strengthening long-term temporal connections.
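
The two-tier memory described above can be illustrated with a minimal Python sketch. It is not MovieChat's implementation: the names `VideoMemory`, `consolidate`, `short_capacity`, and `merge_to`, the one-vector-per-frame representation, the flush-after-consolidation policy, and the greedy rule of averaging the most similar adjacent pair are all assumptions chosen for illustration. What it shows is the general idea: a small, fast-filling short-term buffer is periodically compressed into a slowly growing long-term store, so the model attends over far fewer vectors than the raw frame count.

```python
import math
from dataclasses import dataclass, field

Vector = list[float]  # assumption: one feature vector per frame


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def consolidate(frames: list[Vector], target_len: int) -> list[Vector]:
    """Hypothetical consolidation: repeatedly average the most similar
    adjacent pair of frames until only target_len vectors remain."""
    if target_len < 1:
        raise ValueError("target_len must be at least 1")
    frames = [list(f) for f in frames]  # copy so the caller's buffer is untouched
    while len(frames) > target_len:
        sims = [cosine(frames[i], frames[i + 1]) for i in range(len(frames) - 1)]
        i = max(range(len(sims)), key=sims.__getitem__)  # most redundant neighbours
        merged = [(x + y) / 2 for x, y in zip(frames[i], frames[i + 1])]
        frames[i:i + 2] = [merged]  # replace the pair with its plain mean
    return frames


@dataclass
class VideoMemory:
    short_capacity: int = 8  # hypothetical size of the short-term buffer
    merge_to: int = 2        # hypothetical number of vectors kept per consolidation
    short_term: list[Vector] = field(default_factory=list)
    long_term: list[Vector] = field(default_factory=list)

    def __post_init__(self) -> None:
        if not 1 <= self.merge_to <= self.short_capacity:
            raise ValueError("need 1 <= merge_to <= short_capacity")

    def add_frame(self, feature: Vector) -> None:
        """Append a frame; when the short-term buffer fills, compress it into long-term memory."""
        self.short_term.append(list(feature))
        if len(self.short_term) >= self.short_capacity:
            self.long_term.extend(consolidate(self.short_term, self.merge_to))
            self.short_term.clear()  # assumption: the buffer is flushed after consolidation

    def context(self) -> list[Vector]:
        """Vectors an LLM would attend over: compact history plus recent frames."""
        return self.long_term + self.short_term


if __name__ == "__main__":
    mem = VideoMemory(short_capacity=8, merge_to=2)
    for t in range(100):
        mem.add_frame([math.cos(t / 10), math.sin(t / 10)])  # toy 2-D frame features
    print(len(mem.long_term), len(mem.short_term), len(mem.context()))
```

With a buffer of 8 frames compressed to 2, feeding 100 frames leaves 24 long-term vectors plus 4 recent frames, 28 in total instead of 100. Averaging neighbours is the simplest merge rule; weighting each merge by how many frames a vector already represents would be a natural refinement.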

The MovieChat framework enables extended video comprehension tasks that were previously unexplored. The researchers conducted quantitative evaluations and case studies to assess both the system's understanding capability and its inference cost. Their memory mechanism handles long-term temporal relationships while keeping memory usage and computational complexity in check, allowing the model to store and retrieve relevant information over long stretches of video and significantly improving comprehension.

A notable strength of MovieChat is its ability to handle lengthy films. Using a memory process inspired by the Atkinson-Shiffrin model, with the memory represented as tokens in a Transformer, the system achieves state-of-the-art performance in extended video comprehension. This advance has potential applications across industries, including content analysis, video recommendation systems, and video monitoring.

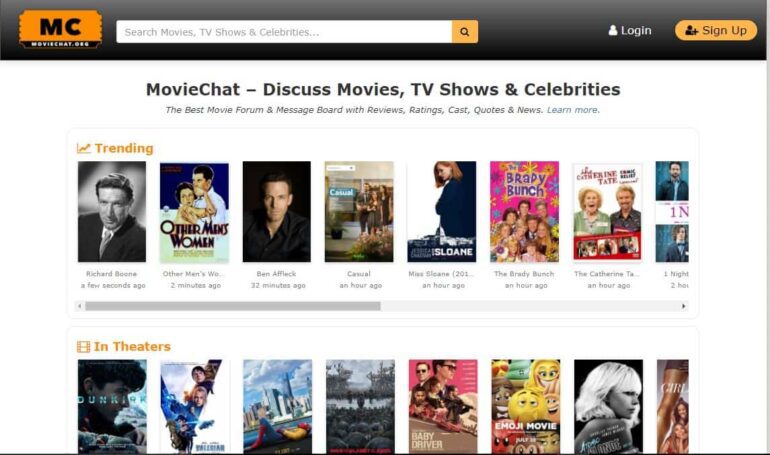

Looking ahead, future studies might strengthen the memory system further and integrate additional modalities, such as audio, to improve video comprehension. The MovieChat research opens up new possibilities for applications that require a comprehensive understanding of visual data, making it a promising tool for businesses seeking cutting-edge solutions. For more information and practical demonstrations of MovieChat's capabilities, the project website features multiple demos of the system.

Conclusion:

MovieChat represents a groundbreaking development in the video understanding market. By combining vision models with Large Language Models (LLMs), it enables businesses to comprehend lengthy videos effectively. The proposed memory mechanism addresses critical challenges and offers state-of-the-art performance in extended video comprehension. This innovation has significant potential in various industries, presenting exciting opportunities for content analysis, video recommendation systems, and video monitoring applications. Companies leveraging MovieChat can gain a competitive advantage in understanding and analyzing visual data, driving improved decision-making and customer engagement.