TL;DR:

- MVControl revolutionizes text-driven multi-view image generation and 3D content creation.

- Score distillation optimization (SDS) techniques enable 3D knowledge extraction from pre-trained text-to-image models.

- Challenges in ensuring view consistency in 3D content are addressed through innovative methods.

- MVControl integrates a control network for precise text-to-multi-view image control.

- Edge maps and other input scenarios are leveraged to enhance versatility.

- The hybrid diffusion prior enhances the production of high-fidelity, fine-grain multi-view images and 3D content.

Main AI News:

In the realm of 2D image production, recent strides have been nothing short of spectacular. With the advent of input text prompts, the creation of high-fidelity graphics has become a walk in the park. However, the transition to the text-to-3D domain has been a challenging endeavor, primarily due to the scarcity of 3D training data. Enter the world of diffusion models and differentiable 3D representations, where the cutting-edge optimization technique known as score distillation optimization (SDS) has emerged as a beacon of hope. Rather than starting from scratch with copious amounts of 3D data, SDS-based methods aim to distill 3D knowledge from pre-trained text-to-image generative models, delivering impressive results. DreamFusion, for instance, is a shining example of innovation in 3D asset creation.

Over the past year, methodologies have undergone rapid evolution, aligning with the 2D-to-3D distillation paradigm. A slew of studies has emerged, all dedicated to enhancing generation quality. These efforts involve multiple optimization stages, concurrent refinement of diffusion and 3D representation, meticulous fine-tuning of the score distillation algorithm, and an overall enhancement of pipeline specifics. While these approaches excel in texture rendering, ensuring view consistency in the generated 3D content remains a formidable challenge, mainly due to the lack of dependency on the 2D diffusion prior. Consequently, considerable efforts have been invested in incorporating multi-view information into pre-trained diffusion models.

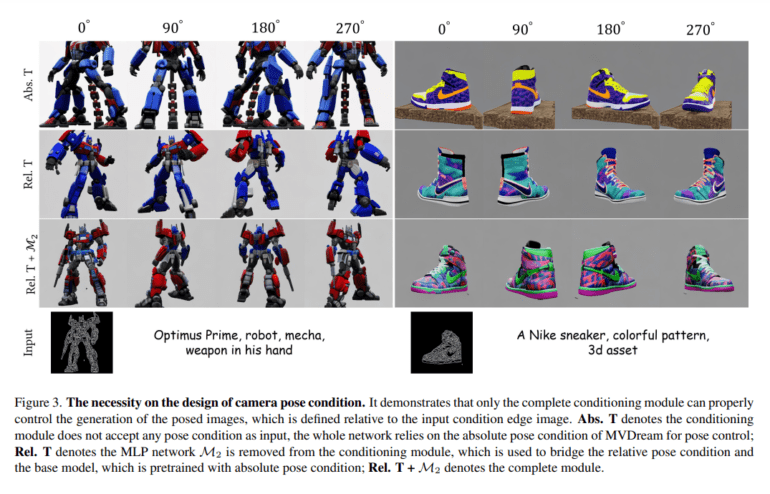

The foundation model is seamlessly fused with a control network, paving the way for controlled text-to-multi-view image production. Interestingly, the research team focused solely on training the control network, leaving the weights of MVDream untouched. Experimental findings revealed that the relative pose condition, as opposed to the absolute world coordinate system, proved more effective in controlling text-to-multi-view generation, a surprising revelation considering MVDream’s pretrained network description. Furthermore, achieving view consistency necessitates the direct integration of 2D ControlNet’s control network with the base model, given its inherent suitability for single-image creation and adaptability to multi-view scenarios.

To address these challenges, a collaborative effort from Zhejiang University, Westlake University, and Tongji University gave rise to a unique conditioning technique based on the original ControlNet architecture. This approach, while straightforward, has proven to be remarkably successful in enabling controlled text-to-multi-view generation. Leveraging a combination of the extensive 2D dataset LAION and the 3D dataset Objaverse, the team trained MVControl. In a noteworthy exploration, the researchers considered the use of edge maps as conditional inputs, showcasing the network’s versatility in accommodating various input scenarios, including depth maps and sketch images. Once trained, MVControl can provide 3D priors for controlled text-to-3D asset production. This process follows a coarse-to-fine generation methodology, with texture optimization occurring at the fine step, once a satisfactory geometry is achieved in the coarse stage. Rigorous testing has affirmed that this innovative approach can harness input condition images and written descriptions to produce high-fidelity, fine-grain controlled multi-view images and 3D content.

Conclusion:

MVControl represents a game-changing development in the field of AI-driven multi-view imaging and 3D content creation. Its ability to bridge the gap between text prompts and high-quality, controllable visuals opens up exciting opportunities in industries such as entertainment, design, and marketing. As businesses seek innovative ways to engage audiences and create compelling content, MVControl’s capabilities are poised to drive market growth and creativity to new heights.