- LLMs show promise in programming and robotics but struggle with complex reasoning.

- MIT CSAIL researchers introduce LILO, Ada, and LGA frameworks.

- LILO enhances code synthesis through natural language abstractions.

- Ada improves AI task planning using language-based action libraries.

- LGA facilitates robotic interpretation of surroundings via language-guided abstraction.

Main AI News:

Large language models (LLMs) are proving useful for a wide range of tasks, from programming to robotics. On complex reasoning problems, however, they still lag behind humans. A key reason is that these systems struggle to learn new concepts and to form the high-level abstractions that sophisticated tasks require.

Researchers at the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) have shown how natural language can help close this gap. Their work, to be presented at the International Conference on Learning Representations, demonstrates that everyday language is a rich source of context that helps LLMs build better representations for tasks like code synthesis, AI planning, and robotic navigation.

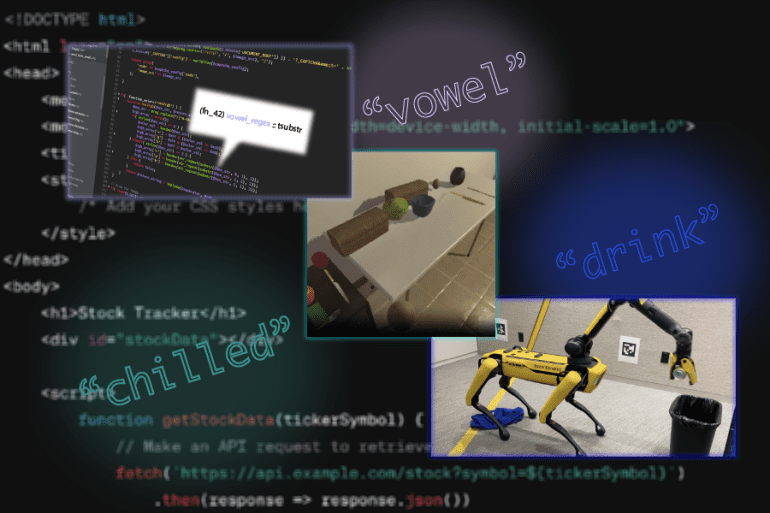

The research introduces three frameworks, each tailored to a specific domain: LILO (library induction from language observations), Ada (action domain acquisition), and LGA (language-guided abstraction). All three are neurosymbolic methods, which combine neural networks with logical components.

LILO: Streamlining Code Synthesis

While LLMs excel at generating code for small, self-contained tasks, they cannot yet architect entire software libraries the way human engineers do. LILO addresses this limitation by pairing a standard LLM with Stitch, an algorithm that identifies recurring patterns in code and refactors them into concise, reusable abstractions. Its emphasis on natural language also lets LILO handle tasks that call for common-sense knowledge, such as manipulating text and drawing graphics.
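To make "refactoring code into reusable abstractions" concrete, here is a minimal Python sketch. It is not Stitch: Stitch searches for abstractions that take arguments, while this toy only finds exact repeated subtrees in a small corpus of programs written as nested tuples, picks the one whose extraction saves the most nodes, and replaces every occurrence with a name. The program representation, the savings formula, and the name `upper_first` are all assumptions for illustration, and the name is simply hard-coded here.

```python
from collections import Counter

# Toy program representation (an assumption): a program is a nested tuple
# (operator, arg1, arg2, ...) and leaves are plain strings.

def subtrees(p):
    """Yield every compound subtree of a program, including the program itself."""
    if isinstance(p, tuple):
        yield p
        for child in p[1:]:
            yield from subtrees(child)

def size(p):
    """Count nodes: one per operator application plus one per leaf argument."""
    if isinstance(p, tuple):
        return 1 + sum(size(c) for c in p[1:])
    return 1

def best_abstraction(corpus):
    """Return the repeated subtree whose extraction shrinks the corpus most, or None."""
    counts = Counter(t for prog in corpus for t in subtrees(prog))

    def savings(t):
        # Each use collapses size(t) nodes into one name; defining it costs size(t).
        return counts[t] * (size(t) - 1) - size(t)

    candidates = [t for t, n in counts.items() if n >= 2]
    if not candidates:
        return None
    best = max(candidates, key=savings)
    return best if savings(best) > 0 else None

def rewrite(p, target, name):
    """Replace every occurrence of `target` inside `p` with the abstraction's name."""
    if p == target:
        return name
    if isinstance(p, tuple):
        return (p[0],) + tuple(rewrite(c, target, name) for c in p[1:])
    return p

corpus = [
    ("concat", ("upper", ("first", "s")), "t"),
    ("concat", "t", ("upper", ("first", "s"))),
    ("length", ("upper", ("first", "s"))),
]

abstraction = best_abstraction(corpus)
library = {"upper_first": abstraction}  # hypothetical, hard-coded name
refactored = [rewrite(p, abstraction, "upper_first") for p in corpus]
print(library)
print(refactored)
```

Running it prints a one-entry library and the three refactored programs, each now referring to `upper_first` instead of repeating the fragment.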

According to Gabe Grand SM ’23, lead author of the LILO research, giving abstractions natural language names and documentation makes the resulting code more interpretable, which helps human programmers understand it and improves the AI system’s performance.

Ada: Advancing AI Task Planning

Named after Ada Lovelace, widely regarded as the world’s first programmer, the Ada framework focuses on automating multi-step tasks in virtual environments. Drawing on natural language descriptions, Ada builds libraries of action abstractions that help AI agents reason through complex scenarios. Paired with GPT-4, Ada outperformed baseline models on tasks such as simulated kitchen chores and games, achieving substantially higher accuracy.
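A library of action abstractions can be pictured as a set of symbolic operators, each with preconditions and effects, that a planner chains together. The sketch below assumes a STRIPS-style representation and a plain breadth-first planner; the kitchen actions are hypothetical examples of what might be derived from language descriptions, and neither the format nor the search is meant to be Ada’s actual operator definition or planning algorithm.

```python
from collections import deque
from dataclasses import dataclass

@dataclass(frozen=True)
class Action:
    """A STRIPS-style operator: preconditions plus add and delete effects."""
    name: str
    pre: frozenset
    add: frozenset
    delete: frozenset

    def applicable(self, state):
        return self.pre <= state

    def apply(self, state):
        return (state - self.delete) | self.add

def make_action(name, pre=(), add=(), delete=()):
    return Action(name, frozenset(pre), frozenset(add), frozenset(delete))

def plan(start, goal, library):
    """Breadth-first search for the shortest action sequence that makes every goal fact true."""
    start = frozenset(start)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, steps = frontier.popleft()
        if set(goal) <= state:
            return steps
        for action in library:
            if action.applicable(state):
                nxt = action.apply(state)
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [action.name]))
    return None  # goal unreachable with this library

# Hypothetical action library, e.g. as derived from descriptions of kitchen chores.
library = [
    make_action("open_fridge", pre={"fridge_closed"},
                add={"fridge_open"}, delete={"fridge_closed"}),
    make_action("take_milk", pre={"fridge_open", "hand_empty"},
                add={"holding_milk"}, delete={"hand_empty"}),
    make_action("pour_milk", pre={"holding_milk", "cup_on_table"},
                add={"cup_full"}),
]

start = {"fridge_closed", "hand_empty", "cup_on_table"}
print(plan(start, {"cup_full"}, library))
```

The planner returns `['open_fridge', 'take_milk', 'pour_milk']`: once the actions are expressed as reusable abstractions, a multi-step chore reduces to searching over short sequences of them.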

Lio Wong, a lead researcher on Ada, sees potential for real-world applications, such as Ada helping with household tasks and coordinating multiple robots. The team plans to incorporate more powerful language models in future versions to further improve planning.

LGA: Facilitating Robotic Interpretation

In unstructured environments, robots need a nuanced understanding of their surroundings to perform tasks well. LGA, led by Andi Peng SM ’23, addresses this need by using natural language to guide the formation of abstractions: it translates a task description into the elements of the environment that are essential for that task, which lets robots form effective plans with more human-like reasoning. In experiments, LGA helped robots carry out tasks such as picking up fruit and manipulating objects, suggesting it could be useful across a range of applications.
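One way to picture language-guided abstraction is as a filter that keeps only the parts of a scene that a task description makes relevant. In this minimal sketch, a hard-coded keyword table (`RELEVANCE`, a hypothetical stand-in for the language model) maps task words to object categories, and `abstract_state` drops every other object. The scene format, object names, and coordinates are likewise illustrative assumptions.

```python
import re

# Hypothetical stand-in for a language model: task words -> relevant object categories.
RELEVANCE = {
    "fruit": {"apple", "banana", "orange"},
    "recycle": {"can", "bottle", "bin"},
}

def relevant_categories(task):
    """Collect the object categories that any word in the task description points to."""
    categories = set()
    for word in re.findall(r"[a-z]+", task.lower()):
        categories |= RELEVANCE.get(word, set())
    return categories

def abstract_state(scene, task):
    """Keep only objects whose category (the prefix before '_') matters for the task."""
    keep = relevant_categories(task)
    return {obj: pos for obj, pos in scene.items() if obj.split("_")[0] in keep}

# Scene: object id -> (x, y) position on a table (illustrative values).
scene = {
    "apple_1": (0.2, 0.5),
    "mug_1": (0.4, 0.1),
    "banana_1": (0.9, 0.3),
    "laptop_1": (0.5, 0.5),
}

print(abstract_state(scene, "Pick up the fruit."))
```

For "Pick up the fruit." only the apple and banana survive, so any downstream planner or policy works with two objects instead of four.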

Peng stresses that making robots genuinely useful depends on refining the data they work with, and sees LGA as a way to impart crucial world knowledge to machines.

A Promising Future for AI

Assistant professor Robert Hawkins describes these neurosymbolic methods as a promising new frontier in AI because they make it possible to rapidly acquire libraries of interpretable code. By harnessing natural language, the frameworks point toward more human-like AI models that can tackle complex tasks accurately and efficiently.

Conclusion:

Integrating natural language into AI frameworks is a significant advance, improving performance in programming, planning, and robotics. It opens the door to more sophisticated applications across industries and promises greater efficiency and productivity in AI-driven tasks. Companies that invest in these technologies could gain a competitive edge by putting natural language processing to work.