TL;DR:

- Over 2,000 individuals gathered in Las Vegas to test vulnerabilities in advanced generative AIs.

- Generative AIs create diverse content but face misuse and bias issues.

- Ethical hackers aided developers in identifying vulnerabilities during the Generative Red Team Challenge at DEFCON.

- Top participants exposed vulnerabilities, helping AI developers enhance system security.

- Ongoing efforts involve red teaming, transparency, and incentivizing third-party detection.

- Despite challenges, responsible AI development seeks to balance innovation with safeguards.

Main AI News:

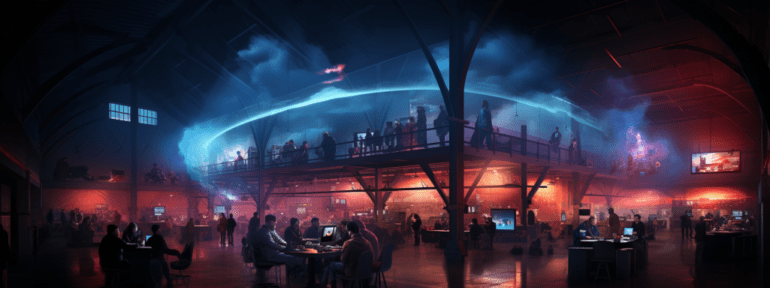

More than 2,000 people recently convened in Las Vegas, not to revel in the glitz and glamour of the city, but to try to outwit some of the most advanced artificial intelligences (AIs) in existence. The event, titled the “Generative Red Team (GRT) Challenge,” had a distinctive objective: to exploit vulnerabilities in generative AIs so that these systems can be fortified against potential harm.

Generative AIs, software systems that produce original content in response to user prompts, have surged in popularity. From crafting intricate images and videos to generating text and code, these AIs have spread into many domains, demonstrating their transformative potential. However, their rapid ascent has been accompanied by a surge in concerns. Incidents of misuse, such as the creation of phishing emails and deceptive audio, have raised red flags. Furthermore, bias and skewed content stemming from an AI’s training data remain a critical challenge.

As the generative AI landscape evolves, the question arises: how can we harness their capabilities while minimizing their potential for adverse consequences? The answer might lie in an unexpected place – the world of ethical hackers.

Acknowledging that developers alone cannot fully comprehend and address all vulnerabilities within generative AIs, experts have sought assistance from the hacking community. A watershed moment occurred when the Biden administration initiated discussions with industry leaders with a focus on fostering “responsible AI innovation.” This pursuit of AI that serves the greater good, upholds security, and safeguards society culminated in a significant milestone – the Generative Red Team Challenge.

The event, held as part of the annual DEFCON conference, invited participants to uncover weaknesses in generative AIs by exploiting loopholes in text-generating AIs from prominent developers. The challenges spanned diverse categories, and each carried a point value reflecting its complexity. Armed with secured tools, participants worked against the clock through an array of prompts, using AIs developed by Anthropic, Cohere, Google, Hugging Face, Meta, NVIDIA, OpenAI, and Stability AI.

Over the weekend, approximately 2,200 people competed. The top performers, the four individuals with the most points, were rewarded with an NVIDIA RTX A6000 GPU, a high-end workstation graphics card valued at around $4,650.

This event was not merely a display of technological might; it marked a new phase for the AI community. By identifying vulnerabilities, participants helped make these systems more robust. However, the exact nature of the detected vulnerabilities will remain undisclosed until February. This delay gives developers a window of opportunity to rectify these issues, ensuring a more secure future for generative AIs.

In the aftermath of DEFCON’s success, a trail of insights and challenges emerges. The journey toward responsible AI involves regular red teaming exercises focused on areas such as bias identification, potential misuse, and national security threats. Embracing these principles, participants in these efforts have committed to disclosing when content is AI-generated, sharing information across the industry, and incentivizing third parties to find previously undisclosed AI vulnerabilities.

Despite these proactive measures, the road ahead is intricate. The march toward perfect AI systems devoid of flaws is an arduous one, mirroring the perpetual battle against security vulnerabilities in consumer devices. The inevitability of new challenges arising as more people integrate generative AIs into their lives underscores the need for continuous updates and vigilance.

As we tread this path, a dual challenge emerges: accepting that, at times, AI may act in unintended ways, while urging leaders to uphold the principles of responsible AI. As ethical hackers and developers join forces, supported by both voluntary commitments and legislative measures, the world of generative AIs stands on the cusp of a major transformation, poised to yield groundbreaking solutions while navigating the ever-evolving landscape of technological innovation.

Conclusion:

The DEFCON Generative Red Team Challenge exemplifies collaboration between ethical hackers and AI developers to strengthen generative AI systems. The event’s success underscores the importance of ongoing security measures in an evolving AI landscape. As the market continues to embrace generative AIs, integrating robust security practices will be crucial to maintaining consumer trust, fostering innovation, and ensuring responsible AI implementation.