TL;DR:

- Integration of AI into software is revolutionary but poses challenges.

- Software engineers face a steep learning curve when embedding AI.

- Large language models (LLMs) are used to create conversational agents.

- ‘AI copilots’ enhance user interactions by refining LLM responses.

- Balancing context and constraints is crucial in AI copilot development.

- Continuous adjustments and fine-tuning are required for optimal performance.

Main AI News:

The integration of artificial intelligence into software products represents a monumental shift in the technology landscape. As businesses rush to incorporate cutting-edge AI functionalities, the concept of ‘product copilots’ has gained significant momentum. These innovative tools empower users to engage with software naturally, resulting in a markedly improved user experience. Yet, this transformative journey brings with it a unique set of challenges for software engineers, many of whom are navigating the complexities of AI integration for the first time. Embedding AI into software products is a multifaceted process that necessitates a reevaluation of established software engineering tools and methodologies.

The advent of AI in software products presents a complex dilemma. Software engineers venturing into uncharted AI territory find themselves faced with a steep learning curve. The principal obstacle lies in embedding AI effectively enough to ensure optimal functionality and user-centric reliability. This undertaking is made even more formidable by the lack of standardized tools and proven techniques for AI integration, prompting a pressing need for innovative solutions.

One prevailing approach to infusing AI into software revolves around harnessing large language models (LLMs) to create conversational agents. These agents are designed to comprehend and respond to user inputs in natural language, thereby facilitating seamless interactions. However, developing the prompts that steer these agents, a practice often referred to as ‘prompt engineering,’ is fraught with intricacies. It demands a substantial allocation of both time and resources and is predominantly characterized by a trial-and-error methodology.

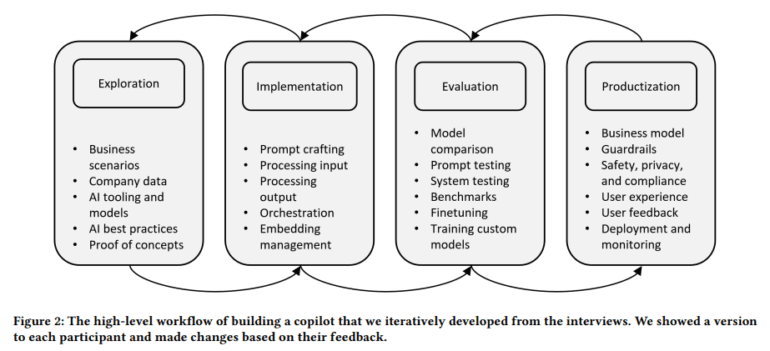

Pioneered by researchers from Microsoft and GitHub, the concept of ‘AI copilots’ has emerged as a beacon of progress. These sophisticated software systems are engineered to elevate user interactions with applications. AI copilots operate by translating user actions into prompts for LLMs and subsequently refining the model’s output into formats that are easily understandable to users. This approach involves a strategic orchestration of various prompts and responses, with the overarching goal of rendering interactions more intuitive and effective.
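To make that round trip concrete, here is a minimal sketch of the flow described above: a user action becomes a prompt, the prompt goes to a model, and the raw reply is cleaned up for display. Everything in it is a hypothetical illustration rather than the researchers' implementation: the `UserAction` type, the function names, and `fake_llm`, which stands in for a real model call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class UserAction:
    """A hypothetical record of something the user did in the application."""
    kind: str      # e.g. "select_text"
    payload: str   # e.g. the text the user selected


def action_to_prompt(action: UserAction) -> str:
    """Translate a user action into a natural-language prompt for the model."""
    if action.kind == "select_text":
        return f"Summarize the following text in one sentence:\n{action.payload}"
    return f"The user performed '{action.kind}'. Suggest a helpful next step."


def refine_output(raw: str) -> str:
    """Post-process raw model text into something presentable to the user."""
    cleaned = " ".join(raw.split())  # collapse stray whitespace and newlines
    return cleaned if cleaned.endswith(".") else cleaned + "."


def run_copilot(action: UserAction, llm: Callable[[str], str]) -> str:
    """Orchestrate one round trip: action -> prompt -> model -> refined reply."""
    return refine_output(llm(action_to_prompt(action)))


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call (an assumption); returns a canned reply."""
    return "  The text describes\n a release plan  "


reply = run_copilot(UserAction("select_text", "We ship v2 in March."), fake_llm)
print(reply)
```

Passing the model in as a plain callable keeps the orchestration testable without a network call; a real copilot would likely chain several such prompt and refinement steps.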

Diving deeper into the methodology underpinning AI copilots reveals a nuanced strategy. The crux of the matter lies in striking a balance between furnishing the AI with ample context and staying within constraints like token limits. The process entails breaking down prompts into distinct components, including examples, instructions, and templates, and dynamically modifying them based on user inputs. This meticulous approach is meant to keep the AI’s responses precise and contextually pertinent, in line with the user’s requirements. However, it necessitates ongoing adjustments and fine-tuning, transforming the role of the software engineer into a dynamic and iterative one.
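The trade-off between context and token limits can be sketched in a few lines. The example below assembles a prompt from instructions, examples, and a template, and adds examples only while a token budget allows. It is an illustrative sketch under stated assumptions: `build_prompt` and `count_tokens` are hypothetical names, and tokens are approximated by whitespace-separated words, which real tokenizers do not do.

```python
from typing import List


def count_tokens(text: str) -> int:
    """Rough token estimate: whitespace-separated words.
    This is an assumption; real tokenizers count differently."""
    return len(text.split())


def build_prompt(instructions: str, examples: List[str], template: str,
                 user_input: str, token_limit: int) -> str:
    """Assemble a prompt, adding examples only while the budget allows."""
    query = template.format(user_input=user_input)
    used = count_tokens(instructions) + count_tokens(query)
    if used > token_limit:
        raise ValueError("instructions and query alone exceed the token limit")

    kept = []
    for example in examples:  # examples are assumed to be ordered by priority
        cost = count_tokens(example)
        if used + cost > token_limit:
            break  # stop at the first example that does not fit
        kept.append(example)
        used += cost
    return "\n\n".join([instructions, *kept, query])


prompt = build_prompt(
    instructions="Answer briefly.",
    examples=[
        "Q: What is 2+2? A: 4",
        "Q: Name the capital of France. A: Paris",
    ],
    template="Q: {user_input} A:",
    user_input="What is 3+3?",
    token_limit=15,
)
print(prompt)
```

With a limit of 15 word-tokens, the instructions and the query take 7, the first example fits at 6 more, and the second is dropped. Which component to sacrifice first (examples here, never the instructions or the query) is a design choice that typically needs the iterative tuning described above.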

The implementation of AI copilots has ushered in significant advancements in user-software interactions. Through refined prompt engineering, AI models have achieved heightened accuracy and relevance in their responses. Nevertheless, evaluating the performance of these copilots remains a formidable task. Establishing benchmarks for performance evaluation is imperative, alongside ensuring that these systems uphold the highest standards of safety, privacy, and regulatory compliance.

Conclusion:

The emergence of AI product copilots signifies a transformative shift in software integration. While they promise improved user experiences, the challenges of AI integration and the need for continuous refinement highlight the dynamic nature of this market. Businesses should invest in innovative solutions and prioritize user-centric reliability to thrive in this evolving landscape.