TL;DR:

- Neuchips will showcase the Raptor Gen AI accelerator chip and Evo PCIe card LLM solutions at CES 2024.

- The Raptor chip delivers up to 200 TOPS per chip for AI inferencing.

- The Evo card pairs PCIe Gen 5 with 32GB of LPDDR5 memory while drawing just 55 watts per card.

- The modular design of Evo ensures scalability and future-proofing.

- Neuchips’ solutions significantly reduce hardware costs and energy consumption.

- Businesses can harness LLMs for various AI applications, benefiting from improved cost-effectiveness.

Main AI News:

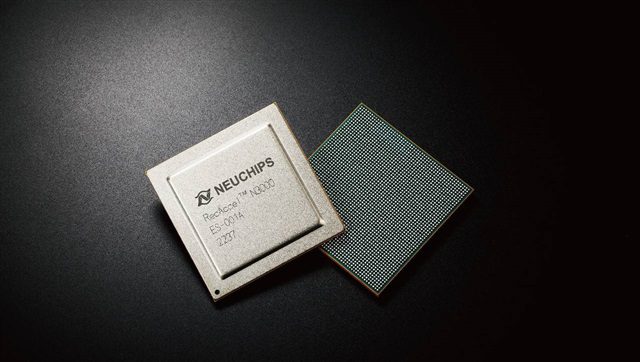

Neuchips, a renowned leader in AI Application-Specific Integrated Circuits (ASIC) solutions, is set to showcase its groundbreaking Raptor Gen AI accelerator chip (formerly known as N3000) and Evo PCIe accelerator card LLM solutions at CES 2024. The Raptor chip, a game-changing innovation, empowers enterprises to embrace large language models (LLMs) for inference tasks at a fraction of the cost of existing solutions.

Raptor Gen AI Accelerator Redefines LLM Performance

Ken Lau, CEO of Neuchips, expressed his enthusiasm about the upcoming showcase, stating, “We are thrilled to introduce our Raptor chip and Evo card to the industry at CES 2024. Neuchips’ solutions mark a monumental shift in the price-to-performance ratio for natural language processing. With Neuchips, organizations of all sizes can harness the capabilities of LLMs for a wide array of AI applications.”

Democratizing Access to LLMs

Together, the Raptor chip and Evo card present a seamlessly optimized stack that democratizes access to market-leading LLMs for enterprises. Neuchips’ AI solutions not only significantly reduce hardware costs compared to existing alternatives but also prioritize high energy efficiency, resulting in lower electricity consumption and an overall reduction in the total cost of ownership.

At CES 2024, Neuchips will demonstrate Raptor and Evo by accelerating the Whisper speech-to-text model and the Llama large language model within a Personal AI Assistant application. The demonstration is intended to show how LLM inferencing can address real business requirements.

Enterprises eager to experience Neuchips’ breakthrough performance can visit booth 62700 to participate in a free trial program. In addition, technical sessions will shed light on how Raptor and Evo can drastically cut deployment costs for speech-to-text applications.

Raptor Gen AI Accelerator: Paving the Way for Breakthrough LLM Performance

The Raptor chip delivers up to 200 tera operations per second (TOPS) per chip. Its strong performance in AI inferencing operations, such as matrix multiplication, vector operations, and embedding table lookups, makes it well suited to Gen-AI and transformer-based AI models. Neuchips attributes this throughput to its patented compression techniques and efficiency optimizations tuned for neural networks.

Evo Gen 5 PCIe Card Sets a New Benchmark for Acceleration and Energy Efficiency

Complementing the Raptor chip is Neuchips’ low-power Evo acceleration card. Evo combines an eight-lane PCIe Gen 5 interface with 32GB of LPDDR5 memory, delivering 64GB/s of host I/O bandwidth and 1.6 Tbps of memory bandwidth, all while consuming just 55 watts per card.
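For readers who want to sanity-check the bandwidth figures, the short Python sketch below works through the arithmetic. It assumes the standard PCIe Gen 5 signalling rate of 32 GT/s per lane with 128b/130b encoding, and it assumes that the 1.6 Tbps memory figure means terabits per second; neither assumption is confirmed by Neuchips, and the function names are illustrative only.

```python
# Assumptions (not from Neuchips): standard PCIe Gen 5 signalling parameters.
GT_PER_S_PER_LANE = 32            # raw rate per lane, gigatransfers per second
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line encoding
LANES = 8                         # lane count quoted in the article


def pcie_bandwidth_gb_s(lanes: int = LANES) -> float:
    """Bandwidth in GB/s for one direction, before protocol overhead."""
    gigabits_per_s = GT_PER_S_PER_LANE * ENCODING_EFFICIENCY * lanes
    return gigabits_per_s / 8     # bits to bytes


def tbps_to_gb_s(tbps: float) -> float:
    """Convert terabits per second to gigabytes per second."""
    return tbps * 1000 / 8


one_way = pcie_bandwidth_gb_s()
both_ways = 2 * one_way
memory_gb_s = tbps_to_gb_s(1.6)

print(f"PCIe Gen 5 x8, one direction:   {one_way:.1f} GB/s")
print(f"PCIe Gen 5 x8, both directions: {both_ways:.1f} GB/s")
print(f"1.6 Tbps memory bandwidth:      {memory_gb_s:.0f} GB/s")
```

Under these assumptions an eight-lane Gen 5 link carries roughly 31.5 GB/s in each direction, so the quoted 64GB/s appears to be a rounded total for both directions combined, and 1.6 Tbps of memory bandwidth corresponds to 200 GB/s.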

As demonstrated with the DLRM recommendation workload, Evo also offers 100% scalability, meaning performance increases linearly as customers add more chips. This modular design helps protect existing investments and leaves room to accommodate future AI workloads.
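As a rough illustration of what linear scaling implies, the Python sketch below computes the ideal aggregate peak for several chips and a simple scaling-efficiency ratio. The only figure taken from the article is the "up to 200 TOPS per chip" number; the function names and the efficiency definition are assumptions made for illustration, not Neuchips' methodology.

```python
PEAK_TOPS_PER_CHIP = 200  # "up to" figure quoted in the article


def ideal_peak_tops(chips: int) -> int:
    """Aggregate peak TOPS if performance scales perfectly linearly."""
    return chips * PEAK_TOPS_PER_CHIP


def scaling_efficiency(single_chip: float, multi_chip: float, chips: int) -> float:
    """Measured multi-chip throughput divided by the ideal linear result.

    A value of 1.0 corresponds to the "100% scalability" described above.
    """
    return multi_chip / (chips * single_chip)


for chips in (1, 2, 4, 8):
    print(f"{chips} chip(s): ideal peak of {ideal_peak_tops(chips)} TOPS")
```

Real deployments would substitute measured throughput for a specific workload into `scaling_efficiency`, since peak TOPS alone does not show how well a model scales across chips.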

Viper, an upcoming product in the half-height half-length (HHHL) form factor, is slated for launch in the second half of 2024. The new series promises greater deployment flexibility, delivering data center-class AI acceleration in a compact and versatile design.

Conclusion:

Neuchips’ innovative Gen AI accelerators, Raptor and Evo, showcased at CES 2024, are poised to disrupt the market by providing cost-effective access to powerful AI inferencing capabilities. These solutions not only reduce hardware costs but also prioritize energy efficiency, making them an attractive proposition for businesses looking to leverage large language models in their AI applications. This development represents a significant advancement in the field and sets a new standard for AI acceleration, offering businesses a competitive edge in the market.