TL;DR:

- Neuchips, an AI accelerator manufacturer, shifts focus to LLM inference from recommendation workloads.

- New CEO Ken Lau leads the strategic transition, with former CEO YounLong Lin becoming the chairman.

- Neuchips demonstrates Llama2-7B running at 60 tokens/second on a single-chip PCIe card and 240 tokens/second on a four-chip PCIe card.

- Neuchips offers a single-chip PCIe card with 55W TDP and 33-GB LPDDR5 memory, as well as an M.2 card with a 25W TDP.

- A new four-chip card with a 300W TDP and 256-GB LPDDR5 memory provides 6.4 Tbps of memory bandwidth.

- Llama2 performance scales linearly, reaching 1,920 tokens/second with eight quad-chip cards.

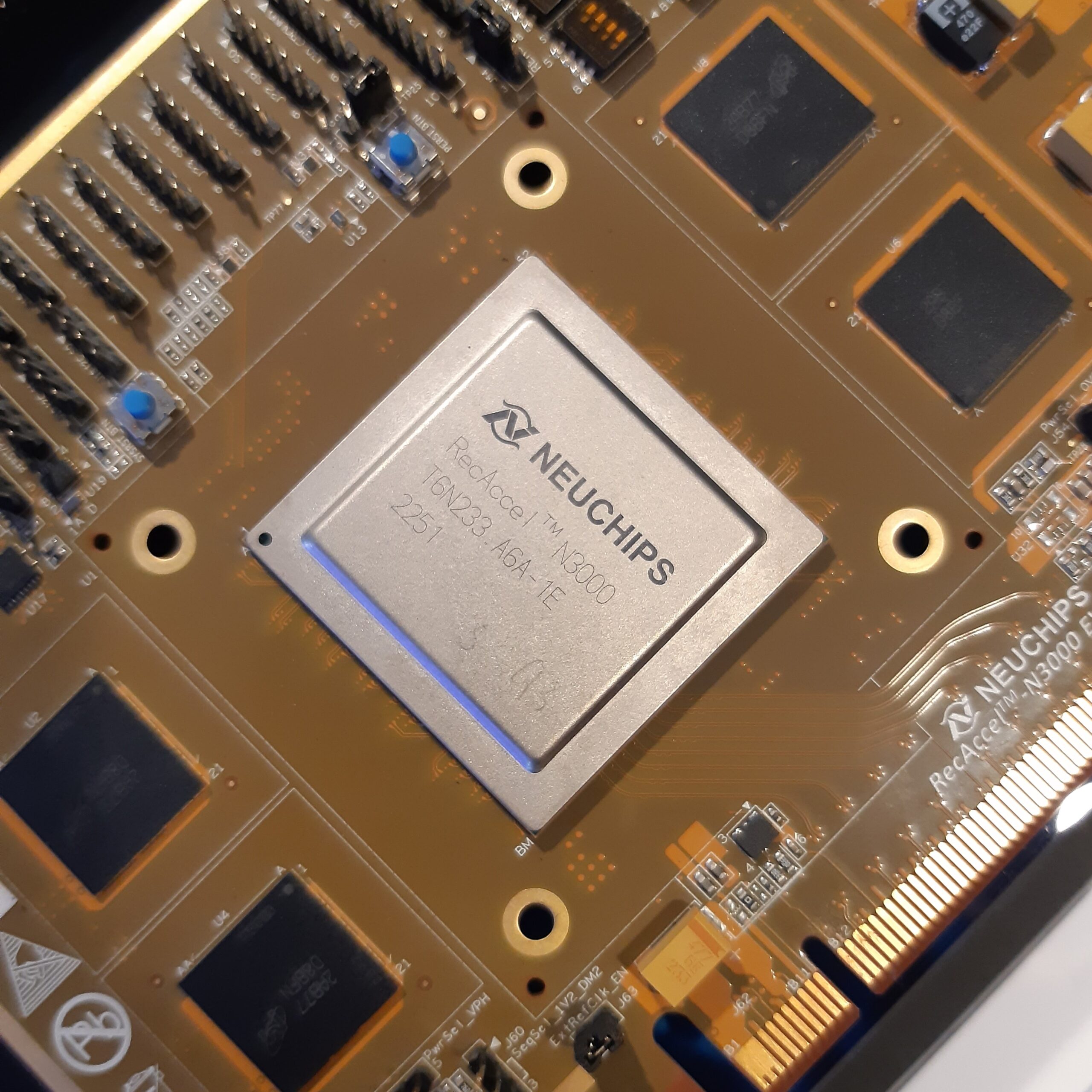

- The RecAccel chip is rebranded as the N3000, keeping the same silicon and software stack used in Neuchips' MLPerf inference results.

- Neuchips remains committed to hyperscale recommendation workloads while exploring the growing LLM market.

Main AI News:

Taiwanese AI accelerator manufacturer Neuchips is setting its sights on LLM inference, a strategic shift away from its original focus on recommendation workloads. Ken Lau, the newly appointed CEO and a former general manager at Intel Taiwan, described the change in strategy to EE Times. YounLong Lin, the previous CEO, has become company chairman.

The company has demonstrated Meta's Llama2-7B model running on its hardware. On a single-chip PCIe card, it generates 60 tokens per second while serving a batch of 16 simultaneous users. On a four-chip PCIe card, throughput rises to 240 tokens per second.

The existing single-chip PCIe card is a full-height, full-length design carrying Neuchips' domain-specific AI accelerator, with a thermal design power (TDP) of 55 watts. The chip is paired with 33 GB of LPDDR5 memory, providing 1.6 terabits per second of memory bandwidth. Neuchips also offers an M.2 card with a 25-watt TDP and the same memory capacity and bandwidth.

Neuchips has also introduced a new four-chip card with a combined TDP of 300 watts for the accelerators. It carries 256 GB of LPDDR5 memory with 6.4 terabits per second of memory bandwidth. Llama2 performance scales linearly across up to eight quad-chip cards (32 chips in total), reaching 1,920 tokens per second.
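The quoted figures are consistent with simple linear scaling: 4 × 60 = 240 tokens/second per quad-chip card, 32 × 60 = 1,920 tokens/second for eight cards, and 4 × 1.6 = 6.4 Tbps of bandwidth per card. The short Python sketch below reproduces that arithmetic. It assumes perfectly linear scaling and that 60 tokens/second is the aggregate rate for the whole 16-user batch on one chip; the constant and function names are hypothetical, chosen only for illustration.

```python
# Idealized scaling arithmetic for the Llama2-7B figures quoted in the article.
# Assumptions (not vendor data): throughput and bandwidth grow exactly linearly
# with chip count, and 60 tokens/s is the aggregate rate for a 16-user batch.

TOKENS_PER_SEC_PER_CHIP = 60.0   # single-chip PCIe card, Llama2-7B
USERS_PER_BATCH = 16             # concurrent users served in one batch
BANDWIDTH_TBPS_PER_CHIP = 1.6    # LPDDR5 bandwidth of the single-chip card
CHIPS_PER_QUAD_CARD = 4


def aggregate_tokens_per_sec(num_chips: int) -> float:
    """Total tokens/s if every chip contributes the same throughput."""
    return num_chips * TOKENS_PER_SEC_PER_CHIP


def aggregate_bandwidth_tbps(num_chips: int) -> float:
    """Total memory bandwidth (Tbps), assuming each chip has its own LPDDR5."""
    return num_chips * BANDWIDTH_TBPS_PER_CHIP


def per_user_tokens_per_sec() -> float:
    """Tokens/s seen by each user if one chip serves one 16-user batch."""
    return TOKENS_PER_SEC_PER_CHIP / USERS_PER_BATCH


if __name__ == "__main__":
    for cards in (1, 8):
        chips = cards * CHIPS_PER_QUAD_CARD
        print(f"{cards} quad-chip card(s), {chips} chips: "
              f"{aggregate_tokens_per_sec(chips):.0f} tokens/s, "
              f"{aggregate_bandwidth_tbps(chips):.1f} Tbps")
    print(f"per user: {per_user_tokens_per_sec():.2f} tokens/s")
```

Under these assumptions each user in a 16-user batch would see about 3.75 tokens per second. The results are idealized values derived from the quoted numbers, not measurements.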

Neuchips' RecAccel chip has been rebranded as the N3000. Despite the new name, it uses the same silicon and software stack as the company's MLPerf inference results. Neuchips remains committed to serving hyperscalers with recommendation workloads while pursuing opportunities in the growing LLM market.

Conclusion:

Neuchips' pivot toward LLM inference is a significant move in the AI accelerator market. With demonstrated Llama2 throughput on its PCIe cards and a continued focus on hyperscale recommendation workloads, Neuchips is positioned to compete for opportunities in the expanding LLM market.