TL;DR:

- Brain-inspired neural networks show promise in surpassing human capabilities.

- The opacity of AI decision-making raises concerns about reliability.

- A new study visualizes neural network relationships to shed light on their decision-making processes.

- The technique maps connections between images and classifications, pinpointing areas of confusion.

- The tool unveils errors in training data, highlighting human fallibility.

- Applications include healthcare, criminal justice, and bias detection in predictions.

Main AI News:

Brain-inspired neural networks promise to outperform humans in domains ranging from identifying cancer-related mutations to deciding loan eligibility. Yet these AI systems remain opaque, which makes it hard to judge their reliability. A new study offers a way to examine how neural networks reach their decisions, including the cases where they err.

As neural networks process a data set, they focus on fine details within each sample, such as possible facial features in an image or genetic markers in a sequence. The network represents these details numerically and uses those numbers to estimate the likelihood that a sample belongs to a specific category, for example whether an image shows a human face.
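The article does not say how the network turns its numbers into likelihoods. A common choice in image classifiers is the softmax function, so the sketch below assumes that; the class names and scores are made up for illustration.

```python
import math

def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    shift = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores for the classes ["face", "not face"].
probs = softmax([2.0, 0.5])
```

Here `probs[0]` is the estimated likelihood that the sample shows a face. A higher raw score always maps to a higher probability, and equal scores map to equal probabilities.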

However, how neural networks learn to solve problems is often a mystery. Their ‘black box’ nature makes it difficult to tell whether their answers are correct.

The new study did not try to follow the decision-making process for individual test samples. Instead, David Gleich, a computer science professor at Purdue University, and his team visualized the relationships these AI systems perceive across an entire database. Gleich states, “I’m still amazed at how helpful this technique is to help us understand what a neural network might be doing to make a prediction.”

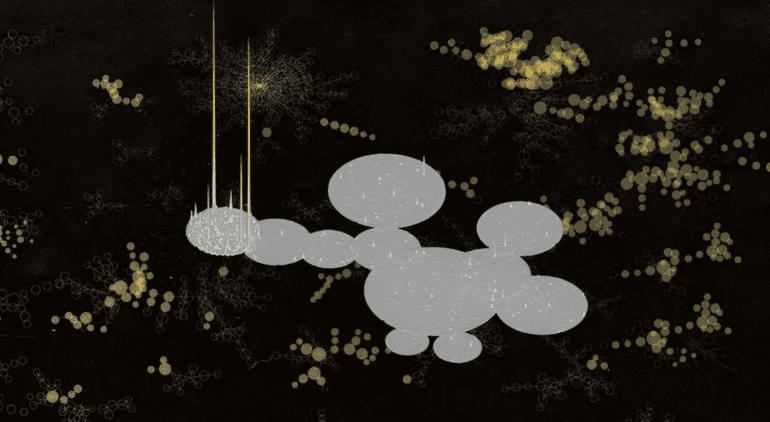

The researchers worked with a neural network trained on approximately 1.3 million images from the ImageNet database. They devised a method of splitting and overlapping classifications that flags images with a high probability of belonging to more than one category. Drawing on topology, the branch of mathematics that studies properties of geometric objects that are preserved under continuous deformation, they mapped the connections the neural network inferred between each image and classification.
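To make the idea of an image that fits more than one category concrete, here is a minimal sketch. It is not the researchers' topological method: it only applies a simple threshold to per-class probabilities. The function name, the threshold of 0.3, and the sample values are all assumptions for illustration.

```python
def flag_ambiguous(probabilities, threshold=0.3):
    """Return indices of samples where two or more classes reach the threshold."""
    flagged = []
    for i, probs in enumerate(probabilities):
        strong = [p for p in probs if p >= threshold]
        if len(strong) >= 2:
            flagged.append(i)
    return flagged

# Hypothetical per-class probabilities for three images and three classes.
predictions = [
    [0.95, 0.03, 0.02],  # clearly one class
    [0.48, 0.47, 0.05],  # split between the first two classes
    [0.10, 0.55, 0.35],  # split between the last two classes
]
ambiguous = flag_ambiguous(predictions)
```

With these values the second and third images are flagged. Raising the threshold narrows the set to images where the competing classes are almost equally likely.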

The resulting maps show each group of related images as a single dot, color-coded by classification. Dots that sit closer together represent more similar groups, and most of the map consists of clusters of similarly colored dots. However, images with significant overlap between classifications are represented by two distinct, differently colored dots. Gleich explains, “Our tool allows us to build something like a map that makes it possible to zoom in on regions of data… It highlights specific data predictions that are worth investigating further.”

The technique lets observers identify areas where the network struggles to tell classifications apart and form hypotheses about how it works. Gleich elaborates, “This allowed us to forecast how the network would respond to totally new inputs based on the relationships suggested.”

In their experiments, the research team observed neural networks making misclassifications across various databases, including chest X-rays, gene sequences, and apparel. For instance, one network consistently misidentified images of cars as cassette players because the images came from online sales listings that carried tags for stereo equipment.

Moreover, the tool proved adept at uncovering errors in the training data itself, underscoring human fallibility in data labeling. Gleich notes, “People do make mistakes when they hand-label data.”
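The article does not describe how the tool surfaces labeling mistakes. One generic heuristic, shown here only as an assumption and not as the team's approach, is to list samples where the network confidently predicts a class that differs from the human label. The function name, the 0.9 cutoff, and the data are hypothetical.

```python
def suspect_labels(probabilities, labels, min_confidence=0.9):
    """Return (index, given_label, predicted_label) for confident disagreements."""
    suspects = []
    for i, (probs, label) in enumerate(zip(probabilities, labels)):
        # Index of the class with the highest predicted probability.
        predicted = max(range(len(probs)), key=probs.__getitem__)
        if predicted != label and probs[predicted] >= min_confidence:
            suspects.append((i, label, predicted))
    return suspects

# Hypothetical predictions and hand-assigned labels for three samples.
probabilities = [[0.97, 0.03], [0.20, 0.80], [0.05, 0.95]]
labels = [0, 1, 0]
suspects = suspect_labels(probabilities, labels)
```

A flagged sample is only a candidate for review. The network itself may be wrong, so a person still has to check each case.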

This analytical approach is particularly promising for high-stakes neural-network applications such as healthcare and criminal justice. Gleich and his team even tried applying it to a network that assesses the likelihood of repeat criminal offenses, although the results were inconclusive because of data limitations.

Critics argue that neural networks, influenced by historical biases, perpetuate societal inequities. Gleich suggests that using the tool to detect bias in predictions could be a substantial step forward.

Conclusion:

Tools that detect errors and expose weaknesses in AI systems are a significant advance. They improve transparency and reliability, which benefits fields that rely on AI applications, such as healthcare and criminal justice. Detecting bias in predictions is also a crucial step toward improving AI’s societal impact and building trust in AI technologies.