TL;DR:

- New Relic introduces advanced AI capabilities to its observability platform.

- The new AI features aim to identify and close alert coverage gaps for DevOps teams.

- Machine learning algorithms detect potential issues and recommend alerts.

- DevOps teams can customize alerts based on AI recommendations.

- AI helps set accurate monitoring thresholds to eliminate blind spots.

- New Relic uses both predictive and generative AI to speed up issue detection and resolution.

- New Relic aims to make observability more accessible by surfacing issues without complex queries.

- AI capabilities reduce alert noise and improve issue comprehension.

- Trust in AI recommendations grows over time as AI models adapt to IT environments.

- Debate continues over how best to structure the large language models behind generative AI.

- DevOps teams are advised to list tasks that AI could automate.

- The market impact centers on improved DevOps efficiency and growing trust in AI.

Main AI News:

This week, New Relic, a provider of observability platforms, added advanced artificial intelligence (AI) capabilities to its platform. The new features target a common challenge for DevOps teams: finding and closing gaps in alert coverage.

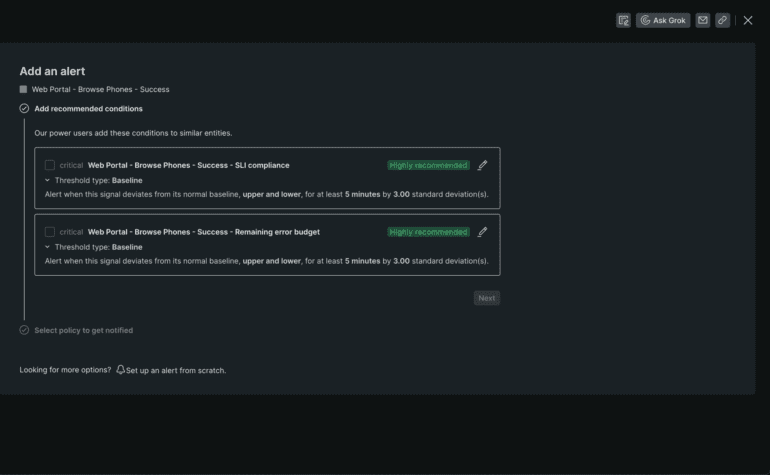

According to Camden Swita, a senior product manager at New Relic, DevOps teams often discover critical issues that their existing alerts were never set up to catch. To address this, New Relic is adding machine learning algorithms to the platform at no additional cost. The algorithms look for anomalies that could indicate impending issues, and the platform then recommends alerts that DevOps teams can apply as-is or refine to suit their needs, Swita explained.

These algorithms help ensure that DevOps teams monitor the right thresholds, eliminating blind spots that could otherwise hide application performance problems, Swita noted.
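To make the idea of anomaly-based threshold recommendations concrete, here is a minimal Python sketch of one simple approach, assuming a static threshold of the baseline mean plus k sample standard deviations. The function names, the `k=3.0` default, the alert dictionary shape, and the sample latency values are all hypothetical illustrations; this is not New Relic's algorithm, which the article does not describe.

```python
from statistics import mean, stdev

# Hypothetical sketch: a k-sigma baseline, not New Relic's actual algorithm.


def recommend_threshold(baseline, k=3.0):
    """Return mean + k * sample standard deviation of the baseline window."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline samples")
    return mean(baseline) + k * stdev(baseline)


def find_anomalies(baseline, recent, k=3.0):
    """Return (index, value) pairs in `recent` that exceed the threshold."""
    threshold = recommend_threshold(baseline, k)
    return [(i, v) for i, v in enumerate(recent) if v > threshold]


def recommend_alert(metric, baseline, k=3.0):
    """Build a hypothetical alert suggestion that a team could accept or tune."""
    return {
        "metric": metric,
        "operator": "above",
        "threshold": round(recommend_threshold(baseline, k), 1),
    }


if __name__ == "__main__":
    # Illustrative response-time samples in milliseconds (made-up data).
    baseline = [120, 118, 125, 122, 119, 121, 124, 120]
    recent = [123, 180, 121]
    print(find_anomalies(baseline, recent))
    print(recommend_alert("response_time_ms", baseline))
```

With this made-up baseline the suggested threshold comes out at about 128.4 ms, so only the 180 ms sample is flagged. A fixed k-sigma rule is deliberately simple: it ignores daily or weekly seasonality, which is one reason recommendations like these benefit from human review before a team adopts them.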

New Relic is using both predictive and generative AI to help DevOps teams detect and resolve issues faster. The company has used machine learning algorithms for predictive AI for some time, and it has now also opened early access to a generative AI tool named Grok.

The overarching goal, Swita said, is to make observability platforms accessible to more people by surfacing issues directly, so software engineers no longer need to write intricate queries against metrics, logs, and traces to find them.

The AI capabilities reduce alert noise and improve understanding of the root causes of issues, which in turn cuts down on superfluous alerts that can overwhelm a team's workflow, Swita added.
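Alert-noise reduction can take many forms. As one illustration, here is a minimal Python sketch of time-window correlation, a common technique that collapses alerts from the same entity that fire close together into a single incident. The `Alert` fields, the 300-second window, and the sample entities are hypothetical; the article does not say how New Relic reduces noise.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    entity: str       # service or host that fired the alert (hypothetical field)
    timestamp: float  # seconds since epoch
    message: str


def group_alerts(alerts, window=300.0):
    """Group alerts per entity: an alert joins the entity's current incident
    if it fires within `window` seconds of that incident's latest alert."""
    incidents = []
    latest_group = {}  # entity -> most recent incident (a list of alerts)
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        group = latest_group.get(alert.entity)
        if group is not None and alert.timestamp - group[-1].timestamp <= window:
            group.append(alert)
        else:
            group = [alert]
            latest_group[alert.entity] = group
            incidents.append(group)
    return incidents


if __name__ == "__main__":
    raw = [
        Alert("checkout", 0, "high latency"),
        Alert("payments", 30, "error rate spike"),
        Alert("checkout", 60, "high latency"),
        Alert("checkout", 120, "CPU saturation"),
        Alert("checkout", 1000, "high latency"),
    ]
    incidents = group_alerts(raw)
    print(f"{len(raw)} alerts -> {len(incidents)} incidents")
```

Here five raw alerts collapse into three incidents. A production system might also correlate across related entities (for example, a database and the services that depend on it), which this sketch deliberately leaves out.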

Even as AI adoption accelerates, a pertinent question remains: how much should DevOps teams trust AI-generated recommendations? AI models need time to learn the nuances of a given IT environment, so early recommendations may not always be accurate. Over time, however, DevOps teams are expected to shift into a supervisory role over processes executed by AI models.

There are also differing approaches to building the large language models (LLMs) that underpin generative AI. Swita pointed to an ongoing debate over whether chaining multiple LLMs together is more effective than building a single large ‘parent’ LLM that delegates to smaller, subsidiary models.

While that debate continues, a pragmatic first step is for DevOps teams to compile a list of tasks that AI models could automate. Many of these tasks are mundane, which makes them strong candidates for AI-based solutions that free software engineers from routine work. An open question is whether different forms of AI could one day automate the DevOps workflow end to end. Ultimately, most DevOps engineers will hold AI models to a high bar: matching, if not exceeding, human performance before they adopt them wholeheartedly.

Conclusion:

New Relic’s integration of advanced AI capabilities into its observability platform marks a notable step for DevOps teams. By using machine learning algorithms to identify and close alert coverage gaps, the platform lets teams customize recommended alerts to fit their environments. Combining predictive and generative AI speeds up issue resolution while reducing alert noise, improving overall efficiency. As AI models learn the specifics of each IT environment, trust in their recommendations will likely grow. This shift streamlines DevOps workflows and shapes AI’s broader role in the market as organizations pursue greater automation and operational agility.