- Nvidia introduces Mistral-NeMo-Minitron 8B, a lightweight, open-source language model.

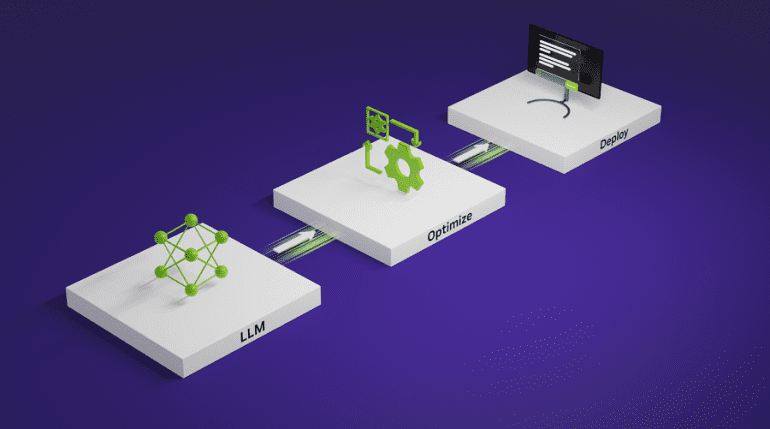

- The model uses pruning and distillation to reduce hardware needs while maintaining performance.

- Nvidia’s approach boosts efficiency, enabling the model to run on RTX-powered workstations and excel in AI tasks.

- Microsoft also launched three hardware-efficient models, including Phi-3.5-mini-instruct, which can process large volumes of data and outperforms some larger models.

- Microsoft’s models include Phi-3.5-vision-instruct for image analysis and Phi-3.5-MoE-instruct, a larger model designed for efficient inference.

- Both companies focus on AI models with low hardware requirements and high performance for diverse applications.

Main AI News:

Nvidia Corporation has unveiled Mistral-NeMo-Minitron 8B, a lightweight language model that outperforms comparably sized neural networks across various tasks. The model was released on Hugging Face under an open-source license, and its debut closely follows Microsoft’s recent launch of open-source language models designed for devices with limited processing capacity.

Mistral-NeMo-Minitron 8B is a streamlined version of the Mistral NeMo 12B model, introduced last month in collaboration with AI startup Mistral AI SAS. Nvidia developed this model using two key techniques: pruning, which reduces hardware requirements by removing a model's less essential components, and distillation, in which the knowledge of a larger model is transferred to a smaller, more hardware-efficient one. The resulting model has 4 billion fewer parameters than its predecessor and maintains high output quality while reducing costs and data needs.
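To make the two techniques concrete, here is a minimal Python sketch, not Nvidia's actual pipeline. It assumes the simplest textbook variants: magnitude pruning (zeroing the smallest weights) and a distillation loss based on the KL divergence between a "teacher" and a "student" output distribution. The function names, the toy numbers, and the temperature value are all illustrative assumptions; the article does not specify which variants Nvidia used.

```python
import math

def prune_by_magnitude(weights, keep_fraction):
    """Zero out the smallest-magnitude weights, keeping about keep_fraction of them."""
    keep = max(1, round(len(weights) * keep_fraction))
    # Indices of the `keep` largest weights by absolute value.
    ranked = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    keep_idx = set(ranked[:keep])
    return [w if i in keep_idx else 0.0 for i, w in enumerate(weights)]

def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) over temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Toy data (hypothetical): six weights, half of which are pruned away.
pruned = prune_by_magnitude([0.5, -0.01, 2.0, 0.1, -1.5, 0.02], keep_fraction=0.5)

# The loss is zero when the student matches the teacher and positive otherwise.
loss_same = distillation_loss([2.0, 1.0, 0.1], [2.0, 1.0, 0.1])
loss_diff = distillation_loss([2.0, 1.0, 0.1], [0.1, 1.0, 2.0])
```

In this toy setup, training the smaller model would mean adjusting its weights to drive the distillation loss toward zero, so that its outputs track those of the larger model.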

According to Nvidia executive Kari Briski, the company’s approach has significantly boosted the efficiency of Mistral-NeMo-Minitron 8B, making it capable of running on an Nvidia RTX-powered workstation while excelling in AI benchmarks for chatbots, virtual assistants, content generators, and educational tools.

Nvidia’s release coincides with Microsoft’s launch of three hardware-efficient language models, including the compact Phi-3.5-mini-instruct. With 3.8 billion parameters, it can process large volumes of data and outperforms models like Llama 3.1 8B and Mistral 7B. Microsoft also introduced Phi-3.5-vision-instruct for image analysis and Phi-3.5-MoE-instruct, a larger model with 60.8 billion parameters. It is designed to activate only a fraction of those parameters during inference, which reduces hardware demands.
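The idea of activating only a fraction of a model's parameters can be illustrated with a toy mixture-of-experts router. The sketch below is a generic top-k routing example under stated assumptions, not Microsoft's implementation: the four scalar "experts", the gate scores, and the choice of k = 2 are hypothetical values chosen for illustration.

```python
import math

def top_k_route(gate_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)
    top = ranked[:k]
    # Softmax over the selected scores only, so the weights sum to 1.
    peak = max(gate_scores[i] for i in top)
    exps = [math.exp(gate_scores[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_forward(x, experts, gate_scores, k=2):
    """Combine the outputs of the selected experts; the others are never called."""
    return sum(weight * experts[i](x) for i, weight in top_k_route(gate_scores, k))

calls = []  # records which experts actually ran

def make_expert(scale):
    """Build a toy expert that multiplies its input by `scale` (hypothetical stand-in)."""
    def expert(x):
        calls.append(scale)
        return scale * x
    return expert

experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
output = moe_forward(10.0, experts, gate_scores=[0.1, 2.0, 0.3, 2.0], k=2)
```

Only two of the four toy experts run for this input, which is why a mixture-of-experts model can hold many parameters while doing far less computation per request.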

Both Nvidia and Microsoft are advancing AI technology, offering powerful and efficient models to meet the increasing needs of various industries.

Conclusion:

Nvidia and Microsoft’s focus on hardware-efficient AI models signals a shift in the AI landscape. By optimizing performance while minimizing computational requirements, these companies are addressing a critical market need for scalable, cost-effective AI solutions. This move will likely accelerate AI adoption across industries, from content generation and virtual assistants to enterprise applications like document analysis. As competition intensifies, companies that deliver powerful yet accessible AI tools are well positioned to lead the market and shape the future of AI-driven business solutions.