TL;DR:

- Nvidia unveiled the HGX H200 Tensor Core GPU, leveraging Hopper architecture to accelerate AI applications.

- Easing the compute bottleneck that has held back AI progress could enable more capable AI models and faster response times.

- The H200 targets data center AI workloads rather than graphics; like other data center GPUs, it excels at the parallel matrix multiplications that neural networks rely on.

- It is the first GPU with HBM3e memory, offering 141GB of capacity and 4.8TB/s of bandwidth, which Nvidia says is 2.4 times the bandwidth of the Nvidia A100.

- It will be available in several form factors, and major cloud providers, including AWS, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure, plan to deploy it.

- Nvidia adapts to export restrictions with scaled-back AI chips for the Chinese market.

Main AI News:

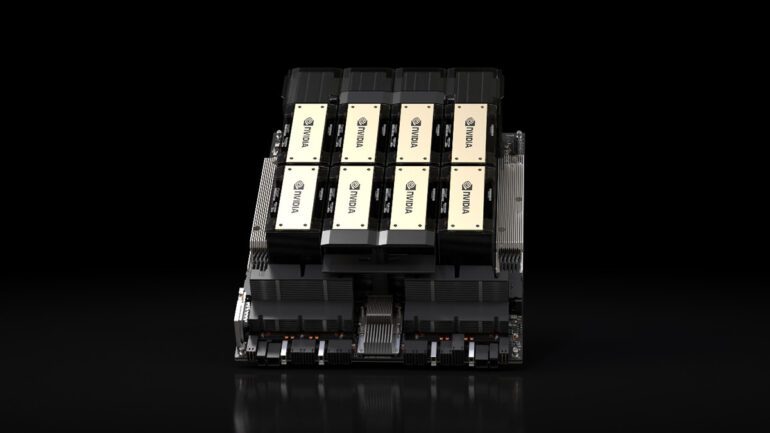

On Monday, Nvidia announced the HGX H200 Tensor Core GPU, which uses the company’s Hopper architecture to accelerate AI applications. It succeeds the H100, released last year as Nvidia’s flagship AI GPU. If widely adopted, the H200 could enable more capable AI models and noticeably faster response times for existing applications like ChatGPT.

The Challenges of Compute Bottlenecks in AI Progress

Over the past year, experts have identified the scarcity of computing power, commonly called “compute,” as a major obstacle to AI advancement. The shortage has hindered the deployment of existing AI models and slowed the development of new ones, and its main cause has been a lack of GPUs powerful enough to accelerate AI models. One way to ease the bottleneck is to manufacture more chips; the other is to make each AI chip more powerful. It is this second approach that makes the H200 an attractive product for cloud providers.

Unlocking the Potential of the H200

Despite being a graphics processing unit, the H200 isn’t primarily designed for graphics. Data center GPUs like the H200 suit AI applications because they can perform vast numbers of matrix multiplications in parallel, the core operation of neural networks. They are central both to training AI models and to “inference,” the phase in which users feed data into a trained model and receive results in real time.
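To make the point about parallel matrix multiplications concrete, here is a minimal plain-Python sketch of one fully connected neural-network layer during inference. The layer, its weights, and its inputs are hypothetical toy values; real frameworks hand this work to GPU kernels rather than Python loops. What it illustrates is that every output cell is an independent dot product, which is exactly the kind of work a GPU spreads across thousands of cores at once.

```python
from concurrent.futures import ThreadPoolExecutor

Matrix = list[list[float]]

def dot(row: list[float], col: list[float]) -> float:
    """One output cell: the dot product of an input row and a weight column."""
    return sum(a * b for a, b in zip(row, col))

def matmul(x: Matrix, w: Matrix) -> Matrix:
    """Multiply an (n x k) input batch by a (k x m) weight matrix.

    Every one of the n * m output cells depends only on one row of x and one
    column of w, so all cells can be computed independently. The thread pool
    stands in for a GPU's many parallel cores; it shows the structure of the
    work, not a speedup (CPython threads do not run this arithmetic in parallel).
    """
    cols = [list(c) for c in zip(*w)]  # transpose w so each column is a list
    cells = [(i, j) for i in range(len(x)) for j in range(len(cols))]
    with ThreadPoolExecutor() as pool:
        values = list(pool.map(lambda ij: dot(x[ij[0]], cols[ij[1]]), cells))
    out = [[0.0] * len(cols) for _ in x]
    for (i, j), v in zip(cells, values):
        out[i][j] = v
    return out

def dense_layer(x: Matrix, w: Matrix, b: list[float]) -> Matrix:
    """Inference pass of one toy layer: ReLU(x @ w + b)."""
    return [[max(0.0, v + bias) for v, bias in zip(row, b)] for row in matmul(x, w)]

if __name__ == "__main__":
    x = [[1.0, 2.0], [3.0, 4.0]]              # hypothetical batch: 2 inputs, 2 features
    w = [[0.5, -1.0, 2.0], [1.0, 0.0, -0.5]]  # hypothetical weights: 2 features -> 3 outputs
    b = [0.0, 0.5, 0.0]                       # hypothetical biases
    print(dense_layer(x, w, b))
```

Training runs the same multiplications many more times (forward and backward passes over large datasets), which is why both phases lean so heavily on GPUs.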

Ian Buck, Vice President of Hyperscale and HPC at Nvidia, stressed the role of fast memory: “To create intelligence with generative AI and HPC applications, vast amounts of data must be efficiently processed at high speed using large, fast GPU memory.” Nvidia says the H200 marks a significant step forward for what it calls the industry’s leading end-to-end AI supercomputing platform in addressing some of the world’s most pressing challenges.

A Solution for AI Resource Constraints

OpenAI illustrates the problem: the company has repeatedly cited GPU shortages as a cause of slowdowns in services like ChatGPT and has resorted to rate limiting to cope. Adoption of the H200 could give existing AI language models more headroom, letting them serve more users without sacrificing performance.
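Rate limiting of this kind is commonly built on a token bucket. The article does not say how OpenAI enforces its limits, so the following is only a generic, hypothetical sketch: each client gets a bucket that refills at a fixed rate, and a request is served only if enough tokens are available. The class name and parameters are illustrative, not any provider’s API.

```python
import time
from collections.abc import Callable

class TokenBucket:
    """Generic token-bucket rate limiter (a hypothetical illustration).

    capacity:       largest burst of requests allowed at once.
    refill_per_sec: sustained number of requests allowed per second.
    """

    def __init__(self, capacity: float, refill_per_sec: float,
                 now: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self._now = now          # injectable clock, which makes testing easy
        self.tokens = capacity   # start with a full bucket
        self.last = now()

    def allow(self, cost: float = 1.0) -> bool:
        """Consume `cost` tokens and return True if available, else return False."""
        current = self._now()
        elapsed = current - self.last
        self.last = current
        # Credit tokens earned since the previous call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False

if __name__ == "__main__":
    bucket = TokenBucket(capacity=3, refill_per_sec=1.0)
    # Five back-to-back requests: the first three fit the burst allowance.
    print([bucket.allow() for _ in range(5)])
```

In this model, more GPU capacity is what would let an operator raise `refill_per_sec` or `capacity`, serving more requests before anyone is turned away.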

HBM3e Memory: Setting a New Standard

Nvidia’s H200 is the first GPU to offer HBM3e memory. With it, the H200 provides 141GB of memory and 4.8 terabytes per second of bandwidth, which Nvidia says is 2.4 times the bandwidth of the Nvidia A100, released in 2020. (Despite its age, the A100 remains in high demand because more powerful chips are in short supply.)
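A quick back-of-the-envelope calculation shows why bandwidth matters for response times. The sketch below uses the figures quoted above in decimal units and adds one labeled assumption: a hypothetical 13-billion-parameter model stored at 2 bytes per parameter. When a language model generates text one token at a time for a single user, it must stream roughly all of its weights from memory for each token, so memory bandwidth sets a floor on time per token. This is a deliberately simplified model that ignores compute time, caching, and batching.

```python
# Figures quoted in the article, in decimal units (1 TB = 1000 GB).
H200_MEMORY_GB = 141
H200_BANDWIDTH_TB_S = 4.8
CLAIMED_RATIO_VS_A100 = 2.4

# Hypothetical model, used only for illustration (not from the article).
MODEL_PARAMS = 13e9
BYTES_PER_PARAM = 2  # assumes 16-bit weights

def implied_a100_bandwidth_tb_s() -> float:
    """A100 bandwidth implied by Nvidia's "2.4 times" comparison."""
    return H200_BANDWIDTH_TB_S / CLAIMED_RATIO_VS_A100

def memory_sweep_ms(gigabytes: float, bandwidth_tb_s: float) -> float:
    """Milliseconds needed to read `gigabytes` of data once at `bandwidth_tb_s`."""
    return (gigabytes * 1e9) / (bandwidth_tb_s * 1e12) * 1e3

def min_ms_per_token(bandwidth_tb_s: float) -> float:
    """Bandwidth-only lower bound on time per generated token at batch size 1.

    Simplification: every token reads all weights once; compute time,
    caches, and batching are ignored.
    """
    weights_gb = MODEL_PARAMS * BYTES_PER_PARAM / 1e9
    return memory_sweep_ms(weights_gb, bandwidth_tb_s)

if __name__ == "__main__":
    a100 = implied_a100_bandwidth_tb_s()
    print(f"Implied A100 bandwidth: {a100:.1f} TB/s")
    sweep = memory_sweep_ms(H200_MEMORY_GB, H200_BANDWIDTH_TB_S)
    print(f"Reading all {H200_MEMORY_GB} GB of H200 memory once: {sweep:.1f} ms")
    for name, bw in (("A100 (implied)", a100), ("H200", H200_BANDWIDTH_TB_S)):
        print(f"{name}: at least {min_ms_per_token(bw):.1f} ms per token")
```

With these inputs the implied A100 figure is 2.0 TB/s, and the per-token floor falls from 13 ms to about 5.4 ms, the same 2.4x factor as the bandwidth. Real-world gains depend on far more than this single ratio.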

Versatile Deployment Options

Nvidia plans to offer the H200 in several form factors, including Nvidia HGX H200 server boards in four- and eight-way configurations that are compatible with both the hardware and software of existing HGX H100 systems. The H200 will also be integrated into the Nvidia GH200 Grace Hopper Superchip, which combines an Arm-based Grace CPU and a Hopper GPU in a single package for additional AI performance.
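For a rough sense of scale, the per-GPU figures quoted earlier can be summed across the four- and eight-way HGX H200 boards. This is a naive sketch: it simply multiplies the per-GPU numbers and ignores how memory is partitioned and shared over the GPU interconnect, so the totals are headline capacity figures, not measured performance. The `BoardTotals` type and `board_totals` function are hypothetical names for illustration.

```python
from dataclasses import dataclass

PER_GPU_MEMORY_GB = 141        # H200 figure quoted in the article
PER_GPU_BANDWIDTH_TB_S = 4.8   # H200 figure quoted in the article

@dataclass(frozen=True)
class BoardTotals:
    gpus: int
    memory_gb: int
    bandwidth_tb_s: float

def board_totals(gpus: int) -> BoardTotals:
    """Naively sum per-GPU memory and bandwidth across one HGX H200 board."""
    if gpus not in (4, 8):
        raise ValueError("HGX H200 boards come in four- and eight-way configurations")
    return BoardTotals(gpus, gpus * PER_GPU_MEMORY_GB, gpus * PER_GPU_BANDWIDTH_TB_S)

if __name__ == "__main__":
    for n in (4, 8):
        t = board_totals(n)
        print(f"{t.gpus}-way: {t.memory_gb} GB total memory, "
              f"{t.bandwidth_tb_s:.1f} TB/s aggregate bandwidth")
```

An eight-way board therefore carries 1,128 GB of GPU memory in total, enough to hold models far larger than a single GPU could.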

Leading Cloud Providers Embrace the H200

Amazon Web Services, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure have already committed to deploying H200-based instances starting next year. Nvidia also confirmed that the H200 will be available “from global system manufacturers and cloud service providers” beginning in the second quarter of 2024.

Navigating Export Restrictions

Against a backdrop of US export restrictions, Nvidia has been playing a cat-and-mouse game to hold on to its market presence. The restrictions aim to keep advanced technologies out of countries such as China and Russia. Nvidia responded by developing new chips designed to stay within the restrictions, but the US recently extended its bans to cover those chips as well.

According to a recent Reuters report, Nvidia is adapting again, this time with three scaled-back AI chips for the Chinese market: the HGX H20, L20 PCIe, and L2 PCIe. China accounts for about a quarter of Nvidia’s data center chip revenue. Two of the new chips comply with US restrictions, while the third falls into a “gray zone” and may be permissible with the appropriate license. Further developments in the relationship between the US government and Nvidia are likely in the coming months.

Conclusion:

Nvidia’s HGX H200 GPU marks a significant step forward in AI acceleration. By offering a more powerful AI chip, it targets the compute bottleneck constraining the AI market and gives cloud providers a way to improve their services. Meanwhile, Nvidia’s readiness to adapt to export restrictions should help it stay relevant in a rapidly evolving market.